To prepare an incident response runbook for your AI apps that you can put into practice tomorrow, start by establishing clear roles, monitoring key metrics for anomalies, and defining immediate containment steps. Add procedures for root cause analysis, data privacy, and transparent stakeholder notification, then test and update the plan regularly as new threats emerge. The sections below walk through how to build a resilient response strategy that keeps your AI systems secure and trustworthy.

Key Takeaways

- Establish clear detection protocols using monitoring tools, drift detection, and anomaly analysis to identify incidents early.

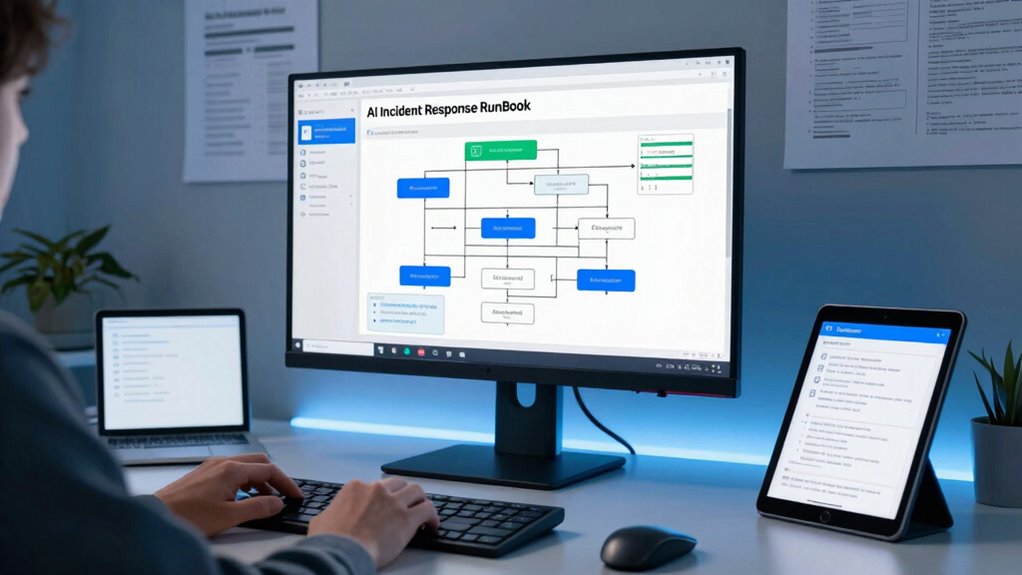

- Develop a detailed incident response runbook outlining roles, procedures, and escalation paths for swift action.

- Implement containment strategies such as isolating affected models and systems to prevent further damage.

- Conduct root cause analysis by reviewing logs, data inputs, and system configurations to address underlying issues.

- Ensure compliance and data privacy by prioritizing anonymization, adhering to regulations, and securing forensic evidence.

Why Incident Response Matters for AI Applications

Because AI applications are becoming integral to many critical systems, responding effectively to incidents matters more than ever. A swift response limits damage, and it also addresses ethical considerations such as maintaining user trust and avoiding biased outcomes. When incidents occur, transparency and accountability are essential for users to stay confident in the system's integrity. User education plays an important role as well: informing users about AI limitations and safe practices reduces risk and encourages responsible use. An effective incident response plan, grounded in sound incident management and risk assessment, demonstrates your commitment to ethical standards, keeps users informed, and reduces panic or confusion during a crisis. Documenting each incident lets you track issues and improve future responses. Ultimately, preparation not only minimizes harm but also sustains confidence in AI technologies as they become more embedded in everyday life.

Common AI Incidents and Their Causes

AI incidents can arise from many causes, most often design flaws, data issues, or operational errors. Poorly designed models may produce biased or unfair results, violating AI ethics and damaging trust. Data issues, such as contaminated, incomplete, or unrepresentative datasets, can lead to inaccurate or harmful outputs. Operational errors, like misconfigured systems or inadequate monitoring, can cause unexpected failures or security breaches. In addition, when users don't understand an AI system's capabilities and limitations, they may misuse it or misinterpret its outputs, which increases incident risk. Recognizing these common causes helps you implement preventive measures, adopt responsible AI practices, and respond effectively while maintaining ethical standards. Regularly testing your response procedures keeps your team ready to act swiftly on any system failure or security incident, and a solid grasp of software quality assurance principles helps you identify vulnerabilities early and maintain system integrity.

How to Prepare Your Team With an AI Incident Response Plan

Preparing your team with a solid AI incident response plan is essential to minimize damage when incidents occur. Start with ethical considerations, making sure your team understands the importance of responsible AI use and decision-making during crises. Build user education into the plan so team members can communicate effectively with affected users and address their concerns transparently. Train your staff regularly on incident procedures, focusing on immediate steps and ethical protocols, and document response workflows clearly so everyone knows their roles. On the infrastructure side, secure cloud services with reliable backup and recovery support data integrity and faster restoration after an incident. This proactive approach prepares your team to handle incidents confidently, maintain trust, and reduce potential harm before problems escalate.

Building an Incident Response Team for AI Systems

Building an effective incident response team for AI systems requires careful selection of members with diverse expertise. You need specialists who understand AI ethics, bias mitigation, and technical troubleshooting. Consider including:


- Data scientists skilled in identifying bias and ethical issues, who can analyze data integrity and fairness to prevent future incidents. Grounding their work in an explicit AI ethics framework helps integrate ethical considerations systematically into response protocols.

- AI engineers to diagnose system failures quickly.

- Legal and compliance experts to navigate ethical and regulatory concerns.

- Communication specialists to coordinate internal and external messaging.

This diverse team lets you address incidents holistically, from technical flaws to ethical implications. Prioritizing AI ethics and bias mitigation helps prevent future issues and builds trust. A well-rounded team responds faster and more effectively, reducing the impact of AI incidents and safeguarding your reputation.

Essential Components of an AI Incident Runbook

What makes an incident runbook effective for AI systems? First, it should clearly outline procedures for identifying and responding to issues, including bias detection and mitigation strategies. Include guidelines grounded in AI ethics so that decisions align with fairness, transparency, and accountability. A well-structured runbook also details escalation paths and communication protocols that keep stakeholders informed. Add specific checklists for common incidents, such as data leaks or model failures, and emphasize documentation for transparency and learning. Tailor procedures to each model type and deployment, and map system dependencies so responders understand how a failure in one component affects others. Update the runbook regularly so it stays relevant as your AI systems evolve. Ultimately, an effective runbook streamlines response efforts, minimizes risk, and promotes responsible AI use by embedding bias mitigation and ethical considerations into your incident management process.
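One way to keep a runbook actionable is to store each incident type as structured data rather than free text, so checklists and escalation paths can be versioned, rendered, and checked automatically. The sketch below is a minimal Python illustration under that assumption; the `RunbookEntry` and `EscalationStep` names, the example contacts, and the escalation timings are hypothetical placeholders, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class EscalationStep:
    """One rung of an escalation path: who owns the incident and when."""
    role: str
    contact: str
    escalate_after_minutes: int


@dataclass
class RunbookEntry:
    """A single incident type with its checklist and escalation path.

    Assumes `escalation` is non-empty and sorted by escalate_after_minutes."""
    incident_type: str
    severity: str
    checklist: list[str]
    escalation: list[EscalationStep] = field(default_factory=list)

    def current_owner(self, minutes_elapsed: int) -> EscalationStep:
        """Return the step that should own an unresolved incident after
        `minutes_elapsed` minutes."""
        owner = self.escalation[0]
        for step in self.escalation:
            if minutes_elapsed >= step.escalate_after_minutes:
                owner = step
        return owner


# Hypothetical example entry; contacts and timings are placeholders.
MODEL_FAILURE = RunbookEntry(
    incident_type="model_failure",
    severity="high",
    checklist=[
        "Confirm the failure against monitoring dashboards",
        "Roll back to the last known-good model version",
        "Notify stakeholders with what is known and unknown",
        "Record every action with a timestamp",
    ],
    escalation=[
        EscalationStep("on-call ML engineer", "ml-oncall@example.com", 0),
        EscalationStep("incident commander", "ic@example.com", 30),
        EscalationStep("legal and compliance", "legal@example.com", 120),
    ],
)
```

For example, `MODEL_FAILURE.current_owner(45)` returns the incident commander step, because 45 minutes exceeds the 30-minute threshold. Keeping entries like this in version control makes the regular runbook updates easy to review.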

How to Detect Model Drift and Performance Issues

Detecting model drift and performance issues is crucial for maintaining the reliability of your AI systems. Effective model monitoring helps you spot changes that could degrade accuracy or lead to failures. To do this, focus on these key actions:


- Track key performance metrics, such as accuracy, error rate, and latency, over time to get a continuous view of model health and user interactions.

- Use drift detection tools to identify shifts in input data distribution; distribution analysis can highlight subtle changes that may affect model performance.

- Compare current model outputs against baseline results. Regular benchmarking ensures that your model remains aligned with expected behaviors.

- Set automated alerts for significant deviations. These notifications enable prompt investigation and response.

- Keep your monitoring practices current as models are retrained or replaced, and use real-time analytics to detect anomalies promptly.

Implementing these steps helps you catch issues before they affect users. Regular drift detection and performance analysis maintain model integrity, and a proactive stance lets you address problems swiftly and keep your AI system running smoothly. Continuous monitoring is your best defense against unseen model degradation.
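A common way to implement the drift detection step is the population stability index (PSI), which compares how a feature's values are distributed across bins in a baseline sample versus a recent sample. The sketch below is a dependency-free Python version; the equal-width binning, the small epsilon for empty bins, and the 0.2 alert threshold (a widely used rule of thumb, not a universal standard) are assumptions you should tune for your data.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI = sum((q - p) * ln(q / p)) over bins, where p and q are the
    baseline and current bin proportions. Bin edges come from the
    baseline's min and max; values outside that range are clamped."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        # Epsilon keeps empty bins from producing log(0).
        return [max(c / total, 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def check_drift(baseline, current, threshold=0.2):
    """Return (psi, alert); alert is True when PSI exceeds the threshold."""
    psi = population_stability_index(baseline, current)
    return psi, psi > threshold
```

In practice you might run `check_drift` per feature on a schedule and route `alert=True` results into the automated alerts described above.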

Identifying Data Leaks and Security Breaches in AI Systems

You need to stay alert for signs of data leaks and security breaches in your AI systems. Monitoring for data anomalies helps you spot unusual patterns that may indicate a leak, while watching for unauthorized access indicators reveals potential security threats. Acting quickly on these clues is essential to protect sensitive information and maintain trust. Automated detection tools help you identify and respond to threats in real time, keeping up with cybersecurity best practices prepares you for emerging vulnerabilities, and regularly reviewing your network infrastructure exposes potential entry points for attackers.

Data Anomaly Detection

Data anomaly detection plays a crucial role in safeguarding AI systems by identifying unusual patterns that may indicate data leaks or security breaches. When you monitor for anomalies, you can spot signs of malicious activity early. To enhance effectiveness, focus on:

- Establishing baseline data behaviors for accurate detection.

- Using advanced algorithms to flag deviations.

- Implementing false positive management to reduce unnecessary alerts.

- Regularly updating detection models with new threat insights, following established data security and threat detection practices.
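The first two items above, a baseline plus a rule for flagging deviations, can be as simple as a z-score check on an operational metric such as rows exported per hour. This is a minimal sketch assuming roughly stable, unimodal baseline data; `BaselineAnomalyDetector` is a hypothetical name, and production systems often use more robust statistics or learned models.

```python
import statistics


class BaselineAnomalyDetector:
    """Flags values more than `z_threshold` standard deviations away
    from the mean of a baseline sample (a simple z-score detector)."""

    def __init__(self, baseline, z_threshold=3.0):
        self.z_threshold = z_threshold
        self.update_baseline(baseline)

    def update_baseline(self, baseline):
        """Refit on fresh data, e.g. after a reviewed change in normal behavior."""
        self.mean = statistics.fmean(baseline)
        self.stdev = statistics.pstdev(baseline)

    def is_anomalous(self, value):
        if self.stdev == 0:
            # A constant baseline: any different value is a deviation.
            return value != self.mean
        return abs(value - self.mean) / self.stdev > self.z_threshold
```

Raising `z_threshold` is the simplest false-positive control: fewer alerts, at the cost of missing smaller deviations. Calling `update_baseline` with newly reviewed data corresponds to the last item in the list.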

Unauthorized Access Indicators

Unusual access patterns often signal potential security breaches or data leaks within AI systems. As you monitor your system, look for indicators such as repeated failed login attempts, access from unfamiliar IP addresses, or access outside normal hours. Unauthorized access may also show up as unusual data requests or sudden spikes in data retrieval. Catching these indicators early lets you identify potential breaches before they escalate. Establish clear thresholds and automated alerts for suspicious activity so you can respond swiftly, and regularly review access logs against expected user activity to spot anomalies. Recognizing these signs early enables you to contain threats, revoke compromised credentials, and prevent further data leaks. Ongoing monitoring, backed by security best practices, is what keeps your AI system's integrity intact.
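The indicators above translate directly into log rules. The sketch below assumes each access-log event is a dict with hypothetical `user`, `ip`, `time`, and `success` keys; the five-failure threshold, the 08:00 to 18:00 business-hours window, and the sample events are illustrative assumptions to adapt to your environment.

```python
from collections import Counter
from datetime import datetime


def access_alerts(events, known_ips, max_failed_logins=5, business_hours=(8, 18)):
    """Return human-readable alerts for suspicious access events.

    Each event is a dict with 'user', 'ip', 'time' (datetime) and
    'success' (bool); these key names are assumptions about your log schema."""
    alerts = []
    # Repeated failed logins per user.
    failures = Counter(e["user"] for e in events if not e["success"])
    for user, count in failures.items():
        if count >= max_failed_logins:
            alerts.append(f"{user}: {count} failed logins")
    # Successful logins from unfamiliar IPs or outside business hours.
    start, end = business_hours
    for e in events:
        if not e["success"]:
            continue
        if e["ip"] not in known_ips:
            alerts.append(f"{e['user']}: login from unfamiliar IP {e['ip']}")
        if not (start <= e["time"].hour < end):
            alerts.append(f"{e['user']}: login outside business hours at {e['time']:%H:%M}")
    return alerts


# Hypothetical sample: five failures for one user, one odd-hour login for another.
SAMPLE_EVENTS = [
    {"user": "alice", "ip": "10.0.0.5", "time": datetime(2024, 5, 1, 10, m), "success": False}
    for m in range(5)
] + [
    {"user": "bob", "ip": "203.0.113.9", "time": datetime(2024, 5, 1, 3, 12), "success": True},
]
```

Running `access_alerts(SAMPLE_EVENTS, known_ips={"10.0.0.5"})` flags alice's failed logins plus bob's unfamiliar IP and 03:12 login.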

Troubleshooting AI System Outages and Failures Quickly

When your AI system experiences an outage, spotting the symptoms early is vital to minimizing downtime. You then need to isolate the root cause quickly to prevent the issue from spreading or recurring. Once it is identified, restoring system function efficiently gets your AI app back to normal operation with minimal disruption.

Detecting Outage Symptoms

How can you quickly tell that an AI system is experiencing an outage? Start by monitoring model behavior: sudden drops in accuracy or prediction confidence, or a surge of unexpected outputs, suggest the model isn't functioning correctly. Pay attention to user feedback as well; a rise in complaints or reports of inaccurate results often points to an issue. Irregularities in input data streams or processing delays also signal potential problems. Watch performance metrics such as error rate and latency; sharp deviations from normal mean trouble may be brewing. Finally, check system logs for error messages or failures that could explain the outage. Staying alert to these signs lets you respond swiftly, minimize downtime, and keep your AI system reliable.
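Of the symptoms listed, a rising error rate is one of the easiest to watch automatically. This minimal sketch keeps a sliding window of recent request outcomes; the window size and the 5% default threshold are illustrative assumptions, and `ErrorRateMonitor` is a hypothetical name rather than a library class.

```python
from collections import deque


class ErrorRateMonitor:
    """Tracks success or failure of the last `window` requests and flags a
    possible outage when the error rate exceeds `max_error_rate`."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05):
        self.results = deque(maxlen=window)  # True = success, False = error
        self.max_error_rate = max_error_rate

    def record(self, ok: bool) -> None:
        self.results.append(ok)

    def error_rate(self) -> float:
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def outage_suspected(self) -> bool:
        # Wait for a full window so a few early errors do not page anyone.
        full = len(self.results) == self.results.maxlen
        return full and self.error_rate() > self.max_error_rate
```

Because the deque drops the oldest entries automatically, the rate reflects only recent traffic, so the alert clears on its own once requests start succeeding again.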

Isolating Root Causes

To troubleshoot an AI system outage effectively, you need to pinpoint the root cause quickly by systematically analyzing the available data. Begin by reviewing logs from model serving and from data preprocessing, focusing on recent errors or anomalies. Check whether data inputs were corrupted, incomplete, or inconsistent, because issues here often cascade into model failures. Examine your data pipeline for bottlenecks or processing errors that could have disrupted the model's performance. Look for patterns indicating whether the problem stems from data quality, model parameters, or infrastructure. Cross-referencing system alerts with preprocessing steps and model outputs narrows the search to the specific failure point, enabling faster resolution and less downtime.
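Because corrupted or incomplete inputs often cascade into model failures, a quick validation pass over the batch that preceded the outage can confirm or rule out the data pipeline as the failure point. This is a minimal sketch that assumes inputs arrive as dicts; the schema arguments are hypothetical and would come from your own data contract.

```python
import math


def validate_batch(rows, required_fields, ranges):
    """Return a list of problems found in a batch of input records.

    `rows` is a list of dicts, `required_fields` names keys that must be
    present and non-null, and `ranges` maps numeric fields to (min, max)."""
    problems = []
    for i, row in enumerate(rows):
        # Incomplete records: missing or null required fields.
        for f in required_fields:
            if row.get(f) is None:
                problems.append(f"row {i}: missing {f}")
        # Corrupted values: NaN or outside the expected range.
        for f, (lo, hi) in ranges.items():
            v = row.get(f)
            if v is None:
                continue
            if isinstance(v, float) and math.isnan(v):
                problems.append(f"row {i}: {f} is NaN")
            elif not (lo <= v <= hi):
                problems.append(f"row {i}: {f}={v} outside [{lo}, {hi}]")
    return problems
```

For instance, with a hypothetical `age` field bounded by `(0, 120)`, a row containing 250 is reported as out of range; an empty result points the investigation toward model parameters or infrastructure instead.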

Restoring System Function

Rapidly restoring system function after an AI outage requires a structured approach that implements fixes swiftly and prevents extended downtime. To restore the system effectively, follow these steps:

- Assess the impact to identify affected components and prioritize recovery efforts.

- Implement quick fixes like restarting services or rolling back recent updates.

- Perform data recovery by restoring corrupted or lost data from backups to maintain system integrity.

- Verify system stability through testing to confirm full functionality and prevent recurrence.
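The rollback step above depends on knowing which earlier model version was known to be good. The sketch below models that with a hypothetical in-memory `ModelRegistry`; a real deployment would use whatever model registry or deployment tool you already run, and `smoke_test` stands in for the verification step from the last bullet.

```python
class ModelRegistry:
    """Hypothetical in-memory registry that records deployments and which
    versions passed validation, so the service can roll back on failure."""

    def __init__(self):
        self.history = []        # versions in deployment order
        self.known_good = set()  # versions that passed post-deploy checks

    def deploy(self, version, passed_checks):
        self.history.append(version)
        if passed_checks:
            self.known_good.add(version)

    def active(self):
        return self.history[-1] if self.history else None

    def rollback(self, smoke_test):
        """Redeploy the newest known-good version (other than the active one)
        that still passes `smoke_test`; return it, or None if none qualifies."""
        current = self.active()
        for version in reversed(self.history):
            if version == current or version not in self.known_good:
                continue
            if smoke_test(version):
                self.history.append(version)  # the rollback is itself a deployment
                return version
        return None
```

Returning `None` rather than raising makes it explicit that no safe target exists, which is the point to escalate rather than keep retrying.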

How to Contain and Mitigate AI Incidents to Minimize Damage

When an AI incident occurs, swift containment and mitigation are crucial to limit its impact. Prioritize isolating affected systems to prevent further damage. Communicate transparently with stakeholders, emphasizing your commitment to AI ethics and algorithm transparency. Use the following table to identify key actions:

| Action | Purpose |
|---|---|
| Disable or isolate models | Prevent spread of errors or bias |
| Notify stakeholders | Maintain trust and transparency |
| Review logs and data | Detect root causes quickly |
| Implement temporary fixes | Reduce immediate harm |
| Document actions | Ensure accountability and learning |

Focusing on transparency and ethical considerations guides your containment, minimizing damage while maintaining trust.
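Disabling or isolating a model is much faster if the serving path already has a switch for it. This minimal sketch wraps a model callable with a hypothetical kill switch that routes traffic to a fallback and records who changed it and why, which also covers the documentation row of the table. The `ContainedModel` name and the fallback behavior are assumptions for illustration.

```python
class ContainedModel:
    """Wraps a model callable so responders can isolate it instantly.

    While disabled, requests go to a conservative fallback (for example a
    rules engine or a "try again later" response) instead of the model."""

    def __init__(self, model, fallback):
        self.model = model
        self.fallback = fallback
        self.enabled = True
        self.audit_log = []  # (action, actor, reason) tuples for the record

    def disable(self, reason, actor):
        self.enabled = False
        self.audit_log.append(("disable", actor, reason))

    def enable(self, reason, actor):
        self.enabled = True
        self.audit_log.append(("enable", actor, reason))

    def predict(self, x):
        return self.model(x) if self.enabled else self.fallback(x)
```

In a multi-instance service, the `enabled` flag would typically live in shared configuration rather than in process memory, so one action isolates the model everywhere.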

Conducting Root Cause Analysis After an AI Incident

Conducting a root cause analysis after an AI incident is essential for understanding what went wrong and preventing future issues. It helps uncover underlying problems and guides corrective actions. To conduct an effective analysis, consider these steps:


- Gather data from all relevant teams, promoting cross-team collaboration.

- Examine the incident’s context, including model inputs, training data, and deployment environment.

- Identify any ethical considerations that may have influenced the outcome.

- Document findings clearly, emphasizing systemic issues over individual errors.

Prioritize transparency and ethical considerations throughout the process. Engaging diverse perspectives ensures a thorough understanding and fosters accountability. This detailed review not only helps fix immediate problems but also strengthens your organization’s AI governance and resilience against future incidents.
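When gathering data from several teams, a single merged timeline makes it much easier to see which event preceded which. The sketch below assumes each team exports its own events as time-sorted `(timestamp, description)` tuples; the team names and events in `SAMPLE_SOURCES` are hypothetical.

```python
import heapq
from datetime import datetime


def build_timeline(sources):
    """Merge per-team event lists into one chronological timeline.

    `sources` maps a team name to a list of (timestamp, description)
    tuples, each list already sorted by time. Returns
    (timestamp, team, description) tuples in time order."""
    streams = (
        [(ts, team, desc) for ts, desc in events]
        for team, events in sources.items()
    )
    # heapq.merge lazily merges already-sorted inputs.
    return list(heapq.merge(*streams))


# Hypothetical events from two teams.
SAMPLE_SOURCES = {
    "ml": [(datetime(2024, 5, 1, 9, 5), "accuracy alert fired")],
    "data": [
        (datetime(2024, 5, 1, 8, 50), "upstream schema change deployed"),
        (datetime(2024, 5, 1, 9, 20), "backfill paused"),
    ],
}
```

Here the merged timeline shows the schema change landing before the accuracy alert, the kind of ordering that points an analysis toward systemic causes rather than individual errors.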

Communicating Transparently During an AI Incident

Open and honest communication is essential throughout an AI incident, not only once the root cause analysis is complete. Your goal is to communicate transparently in a way that reassures stakeholders and keeps them informed. Regular stakeholder updates show that you're actively managing the situation and help prevent misinformation. Be clear about what you know, what you're doing to address the issue, and what you don't yet know. Avoid speculating or downplaying the problem, since honesty builds trust. Use simple, accessible language, and tailor your messages to different audiences. Timely, truthful communication manages expectations, supports collaborative problem-solving, and demonstrates your commitment to resolving the incident responsibly.
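The structure of what you know, what you're doing, and what you don't yet know can be built into a template so every update covers all three. This is an illustrative sketch; the `StatusUpdate` name, the section titles, and the 60-minute default cadence are assumptions, not a standard.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class StatusUpdate:
    """One stakeholder update split into known facts, actions, and open questions."""
    time: datetime
    known: list[str]
    actions: list[str]
    unknown: list[str] = field(default_factory=list)
    next_update_minutes: int = 60

    def render(self) -> str:
        def section(title, items):
            # An explicit "(none)" shows the section was considered, not skipped.
            body = "\n".join(f"- {item}" for item in items) or "- (none)"
            return f"{title}:\n{body}"

        return "\n\n".join([
            f"Status update ({self.time:%Y-%m-%d %H:%M})",
            section("What we know", self.known),
            section("What we are doing", self.actions),
            section("What we do not know yet", self.unknown),
            f"Next update in {self.next_update_minutes} minutes.",
        ])
```

Committing to a next-update time in every message is a simple way to keep updates regular and set expectations.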

Restoring AI System Functionality and Building Trust

Restoring AI system functionality quickly and effectively is essential to minimizing disruption and maintaining stakeholder confidence. To do this, focus on key steps:

- Prioritize transparent communication about what went wrong and your remediation plan, reinforcing model transparency.

- Conduct a thorough analysis of the incident, ensuring your actions align with AI ethics principles.

- Implement corrective measures, such as model retraining or adjustments, to prevent recurrence.

- Reassure stakeholders by sharing progress updates, demonstrating your commitment to ethical AI and system reliability.

Building trust requires demonstrating your dedication to responsible AI practices, emphasizing model transparency, and addressing any ethical concerns. When stakeholders see your proactive approach, confidence in your AI system’s integrity and reliability will grow.

Post-Incident Reviews: Lessons and Prevention Strategies

Once you’ve addressed the immediate issues and communicated transparently with stakeholders, it’s time to analyze the incident thoroughly. Conduct incident postmortems to identify root causes, gaps, and decision points. Use this analysis to develop robust prevention strategies, reducing the chance of recurrence. Document lessons learned and share them across your team to foster continuous improvement. To help visualize key insights, consider this example:

| Incident Cause | Root Cause | Prevention Strategy |
|---|---|---|
| Data bias | Insufficient testing | Regular audits |
| Model drift | Lack of monitoring | Continuous validation |
| Security flaw | Inadequate safeguards | Strengthen security measures |
| Communication gap | Poor stakeholder updates | Improve communication protocols |
| Algorithm error | Coding oversight | Code reviews and testing |

Regular post-incident reviews ensure your AI system becomes more resilient over time.

Automating Detection and Response for AI Incidents

Automating detection and response for AI incidents is essential to minimizing downtime and mitigating risks, because manual intervention alone is often too slow. To automate effectively, you need systems that monitor key indicators such as interpretability metrics and bias signals. Consider these steps:

- Implement real-time monitoring tools that flag anomalies or unexpected model behaviors.

- Use automated alerts triggered by deviations in interpretability metrics.

- Deploy response scripts that automatically adjust models or halt processes when bias or errors are detected.

- Integrate machine learning-based detection to identify subtle bias patterns or interpretability issues early.

These measures enable swift intervention, reducing incident impact and maintaining trust in your AI system. Automation ensures consistent, prompt responses, keeping your AI resilient and aligned with fairness standards.
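As an example of a response script that halts a process when bias is detected, the sketch below computes the demographic parity difference (the largest gap in positive-prediction rate between groups) over a batch of binary predictions and calls a halt hook if it exceeds a threshold. The 0.1 threshold and the `halt` callback are assumptions; parity difference is only one of several fairness metrics, and the right one depends on your application.

```python
def positive_rate(predictions, groups, group):
    """Share of positive (1) predictions within one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected) if selected else 0.0


def parity_gap(predictions, groups):
    """Demographic parity difference: max minus min positive rate across groups."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


def automated_response(predictions, groups, halt, max_gap=0.1):
    """Call `halt` (e.g. a model kill switch) when the parity gap exceeds
    `max_gap`; return the gap and whether the halt fired."""
    gap = parity_gap(predictions, groups)
    if gap > max_gap:
        halt(f"parity gap {gap:.2f} exceeds {max_gap}")
        return gap, True
    return gap, False
```

Passing the halt action in as a callback keeps the check testable and lets the same script trigger a kill switch, a paging alert, or both.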

Managing Data Privacy and Compliance During Incidents

Effective incident response for AI systems requires not only rapid detection and mitigation but also strict adherence to data privacy and compliance standards. During an incident, prioritize data anonymization to protect sensitive information, reducing the risk of exposure. Ensure that any data accessed or shared complies with privacy regulations like GDPR or CCPA. You should also verify that logs and forensic data are properly anonymized before analysis. Maintain clear documentation of your actions to demonstrate privacy compliance and facilitate audits. Communicate transparently with stakeholders about the steps taken to protect user data. By integrating data anonymization techniques and adhering to privacy standards, you minimize legal risks and uphold your organization’s commitment to responsible data handling during incident response.
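One practical way to protect identities in logs and forensic data before analysis is to replace user IDs with a keyed hash (HMAC) and redact obvious identifiers such as email addresses. This sketch uses only the Python standard library; the key handling, the 16-character truncation, and the email pattern are simplifying assumptions. Keyed hashing is pseudonymization rather than anonymization: under GDPR, pseudonymized data is still personal data, so access controls and retention rules continue to apply.

```python
import hashlib
import hmac
import re

# Simplified email pattern; real logs may need broader PII detection.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize_id(user_id: str, key: bytes) -> str:
    """Replace a user ID with a keyed hash so records stay linkable
    during analysis without exposing the raw ID."""
    return hmac.new(key, user_id.encode(), hashlib.sha256).hexdigest()[:16]


def scrub_log_line(line: str, user_id: str, key: bytes) -> str:
    """Swap the raw user ID for its pseudonym and redact email addresses."""
    line = line.replace(user_id, pseudonymize_id(user_id, key))
    return EMAIL_RE.sub("[REDACTED_EMAIL]", line)
```

Because the same key always yields the same pseudonym, analysts can still correlate one user's events across logs; keep the key outside the analysis environment.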

Best Practices for Testing Your AI Incident Response Plan

Testing your AI incident response plan regularly is essential to make sure your team can respond swiftly and effectively when an incident occurs. To keep your plan robust, focus on these best practices:

- Conduct simulation exercises that mimic real incident scenarios, revealing gaps and improving response times.

- Prioritize clear team communication protocols to ensure everyone understands their roles during an incident.

- Review and update your plan after each test to address newly identified vulnerabilities.

- Involve cross-functional teams to foster collaboration and diverse perspectives during testing.
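Simulation exercises do not have to be elaborate. The sketch below replays a synthetic scenario, accuracy that degrades by one point per minute after an onset, through a fixed-threshold alert and reports how long detection took. All numbers are illustrative assumptions; the useful output of a drill like this is the detection lag, which shows whether your thresholds would catch a slow degradation in time.

```python
def gradual_drift_scenario(healthy=92, onset=10, decline_per_minute=1, length=60):
    """Synthetic accuracy stream in percent, one value per minute:
    flat until `onset`, then declining by `decline_per_minute` each minute."""
    return [healthy - max(0, m - onset) * decline_per_minute for m in range(length)]


def run_drill(stream, threshold, onset):
    """Replay the stream through a fixed-threshold alert and return the
    minutes between onset and the first alert, or None if it never fired."""
    for minute, value in enumerate(stream):
        if value < threshold:
            return minute - onset
    return None
```

With the defaults, a threshold of 85% fires 8 minutes after onset; replaying the same scenario through your real alerting rules gives a concrete number to review and improve after each test.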

Tools and Resources for AI Incident Management

Having a solid incident response plan is only part of the equation; the right tools and resources help your team act swiftly when an AI incident occurs. Use monitoring platforms that track AI performance and detect anomalies early, which also supports AI ethics by catching biased or harmful outputs. Add user feedback mechanisms to gather real-time insights that surface issues monitoring might miss. Incident management tools such as automated alerting and centralized logs streamline your response, supporting swift containment and resolution. Comprehensive dashboards and accessible documentation keep everyone aligned and informed. Together, these resources let your team address incidents efficiently, uphold ethical standards, and maintain user trust during critical moments.

Keeping Your AI Incident Response Plan Up to Date

You need to review and update your incident response plan regularly to address evolving AI threats. Incorporate insights from recent incidents and emerging vulnerabilities to keep it effective, and keep your team trained on the latest procedures so everyone responds swiftly and correctly.

Regular Plan Reviews

How often should your AI incident response plan be reviewed? Regular reviews are essential to keep your incident plan effective. You should establish a consistent review schedule, such as quarterly or biannual checks. During each review, consider these key steps:

- Evaluate recent incident handling effectiveness.

- Update contact lists and escalation procedures.

- Incorporate lessons learned from past incidents.

- Adjust for new AI models or integrations.

A well-maintained review schedule ensures your incident plan stays relevant amid evolving threats and technology. Skipping reviews can leave gaps in your response, increasing risks during an incident. By proactively refining your plan, you’re better prepared when an incident occurs, minimizing damage and recovery time. Keep your review schedule strict and thorough to stay ahead of potential vulnerabilities.
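The review schedule can be enforced with a simple check that combines a time interval with an event-based trigger, matching the advice to revisit the plan after significant model updates or incidents. The 91-day default (roughly one quarter) and the `review_due` name are assumptions for illustration.

```python
from datetime import date, timedelta


def review_due(last_review: date, today: date, interval_days: int = 91,
               significant_change_since_review: bool = False) -> bool:
    """Return True if the plan needs review: either the interval has
    elapsed, or a significant model update or incident has occurred
    since the last review."""
    if significant_change_since_review:
        return True
    return today - last_review >= timedelta(days=interval_days)
```

A check like this can run in a scheduled job that opens a ticket for the plan owner, so reviews do not depend on anyone remembering the date.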

Incorporate New Threats

As AI technology evolves, new threats emerge that can compromise your systems and data. To stay ahead, regularly update your incident response plan to address these risks. Focus on AI ethics and model transparency, ensuring your team understands potential biases and vulnerabilities. Incorporate the latest research on malicious AI attacks and data poisoning techniques. Evaluate whether your current measures can detect and respond to these emerging threats effectively. Remember, transparency about your models helps identify weaknesses early, reducing response time. Keeping your plan current means staying informed about evolving AI threats and integrating best practices for ethical AI deployment. This proactive approach minimizes damage and reinforces trust with users and stakeholders. Regular updates are essential to adapt quickly and maintain a robust incident response.

Team Training Updates

Staying ahead of emerging AI threats requires more than updating policies; your team also needs regular training on the latest risks and response techniques. Regular training updates strengthen team coordination and improve incident documentation, making your response more effective. To stay current, consider:

- Conducting quarterly training sessions focused on new AI vulnerabilities.

- Reviewing recent incident documentation to identify gaps in response.

- Updating training materials based on lessons learned from past incidents.

- Running simulated AI incident drills to reinforce response protocols.

These steps help keep everyone aligned, improve decision-making during crises, and ensure your team is prepared for evolving threats. Consistent training fosters a proactive incident response culture, reducing response time and minimizing damage.

Frequently Asked Questions

How Often Should an AI Incident Response Plan Be Reviewed?

You should review your AI incident response plan at least quarterly to stay current. Regular reviews help you incorporate recent AI model updates and keep incident documentation accurate. This helps you adapt to new threats, improve response strategies, and keep your team prepared. In addition, after any significant AI model update or security incident, revisit and adjust the plan right away to address new vulnerabilities or procedural gaps.

What Are Common Signs Indicating an Impending AI Incident?

Like a canary in a coal mine, unusual AI behavior can warn you of trouble. Common signs include sudden drops in model accuracy, unexpected outputs, or anomalies flagged by AI anomaly detection and model drift monitoring. These indicators suggest your AI system may be heading toward an incident, so investigate early to prevent potential damage. Stay vigilant and act swiftly to keep your AI running smoothly.

How Can Small Teams Effectively Manage AI Incident Response?

You can manage AI incident response effectively by establishing clear protocols focused on AI ethics and data privacy. Assign roles within your small team, prioritize swift communication, and monitor AI outputs regularly. Implement automated alerts for potential issues, and make sure everyone knows how to escalate concerns quickly. Training your team on AI ethics and data privacy helps prevent incidents and reduces response time, keeping your AI systems safe and trustworthy.

What Training Is Recommended for AI Incident Response Team Members?

Are you prepared to handle AI bias or data poisoning? You should train team members on recognizing AI vulnerabilities, including bias detection and mitigation, and identifying signs of data poisoning. Focus on technical skills like model monitoring, debugging, and secure data handling. Also, emphasize understanding AI ethics and legal considerations. Regular simulations and updates keep your team sharp, ensuring they respond confidently when incidents arise.

How Do Legal Considerations Impact AI Incident Containment Strategies?

Legal considerations heavily influence your AI incident containment strategies by emphasizing legal compliance and liability management. You must act swiftly to contain issues while adhering to regulations like data privacy laws, ensuring you don’t breach legal boundaries. Balancing rapid incident response with legal obligations helps protect your organization from liability, fines, or reputation damage, making it essential to integrate legal counsel into your containment planning and decision-making processes.

Conclusion

Now that you know how to prepare an AI incident response runbook, are you ready to act swiftly and confidently when an incident occurs? Implementing these strategies helps ensure you're equipped to handle disruptions effectively, safeguarding your AI systems and data. Don't wait until a crisis hits; start building your plan today. Proactive preparation can make the difference between chaos and control in AI incident management. Are you prepared to take that vital step?