Ozone 9 All-In-One Mastering App

Ozone 9 Advanced and Standard have been released. I have been using Ozone 9 Advanced in combination with other plugins for mastering and mixing for years. If you can, take advantage of the available upgrade prices:

- Ozone 9 Advanced: Upgrade from Ozone 9 Standard (Affiliate Link)

- Ozone 9 Advanced: Upgrade from Ozone 5-8 Standard (Affiliate Link)

- Ozone 9 Advanced: Upgrade from Ozone 5-8 Advanced (Affiliate Link)

- Ozone 9 Advanced: Upgrade from Ozone Elements 7-9 (Affiliate Link)

- Ozone 9 Advanced: Upgrade from paid versions of Ozone Elements 7-9

Building on a 17-year legacy in audio mastering, Ozone 9 brings balance to your music with never-before-seen processing for the low end, real-time instrument separation, and lightning-fast workflows powered by machine learning. Find the perfect vibe with an expanded Master Assistant that knows exactly what you’re going for, whether it’s a warm analog character or transparent loudness for streaming. Talk to more iZotope plug-ins in your session with Tonal Balance Control and blur the lines between mixing and mastering. Work faster with improved plug-in performance, smoother metering, and resizable windows. No more wondering if your music is ready for primetime—with Ozone 9, the future of mastering is in your hands.

Ozone to Prepare your Tracks for Streaming

Get your music ready for prime time in today’s world of streaming audio. Set intelligent loudness targets to prevent your music from being turned down by a streaming platform with Master Assistant and Maximizer. Use CODEC Preview mode in Ozone 9 Advanced to hear your music translated to MP3 or AAC. Upload a reference track to Tonal Balance Control, Master Assistant, or EQ Match, and ensure your music stacks up against the competition. Create with confidence knowing your music will sound great in any format!

Availability and Pricing

Special pricing on Ozone 9 is available in many shops, including here. I got myself Ozone 9 Advanced (Affiliate Link). Ozone is available in three different editions designed to meet any budget or mixing need (Compare those versions here). I recommend the Advanced edition if you have to prepare your own tracks for streaming, be it music or YouTube content.

Ozone 9 Upgrades & Crossgrades:

- Ozone 9 Advanced: Upgrade from Ozone 9 Standard (Affiliate Link)

- Ozone 9 Advanced: Upgrade from Ozone 5-8 Standard (Affiliate Link)

- Ozone 9 Advanced: Upgrade from Ozone 5-8 Advanced (Affiliate Link)

- Ozone 9 Advanced: Upgrade from Ozone Elements 7-9 (Affiliate Link)

- Ozone 9 Advanced: Upgrade from paid versions of Ozone Elements 7-9 (Affiliate Link)

- Ozone 9 Advanced: Crossgrade from ANY paid iZotope product including Exponential Audio products (Affiliate Link – Limited Time Crossgrade – Ends Oct 31)

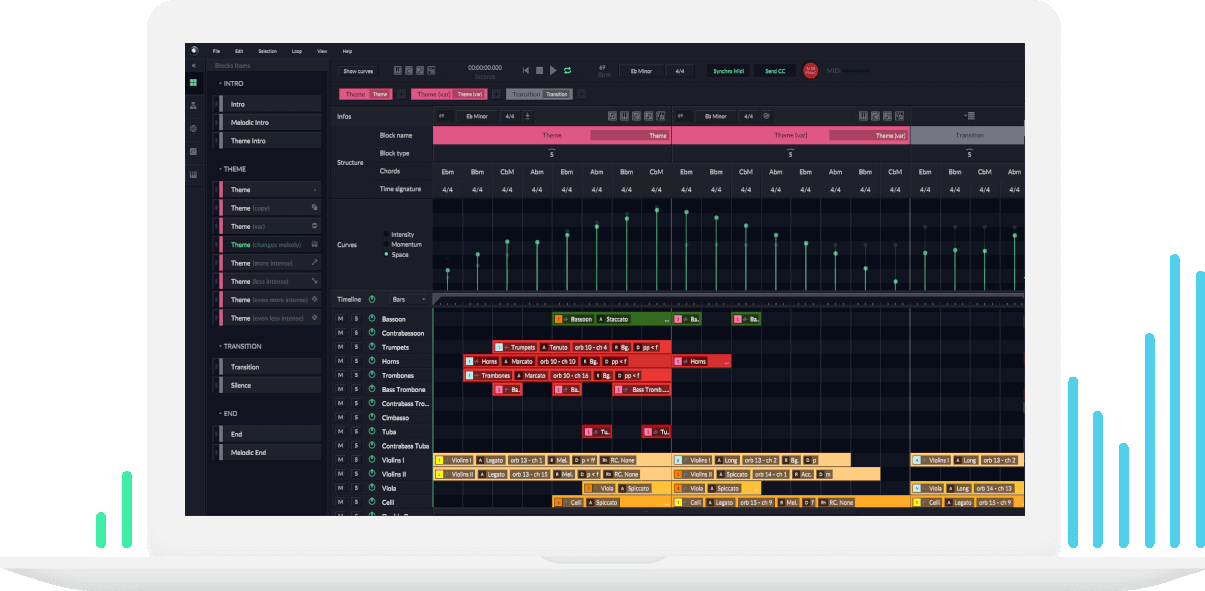

Master Rebalance

Master Rebalance gives you the final say in a mix, letting you correct and change the levels of instruments without the need for the original tracks. Give your vocals a boost to add presence without affecting the midrange, eliminate muddy low end by slightly lowering the bass guitar, or correct weak-sounding drums with one small boost that can save you from hours of tedious EQ surgery. You can even automate the desired source gain in your DAW of choice, which means amplifying vocals only during your hook or making sure the bass really drops when it’s time to drop.

Low-End Focus

Low-End Focus is your first line of defense against a muddy or blurry low end in a mix. This industry-first bass-shaping tool is designed to enhance bass sounds and bring clarity to your low end without incurring artifacts.

With one move on the Punchy setting, iZotope’s Ozone can immediately boost the transient power of a drum kit. Or, with the Smooth setting engaged, you can easily bring a hidden bass guitar into view, adding warmth and definition to the low end.

Expanded Master Assistant

Whether you want a warm analog character or transparent loudness for streaming, the improved Master Assistant uses machine learning to generate a custom preset in seconds. Use the new Vintage mode to quickly add the right combination of color and character, with automatic adjustments for Vintage Compressor, EQ, and more.

Master Assistant also has an updated interface, with new adjustable intensity settings for streaming and CD. Or, have Master Assistant automatically fit your track to your favorite reference track with Reference Mode.

Upgraded Match EQ

Ozone 9 brings the tone and vibe of your favorite artists to your music with the upgraded Match EQ module. No longer confined to Ozone’s Equalizer, you can now use Match EQ anywhere in your session. Match to any reference track with incredible accuracy, with an EQ that applies over 8,000 separate bands to get the most precise snapshot possible. New region parameters give you more control over the end result by letting you choose what parts of the audio to match. Capture a reference from a track in your session, or a reference file loaded into Ozone 9, and save your favorite results as presets for easy access.

Improved Ozone Imager

Turn narrow, dull audio into a full, impactful panoramic track with the improved Imager in Ozone 9. With the new “Stereoize II” mode, you’re able to add a more natural, transparent width to shape your soundstage. Sculpt your image and get control of super-wide bass, harsh cymbals, and more. For surgically sculpting your image, or if you just want to blow it up, Imager is a go-to for any mastering session.

NKS Support

NKS support puts the power of Ozone into your creative process, letting you master while making music with Maschine or Komplete Kontrol. Open Ozone on the fly and easily add professional polish while making music on your hardware, using hundreds of different presets and accessible parameters mapped to your hardware controls. Add loudness, width, and EQ without touching your DAW and keep the creative juices flowing.

Improved Performance and UI

Expect lower CPU usage and shorter startup times with Ozone 9 compared to Ozone 8. With fluid metering in a fully resizable environment, you can track the most subtle details of your audio. Use more plug-ins at once, mix while you master without worrying about slowdowns or dropouts, and immerse yourself in a smooth, modern experience designed to keep you in your creative flow.


As an affiliate, we earn on qualifying purchases.
