KV cache offloading moves caching work out of software and onto specialized hardware such as FPGAs, ASICs, or high-performance network interfaces. Accelerating lookups, updates, and invalidations in hardware can lower latency and improve responsiveness in high-demand systems. The trade-offs are higher cost, added system complexity, and potential compatibility issues. This article covers how to weigh those performance benefits against the operational overhead.

Key Takeaways

- Techniques include hardware acceleration using FPGAs, ASICs, or high-performance network interfaces to speed up cache operations.

- Offloading improves latency and throughput but introduces complexity in maintaining cache consistency and coherence.

- Trade-offs involve higher costs, system complexity, and potential compatibility issues with existing infrastructure.

- Hardware support enables custom caching protocols and reduces CPU overhead, enhancing responsiveness in demanding workloads.

- An effective KV cache offloading deployment weighs the performance gains against cost, complexity, and reliability.

KV cache offloading improves system performance by moving caching responsibilities from primary storage to dedicated offload engines or external systems. Delegating cache management frees resources on the main storage system, which can reduce latency and improve throughput, especially in high-demand environments. The cost is added complexity, chiefly around consistency. Because the cache is managed externally, the offloaded copy must stay synchronized with primary storage; any lag or mismatch can produce stale reads or data integrity problems that compromise application correctness. Synchronization protocols mitigate this, but they add overhead of their own. The cache's refresh strategy, meaning how and when entries are updated or invalidated, therefore matters for both consistency and reliability.
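To make the stale-read risk concrete, here is a minimal sketch of one common mitigation: tagging every value with a version and invalidating the offloaded copy on each write. The class and function names (`PrimaryStore`, `OffloadedCache`, `write_through`, `read`) are hypothetical stand-ins chosen for illustration, not the API of any particular product, and both components are modeled as in-process dictionaries rather than real hardware.

```python
from typing import Dict, Optional, Tuple

Entry = Tuple[int, str]  # (version, value)


class PrimaryStore:
    """Stand-in for the authoritative store; bumps a version on every write."""

    def __init__(self) -> None:
        self._data: Dict[str, Entry] = {}

    def write(self, key: str, value: str) -> int:
        version = self._data.get(key, (0, ""))[0] + 1
        self._data[key] = (version, value)
        return version

    def read(self, key: str) -> Optional[Entry]:
        return self._data.get(key)


class OffloadedCache:
    """Stand-in for the external cache; holds versioned copies of entries."""

    def __init__(self) -> None:
        self._entries: Dict[str, Entry] = {}

    def put(self, key: str, version: int, value: str) -> None:
        # Ignore updates older than what is already held, so a delayed
        # message cannot roll the cache back to a stale value.
        current = self._entries.get(key)
        if current is None or version > current[0]:
            self._entries[key] = (version, value)

    def invalidate(self, key: str, version: int) -> None:
        # Drop the entry only if it predates the write that triggered this.
        current = self._entries.get(key)
        if current is not None and current[0] < version:
            del self._entries[key]

    def get(self, key: str) -> Optional[Entry]:
        return self._entries.get(key)


def write_through(store: PrimaryStore, cache: OffloadedCache, key: str, value: str) -> None:
    """Write to the primary store, then invalidate the offloaded copy."""
    version = store.write(key, value)
    cache.invalidate(key, version)


def read(store: PrimaryStore, cache: OffloadedCache, key: str) -> Optional[str]:
    """Serve from the cache on a hit; otherwise refill it from the store."""
    hit = cache.get(key)
    if hit is not None:
        return hit[1]
    fresh = store.read(key)
    if fresh is None:
        return None
    cache.put(key, fresh[0], fresh[1])
    return fresh[1]
```

In this sketch the version comparison is what prevents a late or reordered update from reintroducing old data. A real deployment would also need to handle lost invalidation messages, for example with expiry times, which is omitted here.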

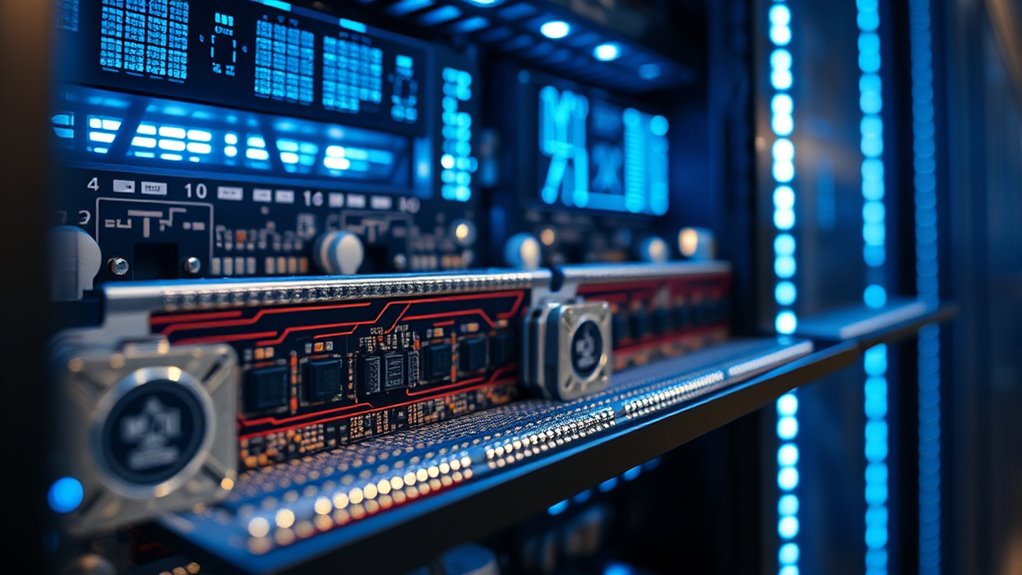

Hardware acceleration is what makes KV cache offloading practical. Specialized hardware, such as FPGAs, ASICs, or high-performance network interfaces, can handle cache management tasks like lookups, updates, and invalidations. Doing this work in hardware reduces its latency, bringing offloaded caches closer in responsiveness to in-memory caches. That matters most for high-throughput workloads, where software-only solutions may struggle to keep pace. Hardware support also lets you implement custom caching algorithms or consistency protocols that are tightly integrated with the device. It can additionally take over computationally intensive tasks, such as encryption or data integrity checks, freeing CPU cycles for other work.

These advantages come with trade-offs. Dedicated hardware can be expensive and may require specialized expertise to deploy and maintain. Compatibility is another concern: not all systems support the same hardware interfaces, which can limit your options or increase integration effort. Hardware-based solutions are also harder to troubleshoot and update, especially when synchronization issues or hardware failures are involved. Implemented thoughtfully, hardware acceleration combined with offloading can still deliver a scalable, low-latency caching layer for demanding workloads. An effective KV cache offloading strategy weighs that performance gain against the cost and complexity of maintaining the hardware.

Frequently Asked Questions

How Does KV Cache Offloading Impact Latency?

KV cache offloading generally reduces latency when it raises the cache hit rate, because more requests are served from the cache instead of slower main memory or storage. The benefit is most visible under high load, where each avoided miss removes a slow backing-store access from the request path. The gain depends on the offloaded cache itself being fast to reach: if accessing it costs nearly as much as a miss, the improvement shrinks.
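The effect of hit rate on latency can be estimated with a simple weighted average. The sketch below is a back-of-the-envelope model, not a benchmark: the function name `expected_latency_us` and the latency figures are assumptions chosen only for illustration.

```python
def expected_latency_us(hit_rate: float, hit_latency_us: float, miss_latency_us: float) -> float:
    """Average latency per request for a cache with the given hit rate.

    miss_latency_us is the full cost of a miss (the failed lookup plus the
    fetch from the backing store), not just the backing-store access.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * hit_latency_us + (1.0 - hit_rate) * miss_latency_us


# Illustrative numbers only, not measurements: a 10 us hit and a 1000 us miss.
for rate in (0.80, 0.90, 0.99):
    average = expected_latency_us(rate, 10.0, 1000.0)
    print(f"hit rate {rate:.2f}: {average:.1f} us average")
```

With these assumed figures, raising the hit rate from 0.90 to 0.99 cuts the average from roughly 109 µs to roughly 20 µs, which shows why the miss path dominates once misses are much slower than hits.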

What Are the Common Hardware Platforms Supporting Offloading?

Common platforms include GPUs, FPGAs, and SmartNICs. These accelerators can take over cache management tasks, including coherence handling, and speed up data movement. Offloading to them can reduce latency and CPU load, which helps the system keep up with high-demand workloads.

Can Offloading Improve Cache Consistency?

It can, but not automatically. Offloading adds another copy of the data that must be kept synchronized, so consistency becomes harder by default. Hardware support for invalidation protocols offsets this by making invalidations and consistency checks faster, which narrows the window in which stale data can be read. This matters most in highly concurrent systems, where cache coherence is critical to overall stability.

What Security Concerns Arise With Offloaded Caches?

When offloading caches, you should consider security concerns like data privacy and access control. Offloaded caches may expose sensitive data to unauthorized access if proper encryption isn’t in place or access controls are weak. You need robust security measures to protect data confidentiality, prevent data breaches, and ensure only authorized users access cached information. Without these safeguards, offloaded caches can become vulnerable points in your system’s security.
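One safeguard that complements encryption and access control is authenticating each cached entry, so that tampering inside the offloaded cache is detected on read. The sketch below uses Python's standard `hmac` and `hashlib` modules; the helper names `seal` and `unseal` and the blob layout are hypothetical. Note that this provides integrity only: the value is still stored in plaintext, and confidentiality would require an encryption library, which is not shown.

```python
import hashlib
import hmac
from typing import Optional

TAG_LEN = hashlib.sha256().digest_size  # 32 bytes for SHA-256


def _tag(secret: bytes, key: str, value: bytes) -> bytes:
    # Length-prefix the key so (key, value) pairs cannot be confused, and
    # bind the tag to the key so an entry cannot be replayed under another key.
    key_bytes = key.encode("utf-8")
    message = len(key_bytes).to_bytes(4, "big") + key_bytes + value
    return hmac.new(secret, message, hashlib.sha256).digest()


def seal(secret: bytes, key: str, value: bytes) -> bytes:
    """Return tag + value, ready to store in the offloaded cache."""
    return _tag(secret, key, value) + value


def unseal(secret: bytes, key: str, blob: bytes) -> Optional[bytes]:
    """Return the value if its tag verifies, otherwise None."""
    if len(blob) < TAG_LEN:
        return None
    tag, value = blob[:TAG_LEN], blob[TAG_LEN:]
    if hmac.compare_digest(tag, _tag(secret, key, value)):
        return value
    return None
```

A caller would treat a `None` result from `unseal` as a cache miss and refetch from primary storage. The secret must stay on the trusted side; if the offload device can read it, the check proves nothing.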

How Does Offloading Affect System Power Consumption?

Offloading caches can increase your system’s power consumption, impacting energy efficiency. As offloaded caches require additional hardware and data transfer, they generate more heat, making thermal management more critical. You might notice higher energy use and potential cooling needs, which could reduce overall efficiency. To mitigate this, optimize hardware and offloading techniques to balance performance gains with power management, ensuring your system remains energy-efficient and thermally stable.

Conclusion

KV cache offloading can give you a scalable, low-latency caching layer, but only when the performance gains outweigh the added cost and complexity. Choose techniques and hardware that fit your workload, and plan for consistency, security, and power consumption from the start so the system stays reliable under demanding workloads.