Structured abstract

Background. The paper makes a bold claim—that video models are zero‑shot learners and reasoners—and supplies qualitative and quantitative evidence. Yet capability ≠ reliability: prompting, evaluation frames, and system composition (LLM rewriter) strongly shape outcomes. Video models are zero-shot lear…

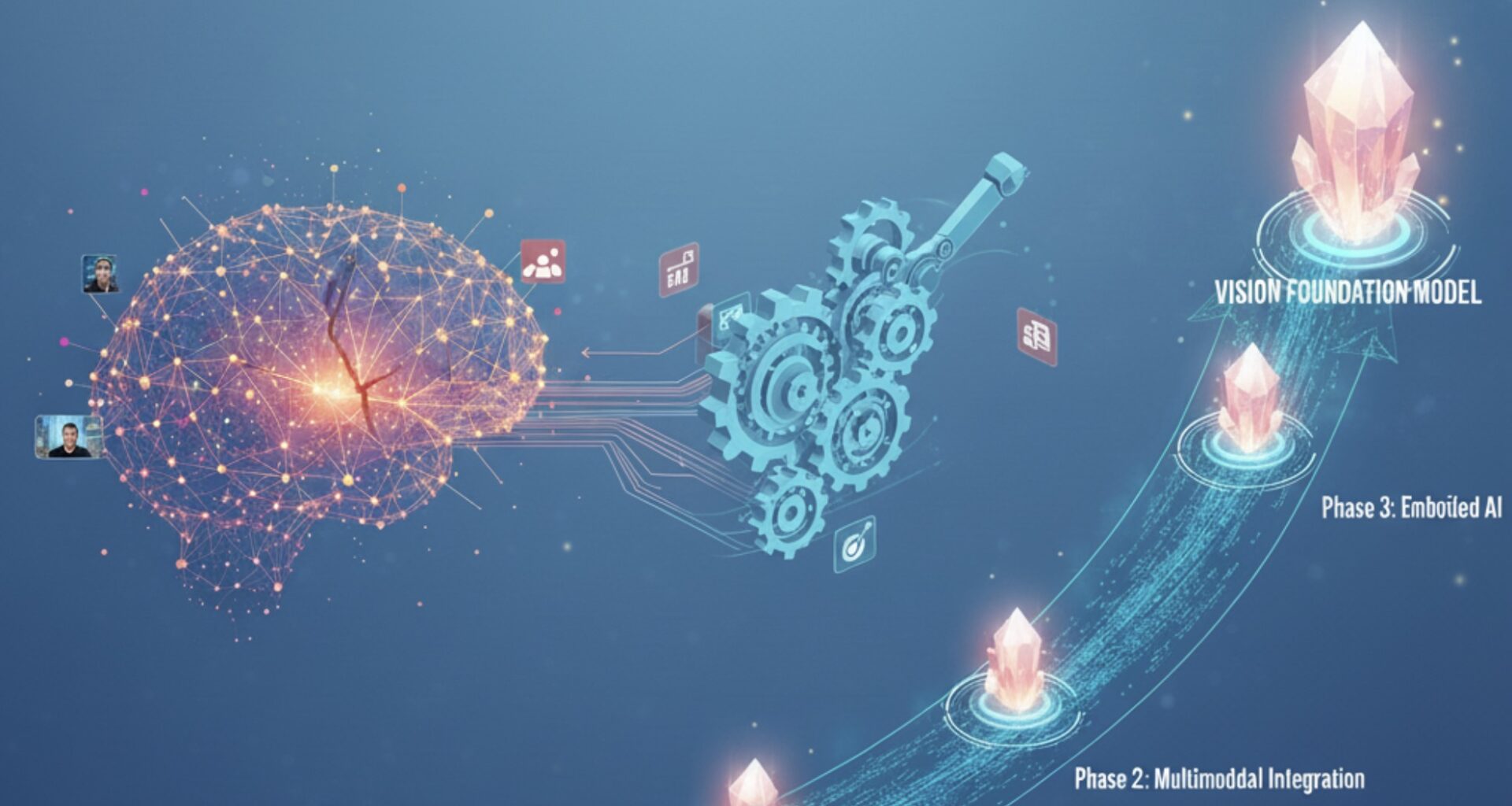

Objective. To analyze where current models succeed and fail, why evaluation can understate or overstate capability, and how to steer research toward controllable, dependable vision foundation models.

Methods. Secondary synthesis of the paper’s design (Fig. 1 p. 2; Methods pp. 2–3), benchmarks (Figs. 3–9 pp. 5–9; Table 1 p. 31), prompt‑sensitivity analysis (Table 2 p. 40), and failure set (Sec. D pp. 42–46), with attention to system composition (LLM prompt rewriter; p. 2) and best‑ vs last‑frame metrics.

Results. (1) Prompting is a first‑class lever: both textual and visual context (e.g., green‑screen prior in segmentation; Fig. 4 p. 6) can swing results dramatically (up to 64‑point pass@1 deltas on symmetry; Table 2 p. 40). (2) Attribution is blurry because an LLM rewriter sits in front of the generator; the authors mitigate this by showing that standalone LLMs fail on several vision‑first tasks (p. 2), but cleaner ablations are needed. (3) Evaluation choices (best‑ vs last‑frame, pass@k) substantially affect reported performance; pass@10 is often much higher than pass@1 (Figs. 3–9, pp. 5–9). (4) Systematic weaknesses persist in metric geometry (depth/normals), symbolic structure (word search, equations), and contact‑rich physics (collisions, cloth, bottlenecks) (pp. 42–46).

Conclusions. The path from “promising” to “foundation” runs through control, physics, metrics, and disciplined evaluation, which together can turn chain‑of‑frames (CoF) stepwise generation into reliable reasoning. Test‑time selection, verifier‑in‑the‑loop scoring, better control channels, and attribution‑aware ablations are immediate levers.

Key results (bulleted)

- Prompt sensitivity is large and measurable. In symmetry completion, changing only the wording moves pass@1 by 40–64 points, with corresponding shifts in average cell errors (Table 2 p. 40).

- Visual priors matter. A green background boosts segmentation mIoU (0.74 vs 0.66 best‑frame), plausibly due to “green‑screen” priors learned during training (Fig. 4 p. 6).

- Evaluation framing changes the story. The model often “keeps animating” after reaching a solution, so best‑frame scores can overstate practical reliability relative to last‑frame scores; pass@10 is consistently higher than pass@1 across tasks (pp. 5–7).

- Composition with an LLM rewriter complicates attribution. The system is treated as a black box, but the paper verifies that a standalone LLM cannot solve several image‑only tasks (p. 2).

- Failure patterns are coherent. Depth/normals (Figs. 62–63), force/trajectory following (Fig. 64), text‑exact puzzles (Figs. 67, 69), and contact‑rich physics (Figs. 72–77) remain weak points (pp. 42–46).

Expanded analysis and roadmap

A. Prompting is power—and confound

Textual instructions. On symmetry, ostensibly similar prompts yield pass@1 ranging from 8% to 68% (random split) and from 8% to 48% (shape split), with clear differences in average cell errors (Table 2 p. 40). The authors’ takeaways (remove ambiguity; specify what must not change; provide a motion outlet; add an explicit “completion” signal; control camera and speed) read like a visual prompt‑engineering playbook (pp. 40–41).

Visual context. A simple green background acts like a latent control knob for segmentation (Fig. 4 p. 6). Visual prompt design (backgrounds, lighting, perspective) can therefore act as a powerful prior and should be controlled in evaluations.

Implication. Benchmarks should report prompt ablations (e.g., variants 1–10 in Table 2) alongside headline numbers to measure robustness to prompt drift.
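One way to report such an ablation is to publish the spread across prompt variants next to the headline number. The sketch below is a minimal illustration only: the function name, the variant labels, and the scores are hypothetical and are not taken from Table 2.

```python
from statistics import mean


def prompt_robustness(pass_at_1_by_prompt: dict[str, float]) -> dict[str, float]:
    """Summarize pass@1 (in percent) across prompt variants of a single task.

    Hypothetical helper: 'spread' is simply best minus worst, a crude
    indicator of how much the headline number depends on wording.
    """
    if not pass_at_1_by_prompt:
        raise ValueError("need at least one prompt variant")
    scores = list(pass_at_1_by_prompt.values())
    return {
        "best": max(scores),
        "worst": min(scores),
        "mean": mean(scores),
        "spread": max(scores) - min(scores),
    }


# Illustrative scores only (NOT the paper's numbers).
summary = prompt_robustness({"variant_a": 20, "variant_b": 50, "variant_c": 35})
print(summary)
```

Reporting the worst variant and the spread alongside the best variant makes it harder for a single well-tuned prompt to stand in for the model's typical behavior.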

B. Attribution: who solved the task?

The system uses a prompt rewriter upstream of the generator (p. 2). For reasoning‑heavy samples (e.g., toy Sudoku; Fig. 55 p. 29), the LLM may contribute planning. The authors partly address this by showing that a standalone LLM cannot solve mazes/symmetry/navigation from images, but clean ablations (rewriter off, rewriter on with logs, generator‑only) would make future claims stronger.

C. Metrics: best‑frame, last‑frame, and pass@k

Because Veo continues animating after reaching a solution, best‑frame (upper bound) can be higher than last‑frame (practical scoring). Many curves improve substantially from k=1 to k=10 attempts (Figs. 3–9, pp. 5–9). Actionable path: add verifier‑in‑the‑loop selection (choose the best candidate via a lightweight visual check), report fixed sampling budgets, and prefer last‑frame for deployment‑like evaluations.
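The two framing choices above can be made concrete with a short sketch. It assumes each generated video has already been scored frame by frame (the scoring function itself is out of scope), and it computes pass@k with the standard unbiased estimator 1 - C(n-c, k)/C(n, k) from the code-generation literature. Whether the paper uses this estimator or a direct best-of-k tally is not stated in this summary, so treat that choice as an assumption; the function names are hypothetical.

```python
from math import comb


def best_and_last_frame(frame_scores: list[float]) -> tuple[float, float]:
    """Return (best-frame score, last-frame score) for one generated video.

    Best-frame is an upper bound: it assumes an oracle picks the right frame.
    Last-frame is what a user sees if the video is simply played to the end.
    """
    if not frame_scores:
        raise ValueError("video has no scored frames")
    return max(frame_scores), frame_scores[-1]


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, of which c are correct.

    Assumption: standard estimator 1 - C(n - c, k) / C(n, k).
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # math.comb returns 0 when n - c < k, so the estimate is exactly 1.0 then.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Illustrative per-frame scores: the model hits the answer mid-video,
# then keeps animating and drifts away from it.
best, last = best_and_last_frame([0.2, 0.9, 0.6])

# Illustrative sampling budget: 10 attempts, 3 of them correct.
p1 = pass_at_k(n=10, c=3, k=1)
p10 = pass_at_k(n=10, c=3, k=10)
```

Fixing `n` in advance and reporting both `p1` and `p10`, together with last-frame scores, keeps the sampling budget and the frame-selection oracle visible in the reported numbers.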

D. Failure analysis → data/objective/interface gaps

- Metric 3D understanding. Poor depth and surface normals (Figs. 62–63 p. 42) suggest missing metric supervision or inconsistent 3D priors.

- Instruction adherence by overlay. The model is inconsistent in following force arrows or motion trajectories (Fig. 64 p. 42), calling for explicit control channels (e.g., conditioning on flow/constraints) rather than inferring them from pixels.

- Symbolic/structured content. Word search and equations trigger hallucinations (Figs. 67, 69, pp. 43–44). Mixed symbolic‑visual objectives or auxiliary OCR/math heads might help.

- Contact‑rich physics and planning. Collisions, breaking, bottlenecks, cloth, and constrained motion (Figs. 72–77, pp. 44–46) expose limits in physical commonsense and constraint satisfaction. Curricula emphasizing contact dynamics and verifiable outcomes could reduce such failures.

E. Where the evidence is strongest today

- Quantitative wins with simple prompts. Edges (Fig. 3 p. 5), segmentation (Fig. 4 p. 6), object extraction (Fig. 5 p. 6), and maze/symmetry (Figs. 7–8, pp. 7–8) already show zero‑shot competence, often with gaps between Veo 2 and Veo 3 that underscore rapid progress.

- Chain‑of‑frames as a reasoning substrate. Temporal unfolding seems to help on tasks where stepwise spatial updates are natural (Fig. 2 p. 3; Figs. 48–59, pp. 28–30).

F. Near‑term recommendations

- Standardize evaluation: always report last‑frame scores; fix sampling budgets; include prompt ablations; and release scoring scripts (as done in Sec. B; pp. 31–39).

- Improve controllability: add explicit force/trajectory conditioning and adherence penalties to address failures in Fig. 64 (p. 42).

- Ablate composition: turn the LLM rewriter off/on; log edits; compare against a generator‑only setting to clarify attribution (p. 2).

- Leverage test‑time compute: exploit pass@k headroom via self‑consistency or majority vote combined with visual verifiers (Figs. 3–9, pp. 5–9). Note: on analogies, simple majority vote is not enough and can hurt (Fig. 61 p. 38), so task‑aware verifiers are needed.

- Physics & geometry curricula: targeted data for contact, fracture, cloth, bottlenecks, and metric 3D supervision to address failures in Figs. 62–77 (pp. 42–46).
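The test‑time compute recommendation above contrasts majority vote with verifier‑based selection. The sketch below is a toy illustration under stated assumptions: candidates are reduced to hashable answers (for example, a decoded final‑frame grid), the verifier is a hypothetical task‑aware scoring function, and the data are invented rather than taken from the paper.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence, TypeVar

T = TypeVar("T", bound=Hashable)


def majority_vote(candidates: Sequence[T]) -> T:
    """Pick the most frequent answer among k sampled candidates."""
    if not candidates:
        raise ValueError("no candidates to vote over")
    return Counter(candidates).most_common(1)[0][0]


def select_with_verifier(candidates: Sequence[T], verifier: Callable[[T], float]) -> T:
    """Pick the candidate the verifier scores highest (first one on ties)."""
    if not candidates:
        raise ValueError("no candidates to select from")
    return max(candidates, key=verifier)


# Toy data: the model's most common answer ("A") is a systematic error,
# while the correct answer ("B") appears only once among the samples.
samples = ["A", "A", "B"]


def toy_verifier(answer: str) -> float:
    """Hypothetical task-aware check that happens to recognize 'B' as valid."""
    return 1.0 if answer == "B" else 0.0


voted = majority_vote(samples)
verified = select_with_verifier(samples, toy_verifier)
```

When a model's errors are correlated across samples, voting amplifies the shared mistake, whereas a verifier that checks the task's constraints can still recover a correct minority answer. The quality of the result then depends entirely on the verifier, which is why it needs to be task‑aware.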

G. Outlook

As costs fall (pp. 9–10) and controllability/reliability rise, the generalist advantages (single model maintenance, broad coverage, better long‑tail behavior) should mirror the LLM shift in NLP. The paper’s Table 1 shows that even a black‑box, prompt‑only protocol over 62 + 7 tasks already surfaces generalist behavior; the remaining work is to make that behavior dependable, auditable, and controllable.