Mastering

Ozone 9.1 Update Available

2025

iZotope released Ozone version 9.1. The new version includes fixes and improvements, including support for macOS Catalina.

New features and enhancements

- Added ability to scrub (click and drag) to adjust text-based values in the EQ HUDs

- Added “Extra Curves” displays to the EQ

- Extended the range of the Gain control in Master Rebalance

Ozone 9.1 fixes

- Fixed LP/HP filter Slope value resetting to 6 dB/oct if value was changed using the inline edit field in the EQ HUD.

- Fixed incorrect Q value display for bands that are disabled by default in the All Bands view of the EQ.

- Fixed issue where double-clicking on an .ozn project file would open an empty Ozone app project instead of the selected project.

- Fixed missing ‘Sum to Mono’ button in the I/O panel of the Ozone 9 Imager component plug-in.

- Fixed incorrect module panel being shown when switching between tracks with different signal chain contents in the Ozone app.

- Fixed missing playhead indicator in the Reference panel.

- Fixed missing Initial State entry in the Ozone mothership plug-in undo history list.

- Fixed module panel remaining visible after the module was removed from the chain by stepping back through events in the history list.

- Fixed inconsistent behavior when selecting bypass events in the Ozone app history list.

- Fixed missing undo history events for module bypass and solo for any module that is not included in the factory default signal chain.

- Fixed missing undo history events for adding or removing bands in the Dynamics, Exciter, and Imager modules.

- Fixed incorrect latency reporting when Codec Preview is enabled.

- Fixed intermittent crash when writing Dynamic EQ plug-in automation.

- Fixed crash when global mothership presets were loaded in an Ozone component plug-in.

- Fixed intermittent crash in the Ozone application when bulk importing files of varying sample rates.

- Fixed intermittent crash that could occur after running Master Assistant several times in certain DAWs.

- Fixed crash opening non-44.1k Ozone app projects that contain plug-ins in the signal chain of any track.

- Fixed intermittent crash when loading Ozone app projects, sessions, or host presets with reference tracks of varying sample rates or file formats.

- Fixed crash/hang on playback when using Reference tracks within dual mono instances of Ozone in Logic.

- Fixed inaccurate output meter clipping indication in the Ozone app.

- Fixed custom install location for Tonal Balance Control 2 on Windows.

- Fixed inconsistent behavior when loading module presets in the mothership or app preset manager.

- Fixed inconsistent bandwidth value settings when loading some EQ module presets.

- Fixed track waveform display issues when resizing the Ozone application.

- Fixed delayed update to the composite curve after adjusting Frequency resolution in the EQ options.

- Fixed latency between the scrolling waveform and gain reduction trace.

- Fixed display of track waveform data when zoomed in to the sample level.

- Fixed incorrect default I/O meter type.

- Fixed labeling of Mid/Side mode in Low End Focus.

- Fixed layout and styling of the plug-in selection list in the Ozone application.

- Fixed alignment of the correlation trace center line in the Ozone 9 Imager.

- Fixed alignment of text elements in the Master Assistant panel.

- Fixed overlapping scale labels in the EQ and Match EQ.

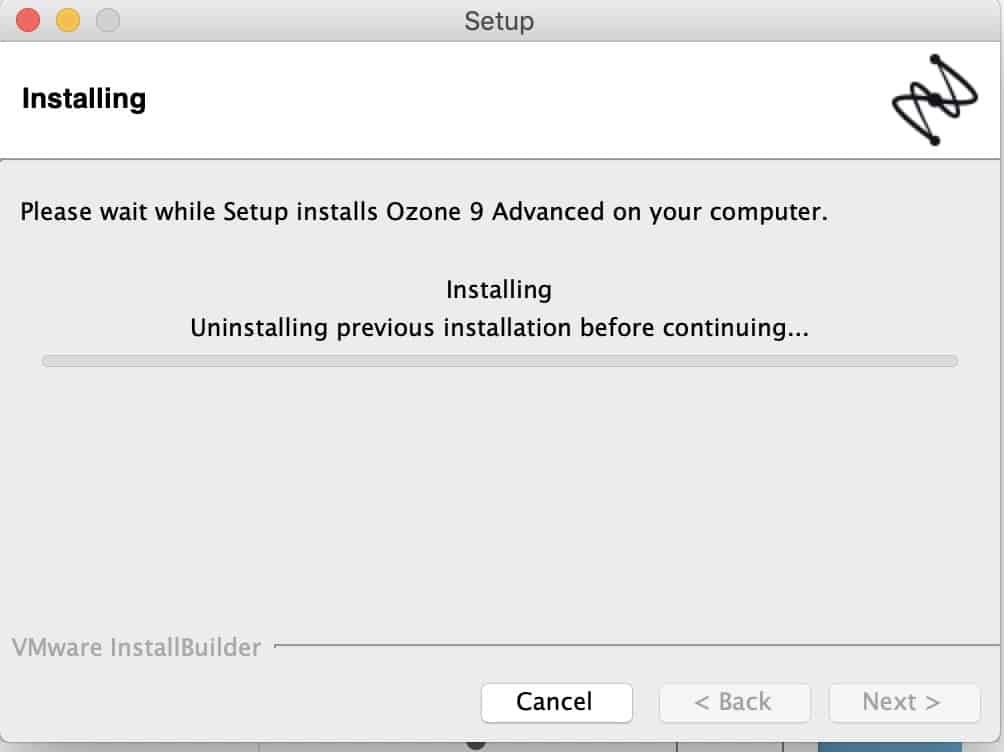

How to update

Go to the iZotope download page. You will be asked to sign in with your iZotope account email and password.

Once you have signed in, you’ll be taken to the download page. If you don’t have an account, simply create one first.

Mastering is the final stage in a recording’s creation and the most crucial step of the production process. It requires critical listening and subtle changes. It is essential that the client is involved in the process and provides honest feedback.

Critical Listening Is Key to Creating a Great Master

Leaders need to be able to listen critically. This involves listening without preconceived notions and being able to give and receive constructive feedback. Distractions can hinder communication, so it is important to stay relaxed and focused. When you are listening to a speaker, pay close attention to his or her words and body language, as well as to the tone and accent of the speaker’s voice and the speaker’s facial expressions.

Good listeners can organize information by asking questions, separating assumptions from facts, and deducing background information. They can also connect concepts logically, which leads to sound and productive conclusions. Through critical listening, you learn from others and promote clear communication.

Critical listening skills are essential in public speaking events. These events are attended by people who focus on evaluating the speech. They are aware that the speaker may not be telling the truth about a subject. Therefore, they use context and facts to make informed decisions. Critical listeners also take notes.

Good listeners are able to empathize with the speaker. They develop the ability to understand the speaker’s perspective and the emotions and thoughts the speaker conveys. This skill can help people become better problem-solvers and decision-makers, and it can help new managers and recently promoted employees become more effective leaders.

It’s an Art Form

Mastering music is a crucial step in creating a high-quality recording. In addition to ensuring the consistency of volume, it also helps to reduce unwanted noises and transients in the music. In other words, it is essential to making your music sound professional and successful on different platforms. It is best to master your music in a familiar room and use various tools, such as A/B testing, to identify any problem areas and ensure the best results possible.

Music can be a way to express oneself, and it can be an excellent marketing tool. Many artists use modern technology, such as music videos and Instagram posts, to promote their work. Mastering music is an art form that requires knowledge of music theory as well as plenty of hands-on practice.

Mastering involves many steps, including adjusting overall levels, equalization, and dynamics. Preparing for distribution is the final step: once mastering is complete, the songs are bounced to create a track or album that can be distributed.

Professional mastering engineers typically have years of experience. Many train formally and devote years of their lives to perfecting their craft. For the best results, it is usually worth hiring a professional mastering engineer: the best ones have expert ears, extensive experience, high-quality audio equipment, and well-treated rooms.

Mastering is a process that aims to maximize the volume and quality of a recording. A well-executed master can make a song louder, punchier, and more enjoyable to listen to. A mastering engineer will analyze the dynamic range of a recording to determine the best way to compress it.

It’s a Process

Mastering is a technical process that enhances the sound quality of a recording and makes it more attractive to the listener. It helps create a consistent flow throughout an album and adapts the material for different media and distribution methods. The final goal is a piece of music that can be enjoyed by the widest possible audience.

Mastering takes place after mixing. The finished stereo mix is given to the mastering engineer, who works on the audio and makes minor adjustments. This process affects the whole audio rather than individual instruments. Preparation is key before mastering a track, and even new mastering engineers should start by following a few simple preparatory steps.

Modern mastering makes the final mix sound good across multiple devices and streaming services. It also ensures that the volume levels of individual tracks match, which greatly improves the listening experience. Creating a master requires many technical and creative steps. The goal is an album that sounds great on different sound systems and is properly encoded for various formats.

Mastering is crucial after mixing, as it ensures that the songs on a release sound consistent with one another. Music that is not mastered can sound jumbled and make for a poor listening experience, and mastering helps songs sound their best. Mixing comes first: the mixer works with multiple audio tracks to produce the best stereo mix possible, adjusting individual instruments to achieve a better balance.

It’s a Game that Involves Subtle, Small Changes

Mastering is an art form that entails making small, subtle changes to the audio of your music. It is important to use neutral ears and a fresh perspective when working on your mixes. The intensity of mixing over a few days, weeks, or even months will have an impact on your impartiality, so always try to hear your songs with fresh ears and eyes.

Mastering is essential to audio production because it ensures track consistency: each track must sound as though it belongs on the same album. This process is crucial if you want your music to sound professional and perform well on different platforms. It is also a good idea to get a second opinion on your mix so that problems in different parts can be spotted.

Mastering is the final stage of recording after mixing. Mixing involves setting the gain level of each track, panning, and applying effects. Mastering, on the other hand, gives your mix its final polish. It involves small changes to the overall tonal and dynamic balance of the whole mix, and it can be harder to get right than mixing.

Modern mastering is about making your music sound great across all platforms, devices, and streaming services. It also ensures that different tracks have consistent volume levels, making listening to your music easier and more enjoyable. Mastering prepares your music for distribution through tasks such as sample rate conversion and bit depth reduction. It can also include embedding metadata that helps you share your music across multiple platforms.
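Bit depth reduction is usually paired with dither: low-level noise added before rounding so that the quantization error behaves like benign noise instead of distortion. The sketch below assumes floating-point samples between -1.0 and 1.0 and uses TPDF (triangular) dither. The function name `to_16_bit` and the choice of scaling by 32767 are illustrative assumptions, not any particular tool’s implementation.

```python
import random

def to_16_bit(samples, seed=None):
    """Requantize float samples (-1.0 to 1.0) to 16-bit integers with TPDF dither.

    Hypothetical helper for illustration; real converters and mastering
    tools may scale, shape, and clip differently.
    """
    rng = random.Random(seed)
    out = []
    for x in samples:
        # TPDF dither: the sum of two independent uniform values spans +/-1 LSB
        dither = rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        # Scale to the 16-bit integer range, add dither, then round
        q = round(x * 32767 + dither)
        # Clamp to the legal 16-bit range so full-scale peaks cannot wrap around
        out.append(max(-32768, min(32767, q)))
    return out

# Usage: very quiet material comes out as dithered low-level values
quiet = to_16_bit([0.00002, -0.00002, 0.0], seed=42)
```

Mastering tools often add noise shaping on top of plain TPDF dither to push the added noise into less audible frequencies; this sketch leaves that out.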

Mastering adds the final touch to your recording and helps it stand out from other songs. Skipping mastering is like designing a beautiful car and then not painting it.

It’s a Process that Requires Lots of Practice

Mastering is the final step in post-production before a record is ready for distribution. There are many techniques that can be used to make the recording sound great. They include equalization, compression, distortion, stereo imaging, and adhering to loudness standards. Mastering has a long history.

Many artists believe they can master their own records. However, many home studios lack proper acoustic treatment and accurate monitoring. It is important to work with a mastering engineer who has years of experience and training. It is also useful to remember the old saying “fix it in the mix”: problems in the mix should be fixed at the mixing stage rather than left for mastering.

Listening to your song on various playback devices is also important, because it gives you an idea of what your listeners hear. You can check your master on the headphones that are most popular with your listeners; two well-known examples are Beats by Dre headphones and Apple AirPods. Some listeners may also play music directly through their phone’s speaker.

Equalization is a key step in mastering your song. Human hearing spans roughly 20 Hz to 20 kHz, and EQ shapes the tonal balance across that range so that no region sounds overpowering or thin. Subtractive EQ cuts problem frequencies, such as a muddy build-up in the low mids, instead of boosting everything else.
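As a concrete illustration of a subtractive cut, the sketch below builds a single peaking-EQ band from the widely used “Audio EQ Cookbook” (RBJ) biquad formulas and evaluates its magnitude response. The center frequency, gain, and Q in the usage line are hypothetical examples, not recommended settings, and the function names are illustrative.

```python
import cmath
import math

def peaking_eq(f0, gain_db, q, fs):
    """RBJ 'Audio EQ Cookbook' peaking filter; returns (b, a) with a[0] == 1."""
    amp = 10 ** (gain_db / 40)          # square root of the linear gain at f0
    w0 = 2 * math.pi * f0 / fs          # center frequency in radians per sample
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    # Normalize every coefficient by a[0]
    return [c / a[0] for c in b], [c / a[0] for c in a]

def magnitude_db(b, a, f, fs):
    """Magnitude response of the biquad, in dB, at frequency f."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)  # z^-1 evaluated on the unit circle
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20 * math.log10(abs(num / den))

# Usage: a hypothetical 3 dB cut at 300 Hz (Q = 1) for 48 kHz audio
b, a = peaking_eq(300.0, -3.0, 1.0, 48000)
print(round(magnitude_db(b, a, 300.0, 48000), 2))  # prints -3.0
```

The band reaches its full cut at the center frequency and returns to 0 dB far away from it, which is why a narrow subtractive cut leaves the rest of the spectrum largely untouched.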

Another important step in mastering is adjusting the level of the mix. This involves controlling unwanted spikes in volume, typically with a limiter. Finally, mastering prepares the finished product for transfer to digital formats or vinyl.
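The peak control described above can be sketched as a very simple limiter. This assumes float samples, an instant attack, an exponential release, and no lookahead; commercial limiters add lookahead and smoother gain curves to avoid distortion, so treat this only as an illustration of the idea. `peak_limit`, `ceiling`, and `release_ms` are hypothetical names.

```python
import math

def peak_limit(samples, ceiling=0.9, release_ms=50.0, fs=48000):
    """Minimal peak limiter sketch: instant attack, exponential release, no lookahead."""
    # Per-sample smoothing factor for the release phase
    release_coeff = math.exp(-1.0 / (release_ms * 0.001 * fs))
    gain = 1.0
    out = []
    for x in samples:
        peak = abs(x)
        # Gain that would place this sample exactly at the ceiling (1.0 if already below)
        target = ceiling / peak if peak > ceiling else 1.0
        if target < gain:
            # Attack: drop the gain immediately so this sample cannot exceed the ceiling
            gain = target
        else:
            # Release: let the gain recover smoothly towards the target
            gain = target + (gain - target) * release_coeff
        out.append(x * gain)
    return out

# Usage: two overs get pulled down to the 0.9 ceiling
limited = peak_limit([0.5, 1.2, -1.5, 0.3, 0.3])
```

Because the gain never rises above what the current sample allows, no output sample exceeds `ceiling`. The price of skipping lookahead is that sudden gain changes can add audible distortion on real material.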

Although mixing and mastering are similar processes, there are some important differences. Mastering is usually done by a professional mastering engineer and involves a variety of tools and techniques, such as matching the volume of each song and balancing different frequency ranges. Mastering also matters for streaming and radio because it adds metadata that allows songs to display the appropriate album artwork, song title, and release date.

Mixing

Mixing is the art of blending sounds from multiple audio elements into a finished song. It is a process that requires a high level of expertise, dedication, and patience, and it is very time-consuming, especially for beginners. If you are unable to devote time to mixing, you can hire a professional to do it for you.

The mixing process can involve editing the song’s audio tracks and adjusting their pitch and timing, as well as tweaking track levels or applying automation. The goal is to make sure the mix sounds great on a variety of playback systems. It is important to test the mix on earbuds or headphones, and you can also try it on an alternate set of speakers, such as those in your car.

Mixing and mastering share some basic tasks, but there are a few crucial differences. Mixing involves heavy-handed adjustments to individual tracks, whereas mastering focuses on subtle, broad strokes. Both processes aim to enhance the artist’s vision and convey the song’s emotional intent, and mastering will typically improve the overall sound quality.

The mixing engineer labels each track, organizes the tracks into similar groups, and balances their volume levels. The engineer then runs individual tracks through an equalizer to remove unwanted frequencies and increase clarity. Online music mastering services apply similar processing, although they work on the finished stereo mix rather than on individual tracks and may use other processing tools.

When it comes to music production, mixing and mastering are essential to achieving a quality finished product. The process of mixing and mastering involves making adjustments to the song’s original sound and avoiding unintentional audio issues that may arise later. You may have to adjust the bitrate, bit depth, and sample rate in the mastering stage.

Mixing is an iterative process: it usually takes several versions, each with small changes, before everything is just right and the mix reaches its final quality.

Mastering Is a Process

Mastering enhances the mix so that it sounds as good as possible. It should not be about fixing bad mixes but about improving good ones. A mastering engineer might, for example, reduce the dynamic range of a song, which can result in a tighter sound.

The mixing stage occurs after the song’s different parts have been recorded. The mixing engineer blends them to create a stereo audio file, which is then passed to the mastering engineer. Mastering is the final step before the track can be released.

The mastering engineer works with a finished stereo track. Unlike the mixing engineer, the mastering engineer does not have access to the individual tracks, so mixing problems cannot be fixed directly, but adjustments can still be made that make the song sound better. The mastering engineer also adds metadata, which allows radio stations, iTunes, and Spotify to display the song name and album artwork.

During mixing, the engineer applies any effects or EQ to the tracks. Once the mix is finished, it is bounced as a single stereo track or as stems. Finally, the mastering engineer makes it sound as good as possible. If the mastering engineer isn’t satisfied with the mix, they may ask for a revised mix or for stems to work with.

Mastering is the last stage of the recording process. The mastering engineer will arrange the songs in the order they will be placed on an album. Mastering engineers also decide whether to leave gaps between songs and to adjust the volume levels. They aim to improve the sound quality of each song while maintaining a consistent overall sound.

Mastering is a different process from mixing: mixing involves multiple tracks, while mastering focuses on one stereo file and makes sure the components of the song come together as a whole. Mastering is the final quality control, and it can be quite expensive.

Time Involved in Each Process

The time involved in mixing and mastering a track can range from a few hours to a few days, depending on the complexity and size of the project. The mastering session for a single track may itself take anywhere from half an hour to two hours, depending on the skill level of the audio engineer and the quality of the track.

Mixing and mastering a song takes time, depending on the size of the project, the complexity of the song, and the artist. For example, a rock production can be relatively straightforward, whereas pop and hip hop productions are often more complicated. The type of voice and the style of the music also affect how long it takes to mix; rapping, for example, calls for a different mix approach than melodic singing.

It is important to perform these two processes separately in order to achieve the best quality sound in the final product. Mixing and mastering involve balancing and polishing the audio tracks so they fit together well. If the music is mixed poorly, the final product will be a poor master.

Mastering often begins with choosing a reference track. Comparing the master with the reference helps determine whether any changes are necessary. The master should not be an exact copy of the reference, but a reference track can help you assess how well the mix and master are working.
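When comparing a master with a reference, the louder track tends to sound “better”, so it helps to level-match the two before judging. A minimal sketch, assuming both tracks are available as lists of float samples and using plain RMS as a rough stand-in for perceived loudness (a loudness meter following ITU-R BS.1770 would be more accurate). All function names are hypothetical.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of float samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def match_gain_db(master, reference):
    """Gain in dB to apply to `reference` so its RMS level matches `master`."""
    return 20 * math.log10(rms(master) / rms(reference))

def apply_gain(samples, gain_db):
    """Scale samples by a gain given in dB."""
    factor = 10 ** (gain_db / 20)
    return [x * factor for x in samples]

# Usage: the reference sits about 6 dB lower, so it is raised before the A/B
master = [0.5, -0.5, 0.5, -0.5]
reference = [0.25, -0.25, 0.25, -0.25]
gain = match_gain_db(master, reference)
matched_reference = apply_gain(reference, gain)
```

Matching the reference to the master (rather than the other way round) leaves the master untouched, so what you hear is exactly what will be delivered.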

There are many stages involved in the process of music production, and each stage of the process is important. Some artists prefer to have one person manage the entire process while others use multiple professionals. Mastering requires a high level of skill and knowledge. A mastering engineer is able to create high-quality products.

The Effects of Mastering a Song

In audio, mastering is a crucial step in ensuring the final quality of a song. It goes beyond just adjusting levels: it improves the track’s overall quality by increasing its coherence, consistency, and harmonic quality. To achieve this, engineers listen to the song first and then make adjustments that enhance the sound, interpreting it to reflect its mood and feel.

A key tool in mastering is the compressor. Combined with makeup gain, it can increase the overall loudness of a recording, although aggressive settings can introduce distortion. By reducing the dynamic range, compression raises the quieter parts of the recording relative to the loud ones, so they are less likely to be masked by background noise or by the noise of consumer-grade playback equipment. Compression can also alter the timbre or envelope of a signal, which affects the overall sound.
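What a compressor does to levels can be shown with its static gain curve. The sketch below assumes a hard-knee design and works on levels in dB rather than on audio samples (real compressors also have attack and release times). The threshold, ratio, and makeup values are hypothetical, and the function names are illustrative.

```python
def compressor_gain_db(level_db, threshold_db=-18.0, ratio=4.0):
    """Static hard-knee compressor curve: gain change (dB) for an input level (dB)."""
    if level_db <= threshold_db:
        return 0.0  # below the threshold the signal passes unchanged
    # Above the threshold, the output rises only 1 dB per `ratio` dB of input
    output_db = threshold_db + (level_db - threshold_db) / ratio
    return output_db - level_db

def compress_levels(levels_db, threshold_db=-18.0, ratio=4.0, makeup_db=0.0):
    """Apply the static curve plus makeup gain to a list of levels in dB."""
    return [lvl + compressor_gain_db(lvl, threshold_db, ratio) + makeup_db
            for lvl in levels_db]

# Usage: a quiet passage at -30 dB and a loud one at -6 dB, with 9 dB of makeup gain
print(compress_levels([-30.0, -6.0], makeup_db=9.0))  # prints [-21.0, -6.0]
```

The loud passage ends up where it started, while the quiet one is 9 dB louder: the 24 dB gap between them shrinks to 15 dB, which is the reduced dynamic range described above.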

Mastering is the final step in the post-production process before the song is released for distribution. Mastering engineers will balance the sonic elements and optimize playback. This final recording is referred to as a “master recording” and is used to distribute the song on physical media.

Mastering also helps make the sound more cohesive throughout the record, and it is essential for achieving the final sound of the music. Its objective is to ensure that the artist’s original emotional intention can be heard. This is achieved through the use of effects, panning, and reverb.

While mixing and mastering are different processes, both are essential to the overall sound of a song. The purpose of both is to create a good balance between individual elements and a polished, cohesive whole. Without mixing, a song may sound unbalanced or messy. Mastering is essential to achieve a professional result and helps ensure the quality of your music.

It was the late 1950s, and people were starting to notice something strange happening in the world of music. Record companies were competing with each other to make their records louder and louder, a phenomenon that became known as the Loudness War. In this blog post, we will explore the history of the Loudness War and why it is such a big issue in the world of audio engineering.

Anyone who has ever mixed a song knows that the most important tool is the human ear. However, the ear can be easily fooled, which is why it is essential to have a reliable reference metering system.

Such a system can help ensure that the final mix complies with the loudness targets of the various streaming platforms available today. It can also help prevent clipping and other issues that can impact the quality of the final mix. While there are many different metering systems on the market today, it is important to choose one that is accurate and easy to use.

With so many options to choose from, it can be difficult to know where to start. However, by taking the time to research the different options, you can be sure to find a system that will meet your needs and help you produce the best possible mix.

The Loudness War

The Loudness War has its roots in the 1950s, when people started to notice that records were getting louder and louder. This was due to competition between record companies to make their records sound louder than their competitors’, because people tended to play the louder records on jukeboxes more often. The result was an arms race of sorts, with companies constantly trying to one-up each other in the loudness department.

This arms race continued into the 1960s and 1970s, with companies using ever-more sophisticated methods to make their records sound louder. This often led to music sounding distorted and unpleasant, as the waveforms of the sounds were being artificially clipped in order to make them louder.

There’s a physical limit to how loud a vinyl record can be cut without making it unplayable, so even the loudest-cut records managed to retain quite reasonable dynamics.

The advent of the compact disc in the 1980s allowed for even higher levels, as well as playing times that were sometimes twice as long as those of vinyl. But these increased Loudness War volume levels have drawbacks.

First, the overall level of noise in society has increased, which can lead to hearing damage. Second, the increase in loudness has reduced the dynamic range of music, which can make it sound less natural and more fatiguing. Third, the increase in loudness has made it harder for music to stand out from other noise sources in our lives, such as traffic or conversation. As a result, we may find ourselves turning our music down, which defeats the purpose of making it louder in the first place.

In the 1980s, digital audio became more common, and this allowed for even more Loudness War-style shenanigans. Digital audio has a hard ceiling at 0 dBFS, but compression and limiting can push the average level ever closer to that ceiling without hard clipping. This led to many records being mastered at extremely high levels, which often made them sound bad.

The Loudness War is still going on today and shows no signs of stopping, even though streaming services like Spotify now use what is known as “loudness normalization”. Normalization adjusts playback levels so that all tracks play at roughly the same loudness. This means that a track mastered louder than the others is simply turned down, so heavy limiting no longer buys a loudness advantage and may just make the track sound flatter than its more dynamic neighbors.
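Loudness normalization comes down to simple arithmetic once a track’s integrated loudness has been measured (for example in LUFS per ITU-R BS.1770, which this sketch does not implement). The -14 LUFS target and the rule that quieter tracks are not turned up are assumptions for illustration only; each service sets its own reference level and behavior. The function name is hypothetical.

```python
def normalization_gain_db(measured_lufs, target_lufs=-14.0, turn_up=False):
    """Playback gain (dB) a normalizing player might apply to reach the target.

    Assumptions for illustration: a -14 LUFS target, and quieter tracks are
    left alone unless turn_up is True. Real services differ.
    """
    gain = target_lufs - measured_lufs
    if gain > 0 and not turn_up:
        return 0.0  # quieter than target: assume no boost
    return gain

# Usage: a heavily limited -8 LUFS master versus a more dynamic -14 LUFS master
print(normalization_gain_db(-8.0))   # prints -6.0
print(normalization_gain_db(-14.0))  # prints 0.0
```

Under these assumptions the heavily limited master is played 6 dB quieter and lands at the same loudness as the dynamic one, keeping only the side effects of its limiting.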

So why does the Loudness War continue to this day? There are a few reasons.

First of all, there is a lot of pressure on artists and record labels to make their music sound as loud as possible. This is because listeners tend to prefer the louder of two otherwise similar recordings, and not every playback platform or setting applies loudness normalization.

Secondly, it can be very difficult to make a record sound both loud and good. Often, the two are mutually exclusive.

Finally, there is a lot of misinformation out there about how to make a record sound good. Many people believe that the only way to make a record sound good is to make it as loud as possible when in reality, this is often not the case.

The Loudness War is a big problem in the world of audio engineering, and it shows no signs of stopping anytime soon. Hopefully, with more education and awareness, we can move away from the loudness race and towards a more balanced approach to audio mastering.

Loudness Management

Imagine you’re settling in for a movie night. You’ve got your favorite snacks and drinks, you’re cozy on the couch, and you’ve finally found the perfect film. The only thing left to do is press play…and then scramble to find the remote when the opening credits blast out of your speakers at an ear-splitting volume. We’ve all been there, and it’s one of the most frustrating things about watching TV.

Thankfully, there’s a solution: loudness management. Loudness management is the process of playing all programs at a consistent loudness, relative to each other. That way, once you set your volume to a comfortable level, you should never have to change it, even as you switch from a movie to a documentary, to a live concert.

For example, Netflix specifically aims to play all dialogue at the same level, so you can always understand what’s being said without having to adjust the volume. As a result, loudness management ensures that you can sit back, relax, and enjoy your favorite films and TV shows without any unwanted interruptions.

Netflix is constantly working to ensure that its content is of the highest quality. Part of this involves ensuring that all dialogue is easy to hear and understand. To do this, they measure the loudness of all content before encoding it.

This helps them to adjust the level of each piece of dialogue so that it is audible without being disruptive. The use of anchor-based measurement ensures that all dialogue is consistent, regardless of the type of show or movie.

This allows viewers to focus on the story, rather than trying to decipher what is being said. As a result, Netflix’s commitment to quality audio helps to create a more enjoyable viewing experience for their customers.

Dynamic Range Control

The goal of dynamic range control is to optimize the dynamic range of a program to provide the best listening experience on any device, in any environment. Dynamic range is the difference between the loudest and softest sounds that a system can reproduce, and it is usually expressed in decibels (dB). The dynamic range of human hearing is often quoted as about 120 to 140 dB. Older systems had a limited dynamic range, often around 40 dB.

This meant that very quiet sounds were lost in noise, and very loud sounds were distorted. Newer systems have a much greater dynamic range, often greater than 90 dB, so very quiet sounds can be reproduced without being buried in noise, and very loud sounds can be reproduced without clipping.
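The decibel figures above come from simple formulas: an amplitude ratio in dB is 20·log10 of the ratio, and an ideal N-bit digital system has a theoretical signal-to-noise ratio of about 6.02·N + 1.76 dB for a full-scale sine wave. A minimal sketch with hypothetical function names:

```python
import math

def ratio_to_db(loudest, softest):
    """Dynamic range in dB between two amplitude (not power) values."""
    return 20 * math.log10(loudest / softest)

def pcm_dynamic_range_db(bits):
    """Theoretical SNR of an ideal N-bit quantizer for a full-scale sine wave."""
    return 6.02 * bits + 1.76

# Usage
print(round(ratio_to_db(1000.0, 1.0), 1))   # prints 60.0
print(round(pcm_dynamic_range_db(16), 2))   # prints 98.08
```

For 16-bit audio, as used on CD, this gives roughly 98 dB, consistent with the “greater than 90 dB” figure above; real converters fall somewhat short of the theoretical value.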

Dynamic range control adjusts the levels of all sounds so that they are within the system’s dynamic range. It makes sure that very quiet sounds are audible, and very loud sounds are not distorted. It also adjusts the levels of all sounds so that they are evenly balanced. This provides the best listening experience on any device, in any environment.

One of the challenges of modern television is that the dynamic range of many programs often exceeds the dynamic range of the viewer’s device and environment. This can lead to a number of problems, including the need to constantly adjust the volume and difficulty understanding dialogue.

In these cases, dynamic range compression (DRC) can be used to reduce the dynamic range of the content to a more manageable level. While DRC has some drawbacks, such as reducing the impact of loud, dramatic moments, it can be a useful tool for ensuring that viewers can hear all of the details in a program’s audio.

When you’re watching a movie on Netflix, the goal is to provide you with the best possible experience. Part of that experience is delivering the audio in a way that sounds great, regardless of what device you’re using.

To do that, Netflix employs a sophisticated algorithm that reduces dynamic range in a sonically pleasing way. This ensures that dialogue levels remain unchanged, and only a gentle adjustment is made when sounds are excessively loud or soft for the listening conditions. As a result, you can be confident that you’re getting the best possible sound quality when watching your favorite movies and TV shows on Netflix.
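The idea of leaving dialogue untouched while gently adjusting sounds that are too loud or too soft can be illustrated with a static gain curve that has a “null band” around the dialogue level. This is a hypothetical sketch, not Netflix’s algorithm: the -27 dB dialogue level, the ±5 dB band, and the 2:1 ratio are assumed values, and a real system would also smooth the gain over time.

```python
def drc_gain_db(level_db, dialogue_db=-27.0, null_band_db=5.0, ratio=2.0):
    """Hypothetical static DRC curve with a 'null band' around the dialogue level.

    Levels inside the band are untouched; louder sounds are cut and softer
    sounds are boosted, each by the given ratio. Values are assumptions.
    """
    upper = dialogue_db + null_band_db
    lower = dialogue_db - null_band_db
    if level_db > upper:
        # Too loud: shrink the excess above the band by `ratio`
        return (upper + (level_db - upper) / ratio) - level_db
    if level_db < lower:
        # Too soft: shrink the shortfall below the band by `ratio`
        return (lower - (lower - level_db) / ratio) - level_db
    return 0.0  # dialogue-level sounds pass unchanged

# Usage: an explosion at -12 dB, a line of dialogue at -27 dB, a whisper at -42 dB
print([drc_gain_db(lvl) for lvl in (-12.0, -27.0, -42.0)])  # prints [-5.0, 0.0, 5.0]
```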

All-Analogue Times

In the all-analog era, mixing consoles, tape machines, vinyl discs, and other consumer replay media all used a nominal ‘reference level’, essentially the average program signal level. Above this reference level, an unmetered space called ‘headroom’ accommodated musical peaks without clipping.

This arrangement allowed different materials to be recorded and played with similar average loudness levels, whilst retaining the ability to accommodate musical dynamics too. The result was a more natural and musically satisfying sound that gave listeners a sense of space and air around the instruments. In the digital age, however, things are very different.

With digital audio, it is all too easy to add gain until the converter clips, resulting in a harsh, distorted sound. Moreover, many modern recordings are deliberately compressed and limited to maximize loudness at the expense of dynamics. While this may make them sound impressive at first, prolonged listening quickly becomes fatiguing.
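In digital audio, headroom is measured against 0 dBFS, the converter’s clipping point. The sketch below assumes float samples where 1.0 is full scale and reports peak level, RMS level (a rough proxy for the average program level), remaining headroom, and crest factor, the peak-to-RMS gap that heavy limiting squeezes down. Function names are hypothetical; note also that some meters add 3 dB to RMS readings so that a full-scale sine reads 0 dB.

```python
import math

def peak_dbfs(samples):
    """Highest absolute sample value in dB relative to full scale (1.0 = 0 dBFS)."""
    peak = max(abs(x) for x in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

def rms_dbfs(samples):
    """Average (RMS) level in dBFS, a rough stand-in for the average program level."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

def headroom_db(samples):
    """Space left between the highest peak and the 0 dBFS clipping point."""
    return -peak_dbfs(samples)

def crest_factor_db(samples):
    """Peak-to-RMS gap; heavy limiting pushes this number down."""
    return peak_dbfs(samples) - rms_dbfs(samples)

# Usage: a short test signal peaking at half of full scale
signal = [0.5, -0.2, 0.1, -0.3]
print(round(headroom_db(signal), 2))  # prints 6.02
```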

Thankfully, there are still recording engineers who understand the value of headroom and dynamic range, and who can produce recordings that have all the power and punch of digital audio without sacrificing musicality. These recordings are a joy to listen to and provide a welcome respite from the relentless barrage of over-compressed, fatiguing masters.

Moving to Digital Producing & Mixing

From the perspective of sound quality, digital audio always had one big advantage over analog – it was (and is) immune to interference from electrical and magnetic fields. This meant that in theory at least, digital audio systems could offer much higher levels of performance than their analog counterparts.

However, in practice, early digital audio systems were often found wanting. One major problem was that the converters used to turn the analog signal into digital form performed poorly at low signal levels, where quantization distortion was most audible. This meant that signal levels had to be kept as high as possible, right up to the clipping point. As a result, the ‘reference level’ effectively became the clipping level, and the notion of headroom fell by the wayside, becoming this war’s first casualty!

Considerations When Mixing In Big Rooms

There are a few things to consider when choosing a monitor setup for a recording studio. One is the type of layout, either ITU or cinema. The ITU layout is typically used in smaller studios and more closely reflects what the consumer’s system will be like.

This can be important for things like panning movements, as the imaging will be different between the two layouts. Another thing to consider is the size of the room and the number of speakers needed. For smaller rooms, near-field monitors may be sufficient, but for larger rooms, floor-standing speakers may be necessary in order to get the full range of sound.

Finally, it is also important to think about budget when choosing monitors, as there can be a wide range in prices. Ultimately, there are a lot of factors to consider when choosing monitors for a recording studio, but with a little research, it is possible to find the perfect setup for any situation.

Even when mixes are monitored through a near-field system, it is important to consider other ways to check intelligibility. One way to do this is to use a speaker that mimics a TV speaker.

This can be very useful in checking for clarity and understanding. However, it is important to note that there is no one-size-fits-all solution. What works for one mix may not work for another. I still remember when I watch (or listened) to a new mix on youtube on my TV, and it was not good. It did sound great on my iMac so.

As such, it is important to experiment and find what works best for you. But ultimately, by monitoring your mixes through a near-field system and also considering other options, you can ensure that your mixes are intelligible and sound their best.

Authenticity – Acceptable Or Harmful

In filmmaking, sound is often one of the most overlooked aspects. However, it can be just as important as the visual elements in setting the tone and creating an immersive experience. This is why many filmmakers choose to use sound design to enhance their films. Sound design is the process of creating or selecting sounds that are used in a film. These sounds can be anything from dialogue and music to Foley effects and ambiance.

By carefully crafting the soundscape of a film, filmmakers can control the emotional impact of their story and create a more realistic or otherworldly atmosphere. Of course, restricting the dynamics of sound can be essential in order to achieve the desired effect.

Realism is not always possible, and sometimes a more abstract or otherworldly approach is necessary in order to capture the essence of a story. Ultimately, it is up to each individual filmmaker to decide how best to use sound in their own films.

TV shows are often mixed with natural dynamics in the dialog, which can make the dialog harder to hear and understand. How you choose to reduce the dialog dynamic range is up to you. One option is to lower the overall volume of the TV show, which keeps the loudest moments from becoming overwhelming. Another option is to use a soundbar or other audio device to help balance the sound.

By placing these devices carefully, you can help keep the dialog clear and easy to understand. Whichever option you choose, test it before settling in to watch your favorite TV show; otherwise, you may find yourself constantly adjusting the volume and missing key parts of the story.

I believe that mixing TV drama is an essential part of the creative process. By mixing different elements of the show, such as dialog, music, and sound effects, the creative team can craft a unique and engaging experience for viewers.

By carefully balancing these elements, the team can create a soundtrack that amplifies the emotion of the scene and helps to draw viewers into the story. In addition, mixing allows the team to control the pacing of the show, ensuring that viewers are not left feeling confused or overwhelmed.

Ultimately, mixing is an essential tool for creating a successful TV drama. Without it, the creative vision of the team would be lost in translation.

The Broadcaster

The word broadcaster is most commonly used to refer to television and radio companies that transmit programs through the airwaves. However, in the age of streaming services, the term has taken on a new meaning.

Broadcasters like Netflix and Amazon Prime don’t have any transmitters, but they are effectively ‘the one’ delivering to ‘the many’, which is the standard definition of a broadcaster.

Unlike traditional broadcasters, they are not subject to the same government regulation, and historically they have not depended on advertisers in the way traditional broadcasters do. This allows them to take risks and innovate in a way that traditional broadcasters cannot. As a result, they have changed the landscape of television and film, and they are redefining what it means to be a broadcaster.

As we all know, there’s nothing worse than getting settled in for a good show only to have to scramble for the remote when the volume gets too loud. Or worse, having to constantly adjust the volume because it keeps fluctuating.

Netflix understands that the last thing you want is for your viewing experience to be interrupted, which is why they actively work to ensure that their content sounds great no matter what kind of environment you’re watching in.

So whether you’re curled up on the couch with your favorite blanket or out and about with your earbuds, you can rest assured that your show will sound just the way it should. And isn’t that the way it should be? After all, the best way to experience your favorite show is without any interruptions.

Netflix Loudness

As any audio engineer knows, one of the most important aspects of a good mix is dynamics. A well-crafted mix will have a wide range of loud and soft sounds, giving the listener an engaging and immersive experience.

Unfortunately, many streaming services apply dynamic range compression to audio mixes, resulting in a muddy and flat sound. This is not the case with Netflix. The company is committed to preserving the creative intent of its audio mixes, and as such, it does not compress or limit the dynamic range of its audio tracks.

This may result in some content sounding quieter than others, but it ultimately provides a better listening experience for customers. Netflix also normalizes all audio deliveries so that all titles play at the same level on the service. This may raise or lower the overall level of some titles, but it ensures consistent playback from one title to the next.

As a result, Netflix is able to provide its customers with high-quality audio that sounds true to the original intent of the mix.

If you are delivering a mix or master for a show that airs on Netflix, the game has changed. There are several important audio quality prerequisites that mix facilities must meet in order to produce high-quality audio content.

Important: For all titles, 5.1 audio is required and 2.0 is optional. In addition, mono audio is only acceptable if the program’s original source is mono and no stereo or 5.1 mix exists. Mono audio must be duplicated on channels 1 & 2 and delivered as 2-channel in order to be properly compatible with all devices. By following these simple guidelines, everyone can enjoy the highest quality audio experience possible.
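As a minimal sketch of the mono rule above, the Python snippet below writes a 2-channel WAV file in which every mono sample is duplicated onto channels 1 and 2, using only the standard library `wave` module. The 16-bit sample width and 48 kHz rate are assumptions for the example, and `mono_to_dual_mono_wav` is a hypothetical helper, not part of any delivery tool.

```python
import io
import struct
import wave


def mono_to_dual_mono_wav(samples, sample_rate=48000):
    """Duplicate 16-bit mono samples onto channels 1 and 2 of a stereo WAV.

    `samples` is a sequence of ints in the 16-bit range. The 16-bit width and
    default 48 kHz rate are assumptions for this sketch. Returns WAV bytes.
    """
    frames = bytearray()
    for s in samples:
        # Each stereo frame holds the same sample twice: channel 1, channel 2.
        frames += struct.pack("<hh", s, s)

    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(2)  # delivered as 2-channel
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))
    return buffer.getvalue()
```

In a real delivery you would use the bit depth and sample rate the spec asks for; the point is simply that every frame carries the same sample twice.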

First, the average loudness must be -27 LKFS +/- 2 LU, dialog-gated. This keeps dialog at a consistent level from title to title, so viewers do not have to reach for the remote. Additionally, peaks must not exceed -2 dBTP (True Peak). This leaves headroom so that inter-sample peaks, which can grow when the audio is encoded with a lossy codec and decoded on the viewer’s device, do not clip.
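The two numeric limits can be turned into a simple pass/fail check. This sketch assumes you already have a dialog-gated loudness reading and a true-peak reading from a compliant meter; `check_netflix_levels` is a hypothetical helper that only compares those numbers against -27 LKFS +/- 2 LU and -2 dBTP.

```python
def check_netflix_levels(dialog_loudness_lkfs, true_peak_dbtp,
                         target=-27.0, tolerance=2.0, peak_ceiling=-2.0):
    """Return a list of problems; an empty list means both limits are met.

    The inputs must come from a BS.1770-compliant meter with dialog gating;
    this function does not measure anything itself.
    """
    problems = []
    if abs(dialog_loudness_lkfs - target) > tolerance:
        problems.append(
            f"dialog-gated loudness {dialog_loudness_lkfs:.1f} LKFS is outside "
            f"{target:.0f} +/- {tolerance:.0f} LU")
    if true_peak_dbtp > peak_ceiling:
        problems.append(
            f"true peak {true_peak_dbtp:.1f} dBTP exceeds {peak_ceiling:.0f} dBTP")
    return problems
```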

Finally, loudness should be measured over the full program according to ITU-R BS.1770-1. Measuring the entire program, rather than an excerpt, ensures that the reading reflects the title as a whole. By meeting these prerequisites, mix facilities can produce high-quality audio content that sounds great and is easy to understand.
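To make the measurement concrete, here is a rough Python sketch of an ungated, full-program BS.1770-1 style measurement: each channel is K-weighted with the two 48 kHz biquad filters published in the standard, the mean square is taken over the whole program, the channels are summed with their weights (surrounds at 1.41, LFE excluded), and the result is converted to LKFS. It does not reproduce the dialog gating Netflix specifies, only works at 48 kHz, and is far slower than a real meter.

```python
import math

# K-weighting coefficients for 48 kHz from ITU-R BS.1770.
# Stage 1: high-shelf pre-filter (models the acoustic effect of the head).
PRE_B = (1.53512485958697, -2.69169618940638, 1.19839281085285)
PRE_A = (1.0, -1.69065929318241, 0.73248077421585)
# Stage 2: RLB high-pass filter.
RLB_B = (1.0, -2.0, 1.0)
RLB_A = (1.0, -1.99004745483398, 0.99007225036621)

# Channel weights; the LFE channel is not included in the measurement.
CHANNEL_WEIGHTS = {"L": 1.0, "R": 1.0, "C": 1.0, "Ls": 1.41, "Rs": 1.41}


def biquad(x, b, a):
    """Direct-form I biquad: y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for s in x:
        out = b[0] * s + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, s
        y2, y1 = y1, out
        y.append(out)
    return y


def ungated_loudness(channels):
    """Whole-program loudness in LKFS, without gating.

    `channels` maps names from CHANNEL_WEIGHTS to lists of float samples at
    48 kHz; other keys (for example "LFE") are ignored.
    """
    total = 0.0
    for name, samples in channels.items():
        weight = CHANNEL_WEIGHTS.get(name)
        if weight is None or not samples:
            continue
        k_weighted = biquad(biquad(samples, PRE_B, PRE_A), RLB_B, RLB_A)
        mean_square = sum(v * v for v in k_weighted) / len(k_weighted)
        total += weight * mean_square
    if total == 0.0:
        return float("-inf")  # digital silence
    return -0.691 + 10 * math.log10(total)
```

A full-scale 997 Hz sine in a single front channel should read about -3.01 LKFS, which is a handy sanity check for any meter.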

Any audio recording that is going to be used in sync with picture must be recorded at the native project frame rate. This ensures that the audio does not drift out of sync with the picture.

All audio deliverables should therefore match the camera and picture post-production frame rate. In addition, all audio must be synced to the final IMF/ProRes picture delivery. Alternate/dubbed language and audio description mixes should not contain an additional leader. Printmasters, M&Es, and stems may include a standard 8-second Academy leader and 2-pop. By following these guidelines, you can be sure that your audio will stay in sync with the picture.
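Matching the frame rate matters because audio positions are ultimately counted in samples. The sketch below, which assumes 48 kHz audio, converts frame numbers to sample offsets using exact fractions. `two_pop_start` is a hypothetical helper that assumes the 2-pop sits two seconds’ worth of whole frames (2 × the nominal frame rate) before the first frame of action.

```python
from fractions import Fraction

SAMPLE_RATE = 48000  # assumed delivery sample rate


def samples_per_frame(fps, sample_rate=SAMPLE_RATE):
    """Exact number of audio samples per picture frame, as a Fraction.

    Pass fractional rates exactly, e.g. Fraction(24000, 1001) for 23.976 fps.
    """
    return Fraction(sample_rate) / Fraction(fps)


def frame_to_sample(frame, fps, sample_rate=SAMPLE_RATE):
    """Sample offset at which a given 0-based frame starts, rounded down."""
    return int(frame * samples_per_frame(fps, sample_rate))


def two_pop_start(ffoa_frame, nominal_fps, fps, sample_rate=SAMPLE_RATE):
    """Hypothetical helper: sample where the 2-pop starts, assuming it sits
    2 * nominal_fps frames before the first frame of action (FFOA)."""
    return frame_to_sample(ffoa_frame - 2 * nominal_fps, fps, sample_rate)
```

At 23.976 fps each frame lasts exactly 2002 samples rather than 2000, so audio conformed at the wrong rate drifts by 2 samples every frame. At 29.97 fps a frame is 1601.6 samples, which is why the sketch keeps exact fractions instead of floats. With an 8-second leader at 24 fps, the first frame of action is frame 192, so the pop would start at frame 144.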

The following loudness range (LRA) values will play best on the service:

- 5.1 program LRA between 4 and 18 LU

- 2.0 program LRA between 4 and 18 LU

- Dialog LRA of 10 LU or less
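The loudness range figures above can be checked with a sketch like the one below. It follows the outline of EBU Tech 3342 and assumes you already have a series of short-term (3-second) loudness values: values at or below -70 LUFS are discarded, a relative gate is set 20 LU below the power average of the rest, and LRA is the spread between the 10th and 95th percentiles. The percentile indexing is a simplification, and `netflix_lra_ok` is a hypothetical helper for the 4 to 18 LU window.

```python
import math


def loudness_range(short_term_lufs):
    """Loudness range (LU) from short-term (3 s) loudness values, Tech 3342 style."""
    # Absolute gate: ignore near-silence.
    gated = [v for v in short_term_lufs if v > -70.0]
    if not gated:
        return 0.0
    # Relative gate: 20 LU below the power average of the remaining values.
    mean_power = sum(10 ** (v / 10) for v in gated) / len(gated)
    relative_gate = 10 * math.log10(mean_power) - 20.0
    kept = sorted(v for v in gated if v > relative_gate)
    # Spread between the 10th and 95th percentiles (simple index rounding).
    n = len(kept)
    low = kept[round((n - 1) * 0.10)]
    high = kept[round((n - 1) * 0.95)]
    return high - low


def netflix_lra_ok(lra):
    """Hypothetical helper: True if a program LRA falls within 4 to 18 LU."""
    return 4.0 <= lra <= 18.0
```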

When near-field 5.1 mixes measure at less than 15% dialog, program-gated measurement will be used instead (-24 LKFS +/- 2 LU, ITU-R BS.1770-3).
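Program-gated measurement, as introduced in BS.1770-2, works on overlapping 400 ms blocks. The sketch below assumes the channel-weighted, K-weighted mean square of each block has already been computed; it applies the -70 LKFS absolute gate and a relative gate 10 LU below the absolute-gated level, then averages what is left. `netflix_target` is a hypothetical helper encoding the 15% dialog rule quoted above.

```python
import math

ABSOLUTE_GATE_LKFS = -70.0
RELATIVE_GATE_LU = -10.0


def to_lkfs(power):
    """Convert a channel-weighted mean square to LKFS."""
    return -0.691 + 10 * math.log10(power) if power > 0 else float("-inf")


def gated_loudness(block_powers):
    """Program-gated integrated loudness in LKFS (BS.1770-2/-3 style gating).

    `block_powers` holds one channel-weighted mean square of K-weighted audio
    per 400 ms block (blocks overlap by 75 %); computing them is not shown.
    """
    # Stage 1: the absolute gate removes near-silent blocks.
    loud = [p for p in block_powers if to_lkfs(p) > ABSOLUTE_GATE_LKFS]
    if not loud:
        return float("-inf")
    # Stage 2: the relative gate sits 10 LU below the level of the surviving blocks.
    threshold = to_lkfs(sum(loud) / len(loud)) + RELATIVE_GATE_LU
    kept = [p for p in loud if to_lkfs(p) > threshold]
    return to_lkfs(sum(kept) / len(kept))


def netflix_target(dialog_fraction):
    """Hypothetical helper: measurement mode and target for the 15 % dialog rule."""
    if dialog_fraction < 0.15:
        return "program-gated", -24.0
    return "dialog-gated", -27.0
```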

For Lo/Ro fold-downs (stereo downmixes), the ITU-R BS.775-1 5.1-to-stereo downmix, with the LFE muted, is the international standard.
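The BS.775 fold-down itself is a pair of weighted sums. In this minimal sketch the centre and surround channels are each mixed in at -3 dB (a factor of 1/sqrt(2)) and the LFE is ignored. It works on one sample frame at a time, and a real fold-down would also need some attenuation or limiting, not shown here, because the summed signal can exceed full scale.

```python
import math

DOWNMIX_GAIN = 1 / math.sqrt(2)  # -3 dB, applied to both centre and surrounds


def lo_ro_downmix(left, right, centre, lfe, left_surround, right_surround):
    """Fold one 5.1 sample frame down to Lo/Ro stereo (ITU-R BS.775 equations).

    `lfe` is accepted only so a full 5.1 frame can be passed in; it is
    deliberately unused (LFE muted).
    """
    lo = left + DOWNMIX_GAIN * centre + DOWNMIX_GAIN * left_surround
    ro = right + DOWNMIX_GAIN * centre + DOWNMIX_GAIN * right_surround
    return lo, ro
```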

Mastering for Streaming Services – Guidelines & Recommendations

As the way we consume music continues to evolve, it’s important to make sure that your music is sounding its best on all platforms. That’s why we’ve put together a list of guidelines and recommendations for mastering your music for streaming services.

When preparing your master for streaming services, there are a few things to keep in mind:

- The final mix should be a standard two-channel stereo file, with all panning already printed into the mix rather than carried as separate panning information.

- All audio should be properly normalized.

- The audio should be free of any clipping or distortion.

- The audio should have a consistent loudness level throughout.

- The audio should be encoded using a lossless codec such as FLAC or ALAC.

By following these guidelines, you can be sure that your music will sound its best on all streaming platforms.

Conclusion

The problem with the loudness war is that it leads to audio that is artificially boosted in level, usually through heavy compression and limiting, which can cause distortion and other artifacts. Additionally, when music is pushed this hard, the contrast between its quieter and louder moments is lost. To reduce the impact of the loudness war, producers and engineers can avoid boosting levels unnecessarily. Listeners can also adjust their playback levels so that they are not unduly influenced by loudness levels. Finally, broadcasters and streaming services can adopt loudness normalization based on standards such as EBU R128, which uses the ITU-R BS.1770 measurement, to ensure that all content is played back at a consistent level. By taking these measures, we can help to reduce the impact of the loudness war and improve the overall quality of audio.

So there you have it: everything you need to know about the loudness war and how to avoid its pitfalls when mastering your music for streaming services.

The Loudness War FAQ – Everything You Need to Know About The Loudness War

What is the loudness war?

The loudness war refers to the practice of competing to make one record sound louder in relation to others. This long-standing practice is usually claimed to be the consequence of an observation made in the 1950s that people tended to play the louder-cut records in jukeboxes more often.

Why is the loudness war a problem?

The loudness war is a problem because it leads to audio that is artificially boosted in level, usually through heavy compression and limiting, which can cause distortion and other artifacts. Additionally, when music is boosted this way, the difference between its quieter and louder moments shrinks, so the music loses impact and contrast.

What can be done to reduce the impact of the loudness war?

There are several things that can be done to reduce the impact of the loudness war. First, producers and engineers can avoid boosting levels unnecessarily. Second, listeners can adjust their playback levels so that they are not unduly influenced by loudness levels. Finally, broadcasters and streaming services can adopt loudness normalization based on standards such as EBU R128 and ITU-R BS.1770 to ensure that all content is played back at a consistent level.

What are some Loudness Normalization standards?

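Well-known standards include EBU R128, which sets a programme target of -23 LUFS, and ITU-R BS.1770, which defines how loudness and true peak are measured. Others, such as ATSC A/85 in the US (-24 LKFS), build on the same BS.1770 measurement. These standards help ensure that all content is played back at a consistent level, which can help to reduce the impact of the loudness war.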

Does Netflix normalize audio?

Yes. Netflix measures loudness according to ITU-R BS.1770 and normalizes to its own dialog-gated target of -27 LKFS, rather than to the -23 LUFS target of EBU R128. This ensures that all content is played back at a consistent level, which can help to reduce the impact of the loudness war.

What is the difference between LKFS and LUFS?

Despite the different names, LKFS and LUFS are the same unit. LKFS is the term used by the ITU and LUFS the term used by the EBU; both measure loudness on the same scale, and one loudness unit (LU) is equivalent to one dB. So when broadcast standards quote targets such as -24 LKFS (ATSC A/85 in the US) or -23 LUFS (EBU R128 in Europe), the difference lies in the target level, not the unit.

Is Netflix audio compressed?

Yes. Netflix streams audio with lossy data compression (using codecs such as Dolby Digital Plus), but it aims for perceptually transparent compression: by delivering considerably higher audio bitrates, it can produce audio that listeners cannot distinguish from the original master.

How do you get 5.1 on Netflix?

During playback, select “Audio & Subtitles” from the menu, then choose an audio track labelled 5.1. The 5.1 option is only offered when the title, your device, and your audio system support it; if your AV receiver supports surround sound, make sure the device is set to pass it through. On most devices, the Netflix app then switches to the selected track automatically.

What is audio LKFS?

LKFS (Loudness, K-weighted, relative to full scale) is a measure of loudness that attempts to take into account the way our ears perceive sound. It is often used as a measure of audio levels in broadcast and online video.

What is the best audio format for Netflix?

The best audio format for Netflix is Dolby Atmos. Dolby Atmos creates an immersive soundscape that envelops you in the action on screen. It’s the next best thing to being there.

How do I get better audio quality on Netflix?

There are several things you can do to improve the audio quality on Netflix:

– Use a high-speed internet connection (at least 25 Mbps).

– Use an HDMI cable to connect your TV or streaming player to your sound system.

– If you’re using a streaming player, make sure it’s connected directly to your TV.

– Check your audio settings in the Netflix app. Make sure Dolby Atmos is turned on and that you’re not using Surround Sound mode.

What is EBU R128?

EBU R128 is a loudness normalization recommendation developed by the European Broadcasting Union. It sets a programme target of -23 LUFS, measured with the ITU-R BS.1770 algorithm, and is widely used by European broadcasters to ensure that all content is played back at a consistent level.

What is ITU-R BS.1770?

ITU-R BS.1770 is a standard for measuring loudness and true-peak level, developed by the International Telecommunication Union. It is used by many broadcasters and online video platforms, including Netflix, as the measurement behind their loudness targets, so that all content can be played back at a consistent level.

What is audio compression?

Audio compression can mean two different things. Dynamic range compression reduces the difference between the loud and quiet parts of a signal; it is used as a creative tool to change the sound, and, when overused, it is the main weapon of the loudness war. Data compression, performed by codecs such as FLAC or MP3, reduces the amount of data needed to store or transmit the signal.
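To show what dynamic range compression does to levels, here is the static gain curve of a simple hard-knee downward compressor. The threshold and ratio defaults are arbitrary example values, and real compressors add attack, release, and knee behaviour that this sketch leaves out.

```python
def compressor_gain_db(input_db, threshold_db=-20.0, ratio=4.0):
    """Gain change (dB) applied by a hard-knee downward compressor.

    Below the threshold the signal is untouched; above it, every `ratio` dB
    of input rise produces only 1 dB of output rise.
    """
    if input_db <= threshold_db:
        return 0.0
    output_db = threshold_db + (input_db - threshold_db) / ratio
    return output_db - input_db
```

With these settings, a -10 dB peak is pulled down to -17.5 dB while a -30 dB passage is untouched, so a 20 dB difference between loud and quiet shrinks to 12.5 dB.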

Is Dolby Atmos better than Dolby Surround?

Yes. Dolby Atmos adds height channels and object-based sound, creating an immersive soundscape that envelops you in the action on screen. Dolby Surround is an older surround format developed by Dolby Laboratories that uses a matrix encoding scheme to encode multiple channels of audio into a single stereo signal.

What is the difference between lossy and lossless audio?

Lossy audio compression codecs reduce the size of an audio file by discarding some of the information in the original signal. This results in a lower quality signal, but one that requires less storage space or bandwidth. Lossless audio compression codecs preserve all of the information in an audio signal, but still result in a smaller file size than the original.

What is perceptual transparency?

Perceptual transparency is the ability of an audio codec to encode an audio signal without introducing any audible artifacts. In other words, the encoded signal should sound identical to the original.

What is audio bitrate?

The audio bitrate is the number of bits per second (bps) used to represent an audio signal. For a given codec, a higher bitrate generally means better sound quality. However, higher bitrates also require more storage space or bandwidth.
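For uncompressed PCM, bitrate is simple arithmetic: sample rate × bit depth × number of channels. The helper below is a trivial sketch of that formula; for lossy codecs, the bitrate is chosen by the encoder settings instead.

```python
def pcm_bitrate(sample_rate, bit_depth, channels):
    """Bits per second of uncompressed PCM audio."""
    return sample_rate * bit_depth * channels
```

CD audio (44.1 kHz, 16-bit, stereo) works out to 1,411,200 bps, roughly 1411 kbps.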

What is FLAC?

FLAC (Free Lossless Audio Codec) is a lossless audio compression codec that preserves all of the information in an audio signal. FLAC files are typically much larger than files encoded with lossy codecs, but they provide excellent sound quality.

What is loudness normalization?

Loudness normalization is the process of adjusting the volume of an audio signal to make it sound consistent with other signals. This can be done in order to avoid having too much variation between different pieces of content, or to make sure that all content is audible. Loudness normalization standards, such as EBU R128 and ITU-R BS.1770, are used by many broadcasters and online video platforms to ensure that all content is played back at a consistent level.
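Normalization is a single static gain, so it changes the overall level without touching the dynamics. This sketch computes the gain needed to move a measured loudness onto a target and applies it to a list of float samples. It assumes the loudness has already been measured, reports sample peak rather than true peak, and does not limit anything.

```python
import math


def normalization_gain_db(measured_lufs, target_lufs):
    """Gain (dB) that moves a measured loudness onto the target."""
    return target_lufs - measured_lufs


def normalize(samples, measured_lufs, target_lufs):
    """Apply one static gain so the program lands on the target loudness.

    Returns the scaled samples and the resulting sample peak in dBFS, so the
    caller can see whether turning the program up pushed peaks too high.
    """
    gain = 10 ** (normalization_gain_db(measured_lufs, target_lufs) / 20)
    out = [s * gain for s in samples]
    peak = max((abs(s) for s in out), default=0.0)
    peak_dbfs = 20 * math.log10(peak) if peak > 0 else float("-inf")
    return out, peak_dbfs
```

For example, a master measured at -9 LUFS played on a hypothetical service targeting -14 LUFS would simply be turned down by 5 dB, so pushing it louder gains nothing.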

What is perceptual loudness?

Perceptual loudness is the subjective experience of loudness, which can vary depending on the sound pressure level, frequency response, and other factors.

What is A-weighting?

A-weighting is a frequency weighting that is used to measure the perceived loudness of sound. A-weighted decibels (dBA) are a unit of measurement that take into account the way our ears perceive sound at different frequencies.
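The A-weighting curve has a closed-form definition in IEC 61672-1, sketched below. Note that this is not the weighting used for loudness metering in ITU-R BS.1770, which uses K-weighting instead.

```python
import math


def a_weighting_db(f):
    """A-weighting gain in dB at frequency f (Hz), per the IEC 61672-1 formula."""
    f2 = f * f
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20 * math.log10(ra) + 2.00  # +2.00 dB normalizes the curve to 0 dB at 1 kHz
```

The curve is about 0 dB at 1 kHz and about -19.1 dB at 100 Hz, which shows how strongly it discounts low frequencies.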

What is true peak level?

True peak level is the highest level of the continuous, reconstructed waveform, including peaks that fall between samples (inter-sample peaks). Because these can exceed the highest sample value, true peak is a more accurate measure of a signal’s peak level than sample peak, and it is what limits such as Netflix’s -2 dBTP refer to. It measures peak level, not loudness.
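A true-peak meter estimates the reconstructed waveform by oversampling. The sketch below approximates this with 4x oversampling through a Hann-windowed sinc interpolator; ITU-R BS.1770 specifies its own interpolation filter, so treat this as an illustration rather than a compliant meter.

```python
import math


def true_peak_estimate(samples, oversample=4, half_taps=16):
    """Approximate true peak (linear) using oversampled windowed-sinc interpolation.

    Samples outside the buffer are treated as zero. This is an illustration,
    not the interpolation filter specified in ITU-R BS.1770.
    """
    n = len(samples)
    peak = max((abs(s) for s in samples), default=0.0)
    for i in range(n):
        for k in range(1, oversample):
            t = i + k / oversample  # a position between samples i and i + 1
            acc = 0.0
            for j in range(max(0, i - half_taps + 1), min(n, i + half_taps + 1)):
                x = t - j  # never zero, because t is never an integer
                window = 0.5 + 0.5 * math.cos(math.pi * x / half_taps)  # Hann
                acc += samples[j] * window * math.sin(math.pi * x) / (math.pi * x)
            peak = max(peak, abs(acc))
    return peak
```

A sine at a quarter of the sample rate with a 45° phase offset has every sample at about 0.707 (-3 dBFS), yet its true peak is close to 1.0 (about 0 dBTP), so a sample-peak meter would miss roughly 3 dB.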

What is dithering?

Dithering is the process of adding a small amount of random noise to an audio signal before reducing its bit depth (for example, from 24-bit to 16-bit). Without dither, the quantization error created by this reduction is correlated with the signal and is heard as distortion; dither turns it into a constant, benign noise floor and preserves low-level detail.
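Here is a minimal sketch of requantizing to 16-bit with TPDF (triangular) dither, a common choice: two independent uniform values of +/-0.5 LSB are added before rounding. Noise shaping, which many mastering tools also apply, is not included, and the `rng` parameter exists only to make the example reproducible.

```python
import random


def to_16_bit(samples, dither=True, rng=None):
    """Requantize float samples in [-1.0, 1.0] to 16-bit integers.

    With dither=True, TPDF dither (the sum of two independent uniform values
    of +/-0.5 LSB) is added before rounding.
    """
    if rng is None:
        rng = random.Random()
    out = []
    for s in samples:
        value = s * 32767
        if dither:
            value += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        q = round(value)
        out.append(max(-32768, min(32767, q)))  # clamp to the 16-bit range
    return out
```

With dither, a signal of only a quarter of an LSB survives on average in the output, whereas without dither it rounds to silence.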

What is headroom?

Headroom is the amount of space between the peak level of an audio signal and the point at which clipping (distortion) occurs. Headroom allows for transients (sudden changes in volume) in an audio signal without causing clipping.
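Headroom is just the difference in dB between a ceiling and the peak level. A sketch, assuming linear peak values where 1.0 is full scale:

```python
import math


def dbfs(linear_peak):
    """Convert a linear peak (1.0 = full scale) to dBFS."""
    return 20 * math.log10(linear_peak)


def headroom_db(linear_peak, ceiling_dbfs=0.0):
    """Headroom remaining between a peak and a ceiling, in dB."""
    return ceiling_dbfs - dbfs(linear_peak)
```

A peak of 0.5 sits at about -6 dBFS, leaving about 6 dB of headroom to full scale but only about 4 dB below a -2 dBTP delivery ceiling (true peak and sample peak are treated as equal here for simplicity).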

What is analog-to-digital conversion?

Analog-to-digital conversion is the process of converting an analog signal (such as the audio played back from a vinyl record or tape) into a digital format (such as a CD or an MP3 file).

Why is the loudness war still happening?

Even though the Loudness War has been going on for over 70 years, it’s still happening today. The reason for this is that people tend to like music that is louder than average. This preference for loudness can be traced back to the early days of radio, when people would turn up the volume in order to hear faint signals. Over time, this preference has become ingrained in our culture, and now many people expect music to be loud.

Which is louder, LUFS or RMS?

Neither is “louder”: they are different ways of measuring the same signal. RMS is a reasonably precise measure of average signal level, but LUFS is generally preferable as the standard loudness unit because it applies frequency weighting and gating to reflect how loud the music is perceived to be, rather than just the overall signal level.
