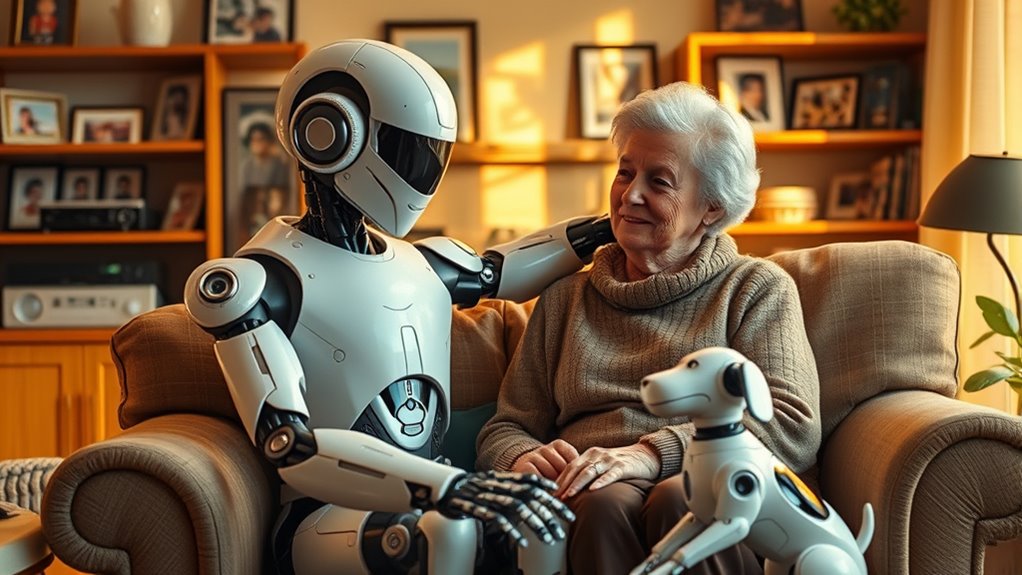

When machines become family, ethical questions emerge about trusting AI with your feelings. As you rely more on emotional AI, you might wonder whether these interactions are genuine or merely programmed responses. Such systems blur the line between authentic human connection and synthetic support, raising concerns about authenticity and dehumanization. If you want to understand how this affects genuine relationships and what's at stake ethically, read on.

Key Takeaways

- Emotional outsourcing to machines raises questions about authenticity and genuine human connection.

- Dependence on AI for emotional needs may diminish the importance of real relationships and empathy.

- Ethical concerns include potential manipulation, exploitation, and loss of privacy in AI-driven emotional support.

- Society must consider the impact of replacing or supplementing human care with artificial interactions.

- Balancing technological benefits with the preservation of authentic emotional experiences is crucial.

As technology advances, more people turn to emotional outsourcing: seeking safety, love, and approval from external sources such as AI systems or other people, especially when authentic connections are lacking. You might find yourself relying on AI-powered chatbots or virtual companions to meet emotional needs that real relationships can't always satisfy. These systems use natural language processing and sentiment analysis to infer your feelings from what you write and to respond in ways that seem empathetic. It's easy to see why this feels comforting, especially when human connections are scarce or hard to maintain. Companies have capitalized on this trend, offering increasingly sophisticated emotional AI that can serve as a confidant, romantic partner, or support system around the clock.

You may appreciate the instant availability and consistency AI provides: no more waiting for a friend or therapist to be free. Outsourcing emotional labor, such as writing sympathy messages or working through grief, relieves you of some mental burden. For businesses, it means cost savings, efficiency, and the ability to deliver uniform responses that come across as caring and understanding. These benefits are appealing, especially in a culture that encourages external validation over cultivating self-trust. Yet normalizing comfort from machines can reinforce the idea that your emotional needs are best met by external, automated sources. Entertainment technology such as home theatre projectors has also made it easier to immerse yourself in digital worlds, adding yet another source of emotional comfort.

AI’s instant support and consistent responses ease emotional burdens, but may reinforce reliance on external validation over self-trust.

But beneath this convenience lies a critical ethical debate. Relying on AI for emotional support calls the authenticity of your interactions into question. If the responses are preprogrammed or formulaic, can they reflect genuine empathy? There's a risk that you come to prefer machine responses over human ones, further devaluing real relationships. Replacing human emotional labor with AI can also contribute to dehumanization, reducing meaningful, shared human experiences to transactions. And companies may exploit emotional AI to influence your behavior, raising concerns about manipulation and consent.

In the end, outsourcing your emotional needs to machines offers immediate relief and accessibility, but it also prompts important questions about authenticity, human connection, and the societal impact of replacing genuine empathy with artificial responses. As you navigate this evolving landscape, it’s essential to weigh whether these digital interactions truly serve your well-being or if they come at the cost of deeper, authentic relationships.

Frequently Asked Questions

Can Emotional Outsourcing Replace Human Relationships Entirely?

No, emotional outsourcing can’t fully replace human relationships. While AI companions can offer comfort and companionship, they lack genuine emotional intelligence, empathy, and the ability to grow through challenges. You need real human connections for love, accountability, and personal development. Relying solely on AI risks emotional stunting and dependence, so it’s crucial to maintain authentic relationships that foster trust, understanding, and growth.

What Are the Long-Term Psychological Effects of Emotional Outsourcing?

Emotional outsourcing can leave you feeling like a ship lost at sea. Over time, you may experience emotional exhaustion, burnout, and a growing sense of detachment from your own feelings. Trust issues and psychological strain can deepen, making it harder to connect authentically with others. Without proper support, these effects can erode your mental well-being and leave you less resilient when life gets stormy.

How Do Cultural Differences Impact Perceptions of Emotional Outsourcing?

Cultural differences shape how people perceive emotional outsourcing. In Western cultures, which often value open emotional sharing, people may be more comfortable treating machines as expressive partners. In many Eastern cultures, which tend to favor restraint and emotional regulation, emotional outsourcing may instead be seen as intrusive. These are broad tendencies rather than rules: your own norms around expression, harmony, and social roles influence whether you embrace or resist using machines for emotional support.

Are There Legal Protections for Users of Emotional AI Companions?

In some jurisdictions, yes, though protections are limited and still evolving. New laws, such as New York's, require operators of AI companions to implement safety measures, disclose that the AI is not human, and have protocols for responding to signs of suicidal ideation. Some states also mandate mental health oversight and clear protocols. However, enforcement mainly falls to government agencies rather than individual users, and gaps remain around emotional dependency and manipulation risks.

How Does Emotional Outsourcing Affect Societal Notions of Authenticity?

Have you ever wondered whether societal expectations shape what we consider authentic? Emotional outsourcing can shift your focus from your true feelings to external validation, leading you to mask your authentic self. This practice reinforces social norms that prioritize harmony over honesty, making genuine connection elusive. Over time, it can weaken your trust in your inner voice and normalize people-pleasing, distorting society's sense of what it means to be truly authentic.

Conclusion

As you navigate a world where machines become family, remember that emotional outsourcing means handing your heart to something as fragile as a glass statue, so proceed with care. Technology can fill gaps and offer comfort, but it's essential to ask whether these digital friends can truly understand you. In this delicate dance, you hold the power to decide what's real and what's only a shadow, so protect your humanity from becoming a mere echo.