To spot deepfakes, look for unnatural eye movements, inconsistent facial features, and irregular blinking. Audio cues such as strange pauses or voice distortions can also be signs. Examining metadata and digital footprints helps verify a video’s authenticity, although both can be manipulated. Detection tools and techniques have limits, especially against sophisticated deepfakes. To identify fakes with confidence, you need to know which signals are trustworthy and keep up with evolving detection methods. The sections below cover each in turn.

Key Takeaways

- No single detection method guarantees 100% accuracy; combining visual, audio, and metadata analysis improves reliability.

- Inconsistent eye movements, unnatural micro-expressions, and mismatched lip syncing are strong visual deepfake indicators.

- Unusual audio cues like irregular pitch or robotic speech, alongside background noise discrepancies, suggest manipulation.

- Metadata inconsistencies, such as creation dates or device info, can help verify media authenticity but should be cross-verified.

- Always use multiple signals and remain cautious, as sophisticated deepfakes can bypass individual detection cues.

As an affiliate, we earn on qualifying purchases.


What Are Deepfakes and Why Do They Matter?

Deepfakes are highly realistic manipulated videos or images created with artificial intelligence techniques, and they are often difficult to distinguish from genuine content. Because they can convincingly mimic real people, they can be used for misinformation, manipulation, or harm, which makes verifying authenticity both harder and more important. This raises the bar for media literacy: viewers need the skills to recognize signs of manipulation and to tell credible information from fabricated media. Ethical considerations also come into play, since anyone who creates or shares a deepfake must weigh the impact on individuals’ privacy and reputation. As the technology grows more sophisticated, staying informed about AI-driven manipulation techniques, viewing critically, sharing responsibly, and using detection tools where available all help protect content integrity.


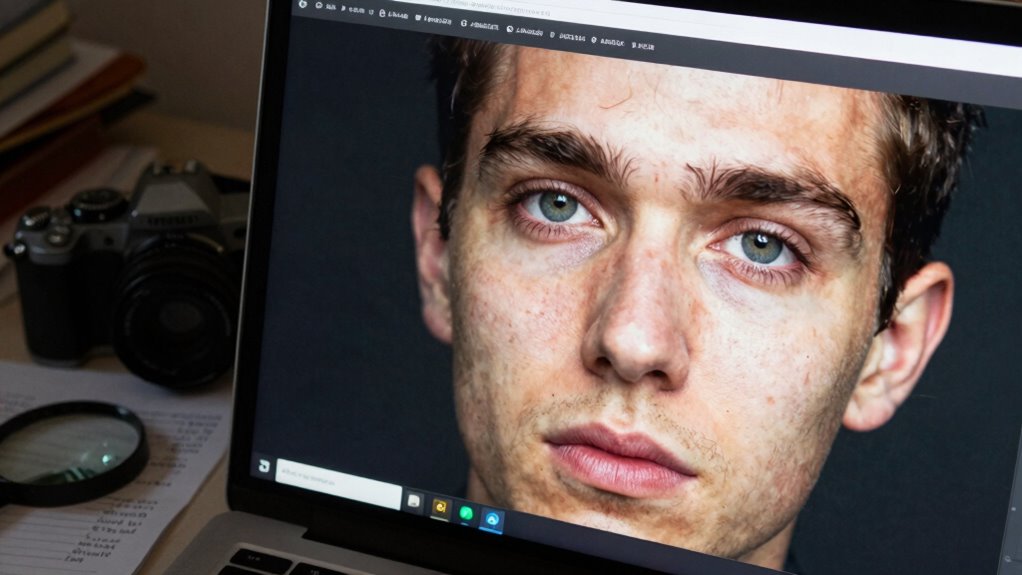

Key Visual Cues to Spot Deepfake Videos

You can often spot deepfakes by paying attention to eye movements that seem unnatural or out of sync. Irregularities in facial details, such as uneven skin texture, an unnatural glow, or distorted features, are also warning signs. Technical indicators such as lighting inconsistencies, shadow errors, and poor audio-visual synchronization further support detection. Recognizing these cues helps you flag manipulated videos quickly, though none of them is conclusive on its own.

Inconsistent Eye Movements

Inconsistent eye movements are often a telltale sign of deepfake videos because artificial intelligence struggles to replicate natural gaze patterns perfectly. When watching a deepfake, you might notice eye movement that is unnaturally jerky or oddly synchronized, disrupting the flow of genuine gaze shifts. These videos often fail to mimic realistic eye behavior, such as smooth tracking or natural blinking patterns. Pay attention to how the eyes move in relation to the scene; gaze that seems disconnected from or inconsistent with the context is a warning sign. Authentic eye movement aligns with facial expressions and head turns, so deviations deserve a closer look. Recognizing these subtle cues can help you distinguish real footage from manipulated content more effectively.
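
One common way to turn blinking into a number is the eye aspect ratio (EAR), computed from six landmarks around each eye. The sketch below assumes the landmarks already come from a face-landmark detector (not shown) and are ordered corner, upper lid, upper lid, corner, lower lid, lower lid. The 0.2 threshold and the two-frame minimum are illustrative assumptions, not calibrated values.

```python
import math


def eye_aspect_ratio(eye):
    """Return the eye aspect ratio for six (x, y) landmarks p1..p6.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    p6 and p5 lie on the lower lid directly below p2 and p3.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, closed_threshold=0.2, min_closed_frames=2):
    """Count runs of consecutive frames whose EAR is below the threshold.

    Both default values are assumptions for illustration only.
    """
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            run += 1
        else:
            if run >= min_closed_frames:
                blinks += 1
            run = 0
    if run >= min_closed_frames:  # the clip ended while the eye was closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps):
    """Convert a per-frame EAR series into a blink rate."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series) / minutes if minutes > 0 else 0.0
```

A blink rate that differs sharply from what the same person shows in verified footage is a reason to look closer, not proof of manipulation.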

Irregular Facial Details

Irregular facial details often reveal deepfake videos because artificial intelligence struggles to replicate the subtle nuances of genuine facial features. Either extreme can be a warning sign: one side of the face not matching the other, or a face that looks oddly symmetrical. Expressions may seem forced, exaggerated, or inconsistent, because deepfakes often lack the natural muscle movements and micro-expressions that make real faces believable. Other irregularities include uneven blinking, mismatched lip movements, and unnatural eye contact. These details are difficult for AI to perfect, so developing an eye for them, alongside video authentication methods and contextual checks on the source, makes spotting deepfakes more reliable.


How to Detect Audio Manipulations in Deepfakes

Detecting audio manipulation in deepfakes requires careful analysis of subtle acoustic cues that often go unnoticed. Audio forensic techniques help identify inconsistencies such as unnatural pauses, irregular pitch, or implausible background noise. Listen closely for anomalies in speech timing or voice quality, which may indicate manipulation. Voice synthesis technology, while advanced, sometimes produces artifacts such as robotic intonation or distorted consonants that stand out on careful inspection. You can also compare the audio against known samples or original recordings to spot discrepancies. Pay attention to lip movements and background sounds, which may not align with the speech in manipulated recordings. Sound quality varies with the recording environment and device, so take those into account before treating a difference as suspicious. Examining the frequency spectrum of the audio can also reveal unnatural patterns typical of synthetic audio generation, and researchers have developed tools that analyze spectral anomalies to improve detection precision in forensic investigations.
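
As a rough illustration of inspecting the frequency spectrum, the sketch below measures what fraction of a short audio frame's energy lies at or above a chosen cutoff frequency. It uses a naive discrete Fourier transform from the standard library so it stays self-contained. The function names, the cutoff, and any interpretation of the ratio are assumptions; a meaningful check compares the value against known-genuine recordings of the same speaker and device.

```python
import cmath
import math


def power_spectrum(samples):
    """Naive DFT power spectrum for bins 0..N/2 (only suitable for short frames)."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        spectrum.append(abs(acc) ** 2)
    return spectrum


def high_band_energy_ratio(samples, sample_rate, cutoff_hz):
    """Return the fraction of spectral energy at or above cutoff_hz."""
    n = len(samples)
    spectrum = power_spectrum(samples)
    total = sum(spectrum)
    if total == 0:
        return 0.0  # silent frame: nothing to compare
    high = sum(
        power for k, power in enumerate(spectrum)
        if k * sample_rate / n >= cutoff_hz
    )
    return high / total
```

For real recordings, a fast Fourier transform from a numerical library would replace the naive loop; the quantity being compared stays the same.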


Using Metadata and Digital Footprints to Verify Media

You can often spot inconsistencies in media files by examining metadata, which reveals details about the file’s origin and history. Metadata analysis techniques help you identify signs of tampering or suspicious modifications. Digital footprint verification then confirms whether the media matches its claimed source. Style analysis and checks on lighting and other environmental details can also uncover subtle inconsistencies that indicate alterations or forgeries.

Metadata Analysis Techniques

Metadata analysis techniques are essential tools for verifying media authenticity because they examine the digital footprints left behind during file creation and editing. By scrutinizing metadata, you can uncover details such as creation date, device information, and editing history that aren’t visible in the media itself. These traces can often be tied to specific devices or editing software, offering clues about whether the media has been altered. Specialized tools extract and analyze this data, helping you identify inconsistencies or anomalies indicative of deepfakes or tampering. Keep in mind, though, that metadata can itself be manipulated, so this method works best when combined with other verification techniques and digital forensic tools. Knowing the common metadata manipulation techniques also helps you interpret the data and recognize red flags. Overall, metadata analysis provides a quick, non-invasive way to assess a media file’s origin and integrity.
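
A minimal sketch of such consistency checks follows. It assumes the metadata has already been extracted into a dictionary by some other tool; the keys `created`, `modified`, `device`, and `software`, and the list of editor names, are hypothetical choices for illustration. Both timestamps are assumed to use the same time zone convention.

```python
from datetime import datetime

# Substrings that suggest the file passed through editing software (illustrative list).
EDITING_HINTS = ("photoshop", "premiere", "after effects", "ffmpeg")


def metadata_red_flags(meta):
    """Return a list of warnings for a dict of already-extracted metadata.

    Expected (hypothetical) keys: 'created' and 'modified' as ISO 8601
    strings, plus 'device' and 'software' as free text.
    """
    flags = []
    created = meta.get("created")
    modified = meta.get("modified")
    if not created or not modified:
        flags.append("missing creation or modification timestamp")
    elif datetime.fromisoformat(modified) < datetime.fromisoformat(created):
        flags.append("modified before created")
    if not meta.get("device"):
        flags.append("no capture device recorded")
    software = (meta.get("software") or "").lower()
    if any(hint in software for hint in EDITING_HINTS):
        flags.append(f"editing software tag: {meta['software']}")
    return flags
```

An empty list does not mean the file is authentic, since metadata can be stripped or rewritten; a non-empty list only tells you where to look next.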

Digital Footprint Verification

Building on metadata analysis, verifying a media file’s digital footprint offers a more thorough way to confirm its authenticity. You can examine cryptographic signatures embedded in files, which verify that they haven’t been altered since signing. Analyzing how the content spreads across social media can also reveal inconsistencies; a video that suddenly gains abnormal popularity is not necessarily fake, but it warrants a closer look at where it first appeared. Consider these steps:

- Check for cryptographic signatures to confirm origin.

- Review metadata for creation and modification timestamps.

- Analyze digital footprints across platforms to track sharing patterns.

- Cross-reference content with original sources to spot discrepancies.
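
The simplest version of the first and last steps is comparing a file's hash with a digest published by the original source. The sketch below uses SHA-256 from Python's standard library; the function names are illustrative, and it assumes the publisher has made a hex digest available through a channel you trust.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large videos do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_digest(path, published_hex):
    """Return True if the file's SHA-256 equals the published hex digest."""
    expected = published_hex.strip().lower()
    return hmac.compare_digest(sha256_of_file(path), expected)
```

A match shows the file is byte-identical to what the source hashed. A mismatch is weaker evidence than it looks, because platforms routinely re-encode uploads, which changes the hash without changing what the video depicts.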

Eye and Gaze Tracking: Can They Reveal Fakes?

Eye and gaze tracking have emerged as promising tools for detecting deepfakes, but their effectiveness isn’t absolute. By analyzing eye movement and gaze patterns, you can spot inconsistencies that might indicate a fake. Genuine videos typically show natural, fluid eye behavior, with gaze shifting appropriately based on context. Deepfakes often struggle to replicate these subtle cues accurately, leading to unnatural eye contact or fixed gaze points. However, sophisticated deepfakes are improving, making it harder to rely solely on eye and gaze analysis. While these signals can help flag suspicious content, they shouldn’t be your only method. Combining eye and gaze tracking with other detection techniques provides a more robust approach to identifying deepfakes. Relying solely on these cues might lead to missed fakes or false positives.
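
One simple way to combine signals is a weighted average of per-signal suspicion scores, with a deliberately wide "inconclusive" band. In the sketch below, the signal names, weights, and thresholds are all hypothetical; each score is assumed to lie between 0 (no sign of manipulation) and 1 (strong sign).

```python
def fuse_scores(scores, weights):
    """Weighted average over the signals that are actually present in scores."""
    used = {name: w for name, w in weights.items() if name in scores}
    total = sum(used.values())
    if total == 0:
        raise ValueError("no weighted signals available")
    return sum(scores[name] * w for name, w in used.items()) / total


def verdict(score, low=0.3, high=0.7):
    """Map a fused score to a cautious label; the thresholds are assumptions."""
    if score >= high:
        return "likely manipulated"
    if score <= low:
        return "no strong evidence of manipulation"
    return "inconclusive: verify with the source"


if __name__ == "__main__":
    scores = {"gaze": 0.9, "audio": 0.2, "metadata": 0.4}
    weights = {"gaze": 1.0, "audio": 1.0, "metadata": 2.0}
    print(verdict(fuse_scores(scores, weights)))
```

Here a single alarming gaze score is not enough to produce a "likely manipulated" label when the audio and metadata look ordinary, which reflects the point that no single cue should decide the outcome.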

Top Tools for Deepfake Detection You Should Know

To spot deepfakes effectively, you need to be aware of the top tools available today. These include AI-based detection systems, blockchain verification methods, and visual analysis techniques, each offering unique strengths. Understanding how these tools work can help you better identify manipulated content and protect yourself from deception.

AI-Based Detection Tools

AI-based detection tools have become essential in the fight against deepfakes, offering rapid and often accurate assessments of manipulated media. These tools analyze subtle cues in synthetic media, particularly facial synthesis, to identify fakes. Here are top tools you should know:

- Deepware Scanner: Uses machine learning to detect facial synthesis and inconsistencies in deepfake videos.

- Microsoft Video Authenticator: Analyzes photos and videos and reports a confidence score indicating how likely the media is to have been artificially manipulated.

- Sensity AI: Specializes in detecting synthetic media by analyzing facial movements and visual artifacts.

- Deepfake Detection Challenge (DFDC) models: Open-source models from the public challenge, trained to spot deepfakes through pattern recognition.

These tools help you distinguish genuine content from manipulated media, boosting your confidence in digital authenticity.

Blockchain Verification Methods

Blockchain verification methods have emerged as a promising way to authenticate digital media and combat deepfakes by providing a tamper-evident record of content origin and integrity. Metadata, timestamps, and digital signatures for a media file are recorded on a decentralized ledger, where they are very difficult to alter or forge after the fact. When you access a piece of content, you can then trace its provenance back to the registered creator and check that the file still matches what was recorded. This reduces the risk of encountering manipulated media, but it only shows that a file is unchanged since registration; it cannot show that the original recording was genuine. Used with that limit in mind, blockchain verification strengthens trust in digital media and helps counter misinformation.
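
The tamper-evidence idea can be sketched with a plain hash chain, in which each entry stores the hash of the previous one. This is a single-machine toy, not a decentralized ledger, and the field names are assumptions made for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record):
    """Hash a record deterministically by serializing it with sorted keys."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain, media_digest, creator, timestamp):
    """Append a provenance entry that commits to the previous entry's hash."""
    prev_hash = chain[-1]["entry_hash"] if chain else GENESIS_HASH
    body = {
        "media_digest": media_digest,
        "creator": creator,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    entry = dict(body, entry_hash=record_hash(body))
    chain.append(entry)
    return entry


def chain_is_intact(chain):
    """Recompute every hash and link; any edited entry breaks the check."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev_hash:
            return False
        if record_hash(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True
```

Changing any field of an earlier entry changes its hash, so every later link stops matching. A real blockchain adds replication and consensus so that no single party can quietly rewrite the whole chain.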

Visual Analysis Techniques

While blockchain verification methods establish a trustworthy record of media origins, visual analysis techniques offer immediate, on-the-spot tools to identify deepfakes. You can look for telltale signs such as:

- Inconsistent facial expressions that don’t match the context or seem unnaturally exaggerated.

- Lighting inconsistencies across different parts of the face or background, indicating manipulation.

- Irregular blinking patterns or unnatural eye movements.

- Subtle distortions or artifacts around facial features when zoomed in.

These cues help you quickly assess authenticity by scrutinizing facial expression nuances and lighting consistency. By paying close attention to these details, you can better distinguish genuine footage from sophisticated deepfakes, making visual analysis an essential part of your detection arsenal.

Limitations of Current Deepfake Detection Methods

Despite advances in deepfake detection technologies, current methods face significant limitations that hinder their reliability. Many detection tools struggle with evolving deepfake techniques, making it easy for sophisticated fakes to bypass them. This creates challenges in verifying media authenticity, raising ethical considerations about trust and misinformation. Additionally, false positives and negatives can lead to unjust accusations or overlooked deception, complicating legal implications. You must recognize that no single technique guarantees accuracy, and overreliance can give a false sense of security. As deepfakes improve, detection methods must continuously adapt, but their current limitations mean you should approach their results with caution. Understanding these shortcomings helps you navigate the complex landscape of digital media more responsibly.
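
A short worked calculation shows why false positives matter even for a detector that sounds accurate. The figures in the usage note below are hypothetical and chosen only to illustrate the arithmetic.

```python
def detector_outcomes(total_videos, fake_fraction, true_positive_rate, false_positive_rate):
    """Expected outcome counts for a detector applied to a mixed set of videos."""
    fakes = total_videos * fake_fraction
    genuine = total_videos - fakes
    true_positives = fakes * true_positive_rate
    false_negatives = fakes - true_positives
    false_positives = genuine * false_positive_rate
    flagged = true_positives + false_positives
    precision = true_positives / flagged if flagged else 0.0
    return {
        "true_positives": true_positives,
        "false_negatives": false_negatives,
        "false_positives": false_positives,
        "precision": precision,  # share of flagged videos that are actually fake
    }


if __name__ == "__main__":
    print(detector_outcomes(10_000, 0.01, 0.95, 0.05))
```

With 10,000 videos of which 1% are fake, a detector that catches 95% of fakes and wrongly flags 5% of genuine videos produces about 95 correct flags and about 495 false alarms, so only around 16% of flagged videos are actually fake. When fakes are rare, most alarms are false, which is why a flag should trigger further checks and not an accusation.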

Practical Tips for Evaluating Media Credibility

To effectively evaluate media credibility, you need to develop a critical eye and apply practical strategies. Strengthen your media literacy by questioning the source and considering its motives. Improve your fact-checking skills with these tips:

- Verify information through reputable fact-checking websites.

- Cross-reference details across multiple trusted outlets.

- Examine the source’s credibility, author credentials, and publication date.

- Look for signs of manipulation, like inconsistent visuals or audio anomalies.

Emerging Technologies and Future Trends in Deepfake Detection

Emerging technologies are revolutionizing deepfake detection by providing more sophisticated and accurate tools. Advances in AI and machine learning enable systems to analyze complex signals within synthetic media, making it harder for deepfakes to slip through undetected. Future trends include real-time detection methods that can analyze videos instantly, reducing the spread of malicious content. Researchers are also exploring multimodal approaches, combining visual, audio, and contextual cues for better accuracy. As these technologies develop, ethical considerations become increasingly important, especially around privacy and consent when analyzing media. You’ll see ongoing innovation aimed at staying ahead of increasingly realistic deepfakes, but balancing technological progress with ethical responsibility remains essential to ensuring trustworthiness in digital media.

Common Mistakes When Checking Media Authenticity

One common mistake when checking media authenticity is relying solely on superficial appearances, such as visual or audio quality, without digging deeper into the content’s context or metadata. This approach ignores the importance of media literacy and fact checking skills. To improve your accuracy, consider these steps:

- Examine the metadata for inconsistencies or signs of editing.

- Cross-reference the content with reputable sources to verify its origin.

- Analyze the context—does the message align with known facts or events?

- Be cautious of sensational headlines or suspiciously polished visuals, which may signal manipulation.

Staying Vigilant: Building Digital Literacy in a Fake News World

In a world flooded with misinformation, developing strong digital literacy skills is essential to distinguish fact from fiction. You need to be proactive in enhancing your media literacy by questioning sources and verifying information before accepting it as truth. Building online skepticism isn’t about distrusting everything but about critically analyzing content, especially when it looks suspicious or too sensational. Stay alert for signs of deepfakes and manipulated images or videos, and learn to cross-check with reputable outlets. Regularly update your knowledge about digital tools and fake news tactics. By cultivating these skills, you’ll become more resilient to misinformation and better equipped to navigate the complex media landscape confidently. Vigilance and education are your best defenses against deception in the digital age.

Frequently Asked Questions

How Do Deepfakes Evolve With Advancing AI Technology?

As AI advances, deepfakes become more sophisticated, increasing synthetic realism and making them harder to detect. You’ll find future deepfakes mimicking real individuals more convincingly, posing ethical dilemmas around misinformation and privacy. Staying ahead requires improving detection methods and understanding these evolving signals, so you can better identify authentic content amidst increasingly realistic fakes. The challenge is balancing technological progress with ethical considerations to prevent misuse.

Can Deepfake Detection Methods Keep up With New Manipulation Techniques?

Like a chess game, deepfake detection is a constant effort to stay ahead of new manipulation techniques. Advances in synthetic media make detection harder, while researchers keep developing smarter detection algorithms in response. These methods will likely continue to adapt and improve, but there is no guarantee they will catch every new technique, so staying alert remains vital. It’s a continuous race, and your own scrutiny is part of keeping the digital world honest.

Are There Legal Consequences for Creating or Sharing Deepfakes?

Yes, creating or sharing deepfakes can lead to legal liabilities, especially if they cause harm or violate privacy rights. Many jurisdictions are establishing regulatory frameworks to address these issues, making it illegal to produce or distribute malicious deepfakes without consent. You could face lawsuits, fines, or criminal charges if your actions breach these laws. Stay informed about local regulations to avoid serious legal consequences when dealing with deepfakes.

How Effective Are Current Deepfake Detection Tools Across Different Media Types?

Current deepfake detection tools vary in effectiveness across media types. They typically analyze visual artifacts and audio inconsistencies to identify fakes, but these signals aren’t foolproof. Tools often perform better on still images, where subtle manipulations can be caught, while video and audio can be more challenging because sophisticated deepfakes minimize artifacts and inconsistencies. Detection tools are helpful, but they aren’t completely reliable for every media type.

What Role Do Social Media Platforms Play in Mitigating Deepfake Spread?

Social media platforms mitigate the spread of deepfakes through content policies, account verification, and action on reported content. Facebook, for example, has introduced verification requirements for people who manage high-reach pages, which makes it harder to spread fake content at scale. You play a vital role too: when you report suspicious videos, you help platforms act swiftly. Your vigilance, combined with platform efforts, makes it harder for deepfakes to circulate unchecked and helps protect the online community from misinformation.

Conclusion

By building basic skills and staying skeptical, you can better spot deepfakes and dodge deception. Focus on facial flickers, flickering lights, and suspicious signals, while staying savvy with metadata and digital footprints. Don’t forget to question what you see and hear—because digital deception is evolving. Stay sharp, stay skeptical, and safeguard yourself in this sea of simulated signals, so fake faces and fabricated figures never fool you again.