Hyper-realistic immersive sound specialist DSpatial is proud to announce the availability of the all-new Reality 2.0, offered in four versions (VR, ONE, Studio, and Builder) priced according to their ‘upwardly mobile’ specifications. Reality 2.0 takes the company’s immersive sound specialism to the next level as the only existing object-based production suite built specifically for Avid’s Pro Tools® industry-standard audio production software. It ships in AAX format for direct integration, including rigs running in combination with Avid’s S6 console (for mixing Dolby Atmos®). The workflow is fully respected: a single mix serves all delivery formats without downmixing, independent of equipment and media, whether for film, music, television, XR (‘extended reality’), or even theme parks and planetariums.

DSpatial’s all-new Reality 2.0

DSpatial released Reality Builder in 2018, following 10 years of intensive research, as the first ever audio mixing system compatible with all existing immersive and non-immersive formats. It literally turned audio mixing dreams into reality for film, music, television, theme parks, planetariums, and even XR (AR, Augmented Reality; MR, Mixed Reality; and VR, Virtual Reality), thanks to an object-based, integrated, seamless solution for immersive creation that redefined the classical concepts of reverb, panning, and mixing, unified them into a single space, and provided a new paradigm for sound production and mixing.

More meaningfully, this enabled engineers to concentrate on the creative process. Reality Builder is built upon a proprietary physical modelling engine that allows users to realistically recreate real spaces and to locate, move, and rotate sound sources in real time, transporting the listener to a new, virtual yet realistic dimension, magnifying the aural experience, and recreating natural soundscapes that rival reality. As the only existing object-based production system available for Pro Tools, it gave engineers a tool to create and manipulate sound in a completely new way.

Think of it this way: Pro Tools was designed as a track-based system, so transforming it into a fully object-based system for the whole creative and delivery workflow was a remarkably complicated task, though DSpatial’s tenacity duly paid off. Other object-based systems are used only for delivery and rely on external hardware to manage and mix the objects, which implies total dependence on expensive, heavy-duty proprietary hardware. DSpatial’s singular solution, by contrast, is used inside Pro Tools for the complete creation and production process. The positions of DSpatial objects are saved as standard Pro Tools automation, allowing the mix to be modified as many times as necessary until the final rendered mix is completed.

Crucially, this means that work can be started and finished inside Pro Tools. Because the spatial mix exists only as metadata, engineers can start work at home and continue it in a large studio later. Little wonder, then, that Reality Builder found favor in Hollywood film mixing circles (closed shop as they arguably are), with the likes of Oscar-nominated Vincent Arnardi (Amélie) and fellow Oscar nominee Ron Bartlett (Blade Runner 2049) both citing DSpatial’s advancements as an indispensable part of their creative toolkit. This is perhaps best exemplified by Oscar winner Mark Mangini (Mad Max: Fury Road), who put the system to practical use on the oratorio ‘Last Whispers’.

Fast-forward to today, and Reality 2.0 has been natively conceived for immersive 3D production with no compromises involved. Instead of being designed around a user interface that mirrors old audio hardware, it is rooted in the physical world and how humans interact with it, automatically and meticulously managing an array of complex tasks that, until now, had to be carried out manually. Better still, it is format agnostic: only one mix is necessary for any existing or future delivery format, independent of equipment and media, for film, music, television, or XR. In other words, no downmixing is required. With Reality 2.0, mix engineers are at last able to exchange fully compatible sessions between different studios, each with a different speaker format or even just headphones, all without losing any spatial or immersive information.

Indeed, there are no compatibility limits! Everything content creators, mixers, and producers need is now integrated into the same workflow, so it is safe to say that the format war is officially over: all mixed tracks are directly compatible with immersive and non-immersive formats alike, including Atmos®, Auro3D®, DTS®, NHK®, Sony SDDS®, Ambisonics (FuMa and ambiX inputs; FOA FuMa, FOA ACN, or 2nd-order HOA ACN outputs), and binaural for cinematic VR and 360° video. Sound designers can therefore start working in, say, stereo, 5.1, or even binaural, and their sessions can be played back on an Atmos® stage with all spatial information retained. Because all positions and related data are recorded as Pro Tools automation, work from different sound designers can also be aggregated with Pro Tools’ Import Session Data feature.
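The Ambisonics formats listed above differ mainly in channel order and normalization. As a rough illustration (not DSpatial’s code), the following Python sketch converts a first-order FuMa B-format signal to ambiX, which uses ACN channel order and SN3D normalization. FuMa stores the W channel 3 dB down, so W is scaled by √2, and the channels are reordered from W, X, Y, Z to W, Y, Z, X. The function name is hypothetical, and higher orders would need additional per-channel gains that are not covered here.

```python
import numpy as np

def fuma_to_ambix_first_order(fuma):
    """Convert first-order FuMa B-format to ambiX (ACN order, SN3D).

    fuma: array of shape (4, n_samples) in FuMa order W, X, Y, Z.
    Returns an array of shape (4, n_samples) in ACN order W, Y, Z, X.
    """
    fuma = np.asarray(fuma, dtype=float)
    if fuma.shape[0] != 4:
        raise ValueError("expected 4 first-order channels (W, X, Y, Z)")
    w, x, y, z = fuma
    # FuMa records W at -3 dB (a 1/sqrt(2) gain); SN3D does not, so undo it.
    # At first order the X, Y and Z gains already match SN3D; only the order changes.
    return np.stack([w * np.sqrt(2.0), y, z, x])
```

For example, a single FuMa frame of W = 1, X = 2, Y = 3, Z = 4 comes out as √2, 3, 4, 2 in ambiX order.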

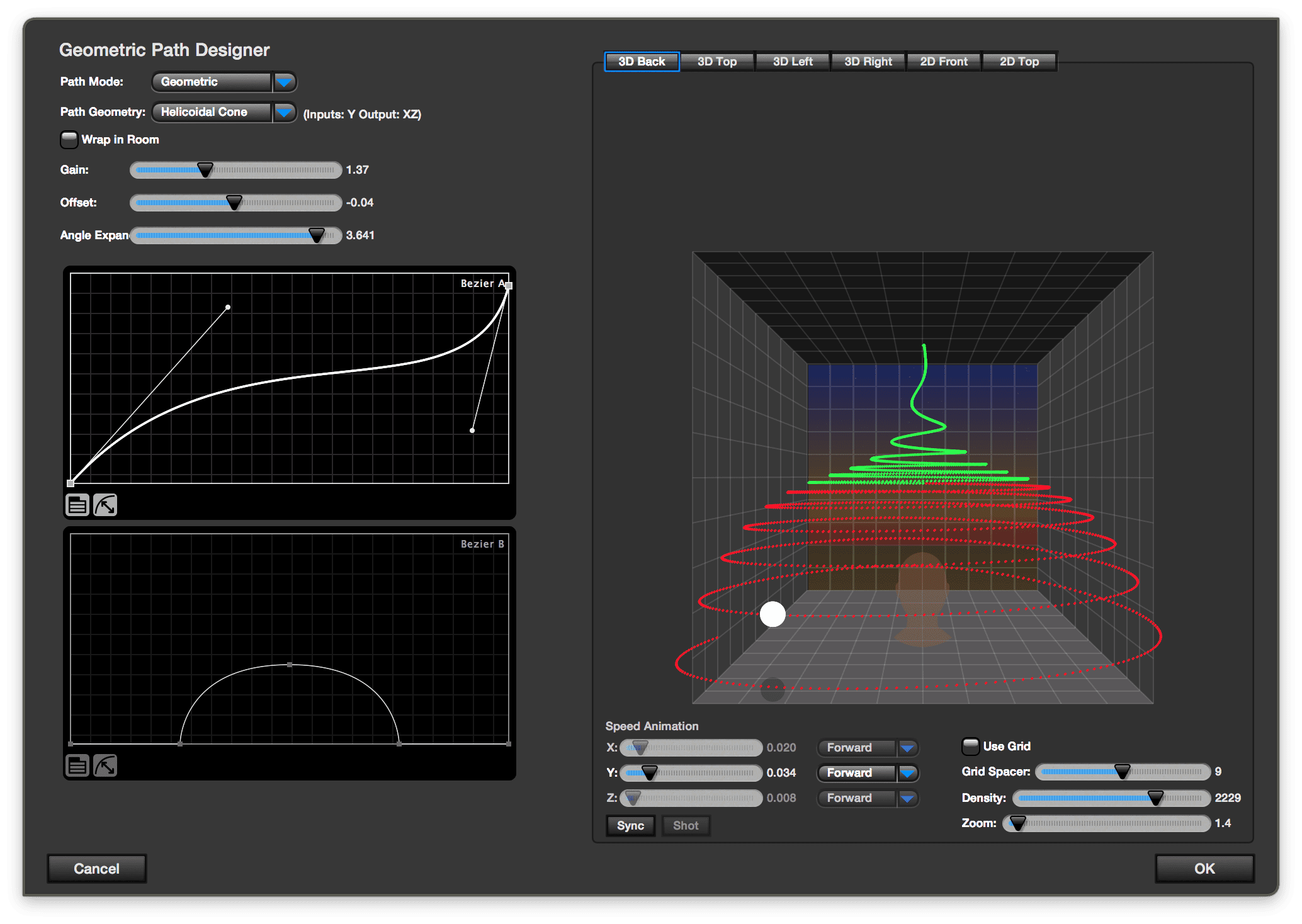

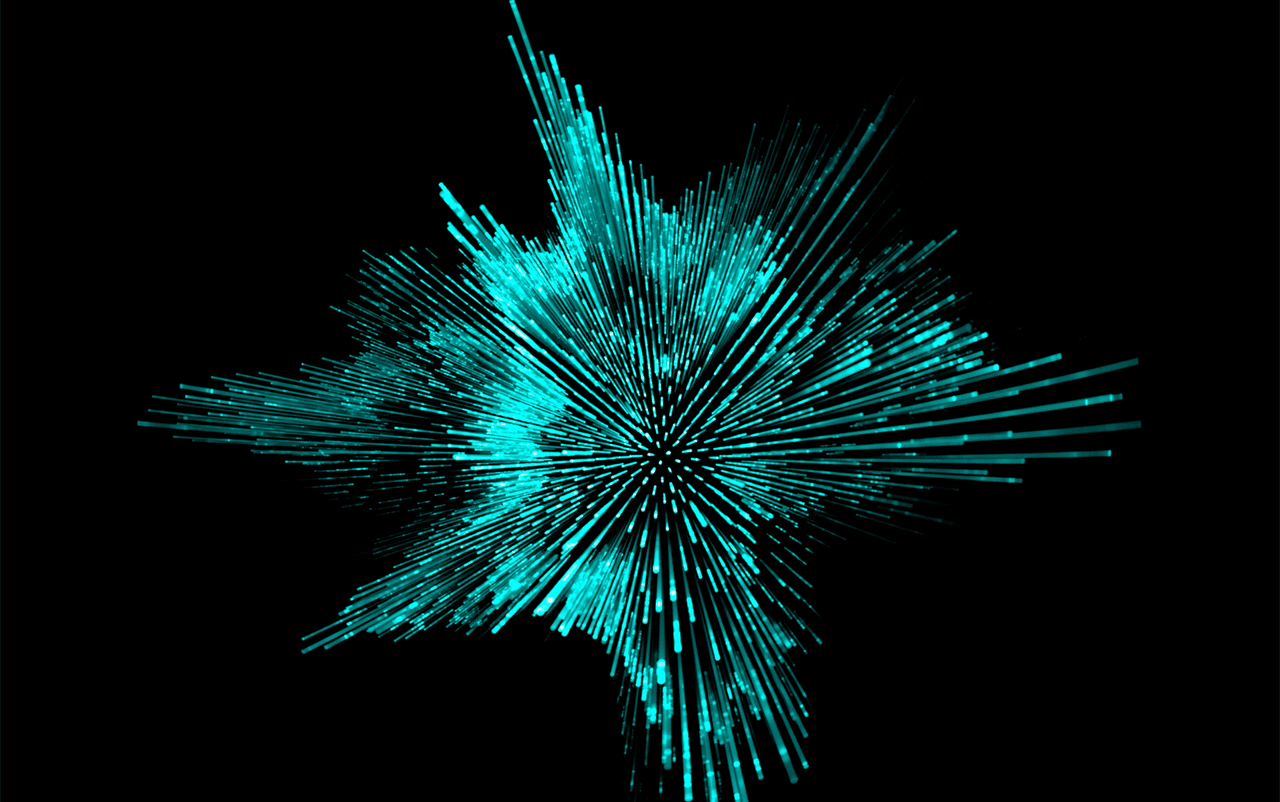

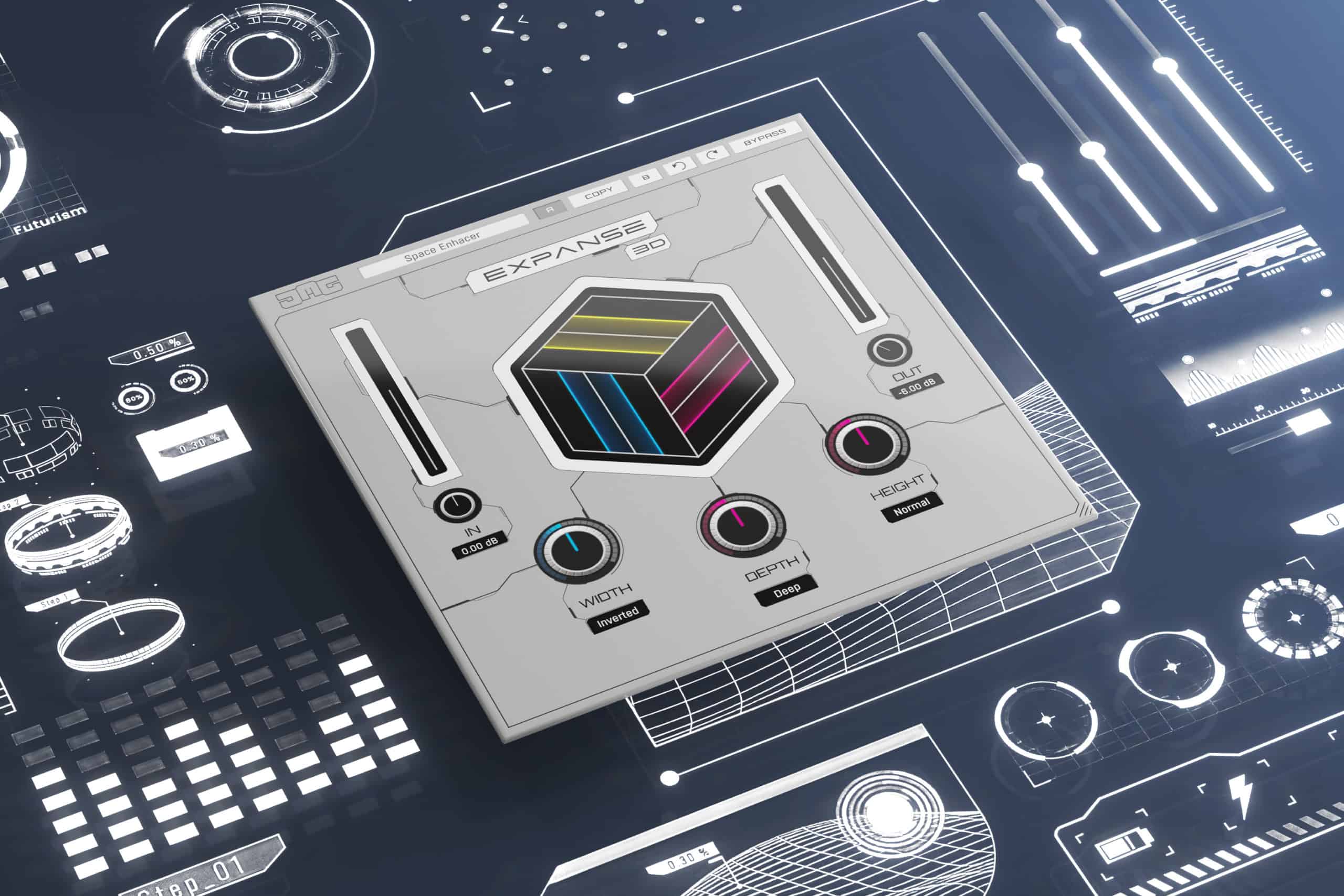

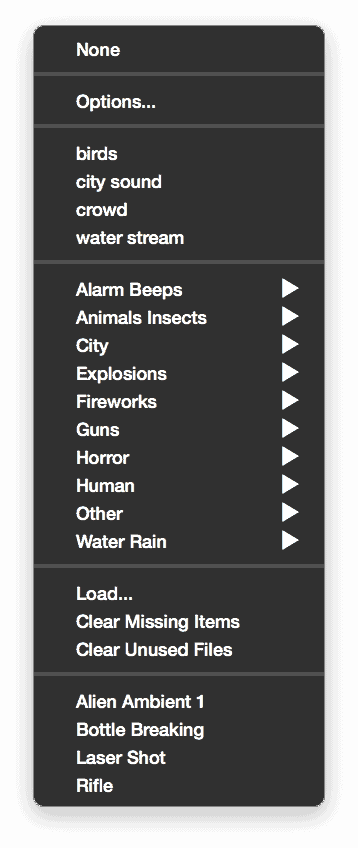

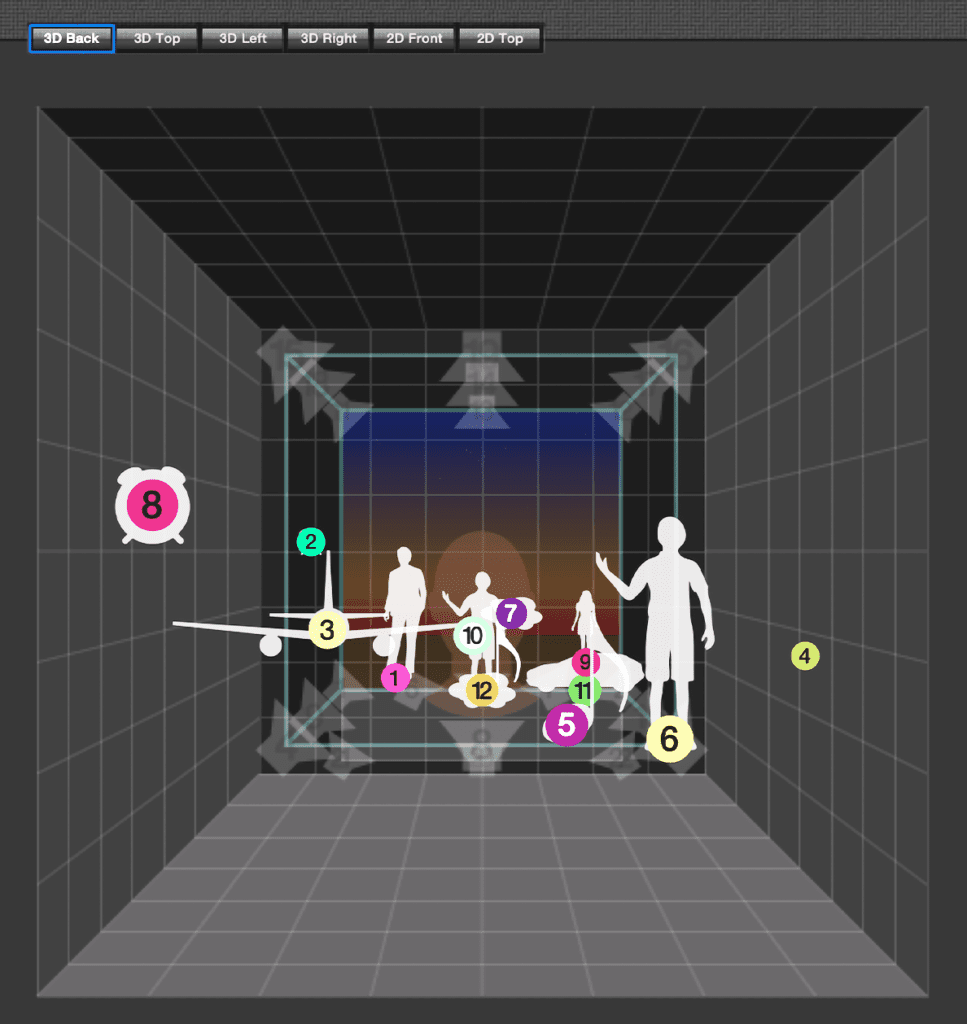

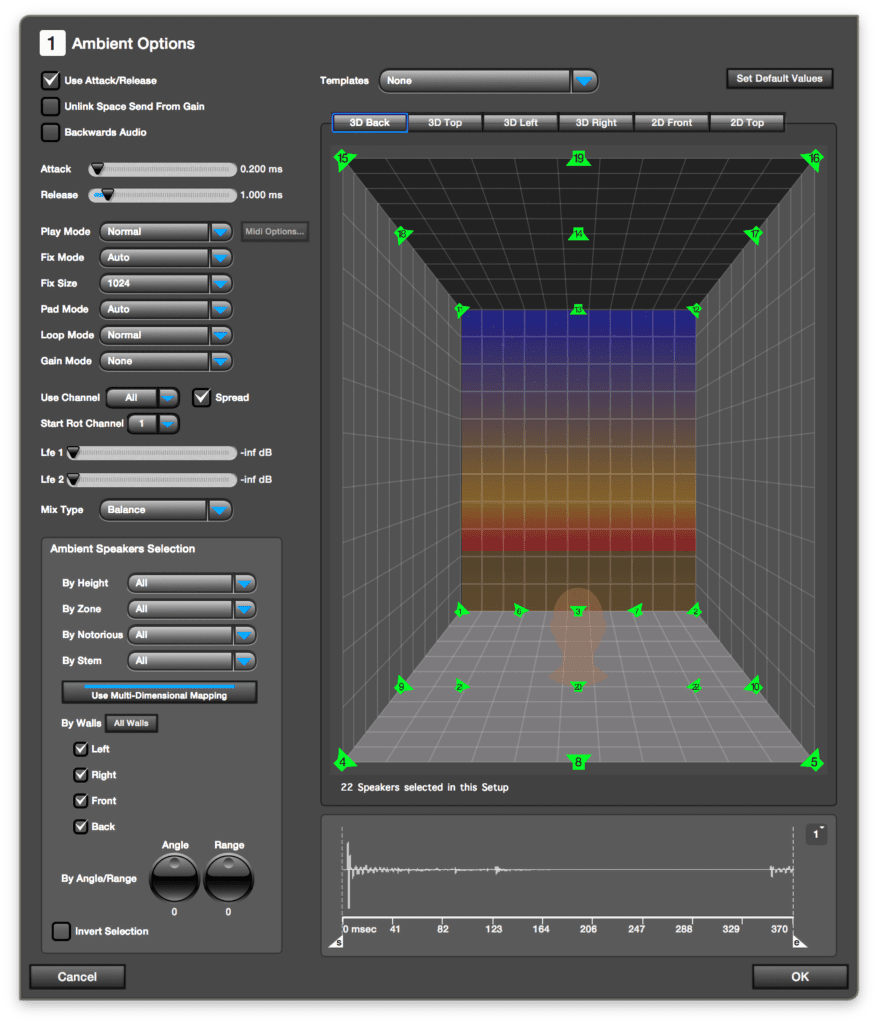

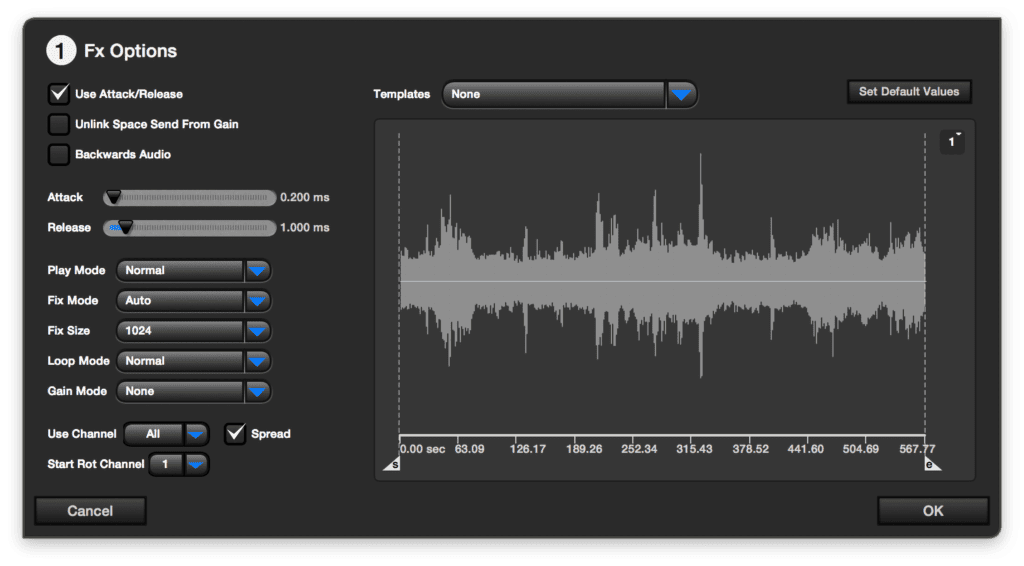

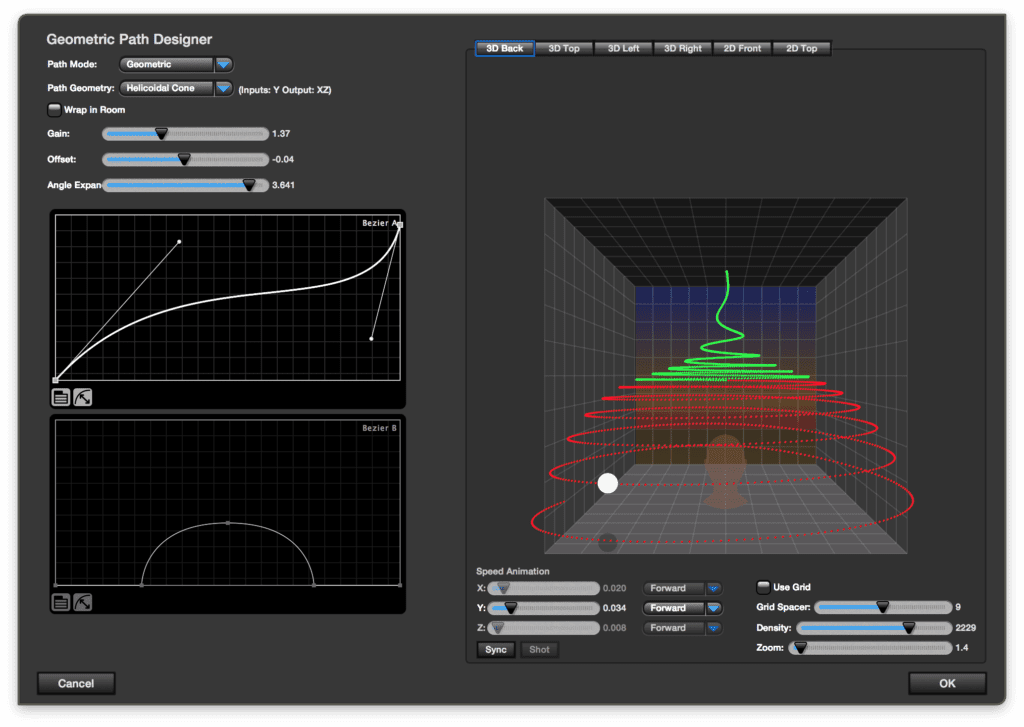

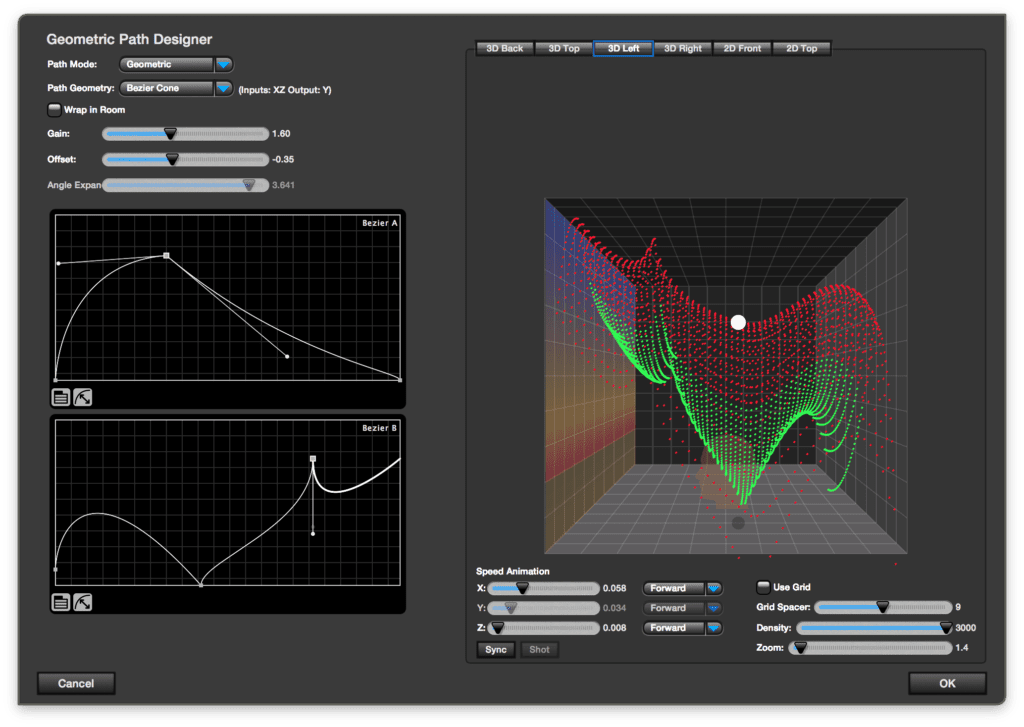

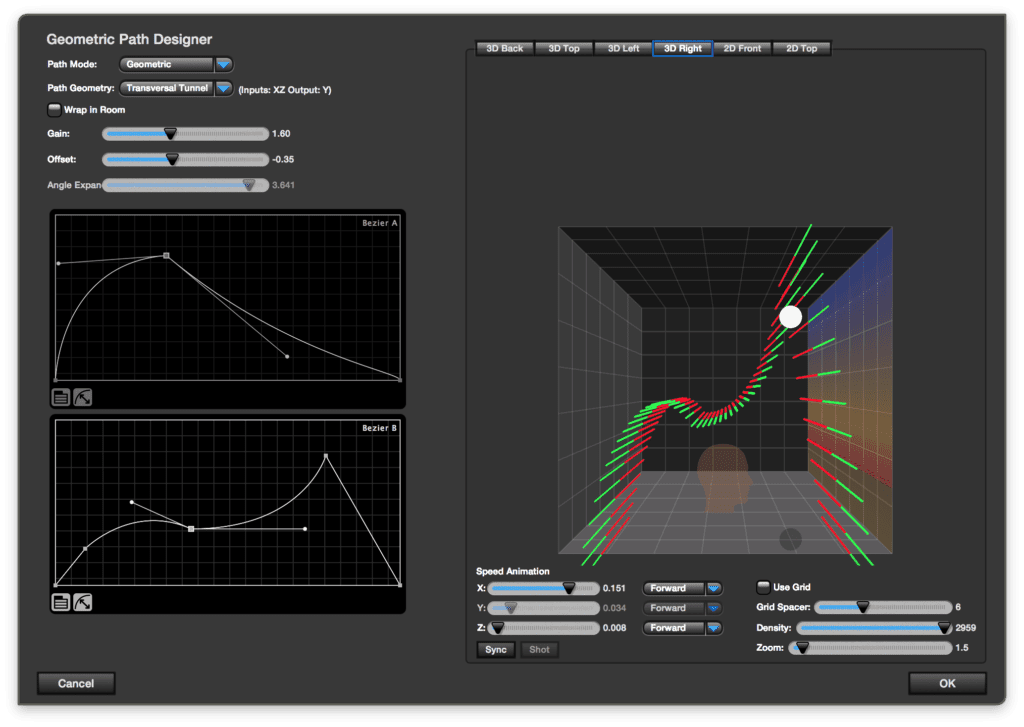

Fortunately for all concerned, Reality 2.0 users can simply start working with standard AAX plug-ins inserted on each input channel. Cleverly, those plug-ins provide most of the available functions for working seamlessly within the classic Pro Tools workflow. Proximity, Inertia, and Doppler parameters, as well as reverberation send, Immersive Tools, and more, are all controllable from the same panel. Each input has its own spatial location and so effectively becomes 3D multi-generated audio, with all automation possibilities on tap. Immersive Tools and Ambients, meanwhile, allow broadcasters, content creators, and producers to easily recreate hyper-realistic sonic landscapes at a touch. Moreover, multiple predefined and programmable sound trajectories can be applied automatically, and an extensive list of geometric shapes and a library of ambient sounds are provided. The limit literally is… imagination!
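A predefined trajectory of this kind ultimately boils down to position automation sampled over time. The minimal Python sketch below generates breakpoints for a circular orbit. It assumes a hypothetical coordinate convention (listener at the origin, x to the right, y to the front, z up) that is not necessarily DSpatial’s, and the function name is illustrative only.

```python
import math

def circular_trajectory(radius_m, height_m, period_s, duration_s, rate_hz=100.0):
    """Return (time_s, x, y, z) breakpoints for a source orbiting the listener.

    Hypothetical convention: listener at the origin, x to the right,
    y to the front, z up. The orbit starts straight ahead and moves
    clockwise (seen from above), completing one lap every period_s seconds.
    """
    steps = int(duration_s * rate_hz)
    points = []
    for i in range(steps + 1):
        t = i / rate_hz
        angle = 2.0 * math.pi * t / period_s
        x = radius_m * math.sin(angle)
        y = radius_m * math.cos(angle)
        points.append((t, x, y, height_m))
    return points
```

Each breakpoint would still have to be mapped onto whatever position parameters a given panner automates; that mapping is not shown here.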

Several exclusive features are well worth highlighting for putting Reality 2.0 into its rightful (pole) position as the only existing object-based production suite specifically for Avid’s Pro Tools. First, real and unreal spaces can be recreated in real time with a simple touch: indoor and outdoor spaces can be simulated with ease, and a library of more than 200 spaces is included. Reality 2.0 also recreates the real sound of proximity and distance from the listener’s perspective; object positions are not just simulated with panning but make use of all available loudspeakers to reproduce complex reflections within the sound field. Furthermore, Reality 2.0 enables engineers to easily and realistically locate, move, rotate, collapse, or explode sound sources in real time, even behind the walls of the listening room! Reflection, refraction, diffraction, and acoustic scattering produced by walls, objects, and doors are exquisitely simulated down to the finest detail. Hard-to-reproduce properties like inertia and the Doppler effect are created automatically simply by moving the sound source, so no unpleasant phasing or other unruly artefacts are audible! Additionally, DSpatial’s proprietary physical modelling engine includes up to 96 real-time convolutions, making it the most powerful convolution reverb in the world! Whether for dialog, foley, sound design, or any other immersive or VR ambiences, Reality 2.0 users also have the world’s first true IR (Impulse Response) modeller at their fingertips: DSpatial’s 96-channel immersive convolution reverb includes the first ever N-channel IR modeller, producing ultra-realistic, immersive IRs for up to 48 channels!
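
The claim that the Doppler effect appears simply by moving the source has a straightforward physical basis. If each source is delayed by its propagation time to the listener, a changing distance changes that delay, and the resulting speed-up or slow-down of playback is the Doppler shift. The Python sketch below is an illustration under stated assumptions, not DSpatial’s physical modelling engine. It applies a per-sample delay of distance / c with linear interpolation, plus an inverse-distance gain. It assumes c = 343 m/s and uses the distance at reception time, a first-order approximation that holds for speeds well below the speed of sound. The function name is hypothetical, and inertia is not modelled.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed (dry air at about 20 degrees C)

def render_moving_source(signal, distances, sample_rate, ref_distance=1.0):
    """Delay and attenuate a mono signal for a source at a time-varying distance.

    signal:      mono source samples
    distances:   source-to-listener distance in metres, one value per sample
    sample_rate: samples per second
    Returns the signal as heard at the listener.
    """
    signal = np.asarray(signal, dtype=float)
    distances = np.asarray(distances, dtype=float)
    if distances.shape != signal.shape:
        raise ValueError("need one distance value per sample")
    n = np.arange(signal.size, dtype=float)
    # Propagation delay in (fractional) samples. When it changes over time,
    # the read position speeds up or slows down: that is the Doppler shift.
    delay = distances / SPEED_OF_SOUND * sample_rate
    read_pos = n - delay
    # Linear interpolation between neighbouring samples; reads before the
    # first sample (sound that has not arrived yet) return silence.
    delayed = np.interp(read_pos, n, signal, left=0.0, right=0.0)
    # Inverse-distance law, clamped so sources closer than ref_distance
    # are not boosted without limit.
    gain = ref_distance / np.maximum(distances, ref_distance)
    return delayed * gain
```

With a constant distance this reduces to a fixed delay and gain; only when the distance changes does the pitch shift.
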
Cunningly, for smooth changes, two complete reverberations are instantiated simultaneously in two separate layers; that’s two instances of 48 convolutions running at once, which allows fluctuations in reverb to be programmed and edited with Pro Tools automation. Last but not least, DSpatial software currently supports eight professional-grade touch screen models with up to 10-point multi-touch, perfect for positioning sources in an equirectangular view while simultaneously programming distances in the top view.
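
One plausible way to picture the two-layer arrangement is two multichannel convolutions whose outputs are crossfaded under automation control. In this illustrative Python example (hypothetical function names; FFT-based convolution via NumPy, not DSpatial’s engine), each layer convolves a mono dry signal with an N-channel impulse response, one IR per output channel, and an equal-power crossfade blends the two layers. With 48-channel IRs, the two layers together amount to 96 convolutions.

```python
import numpy as np

def multichannel_convolve(dry, irs):
    """Convolve a mono dry signal with an N-channel impulse response.

    dry: 1-D array of samples; irs: array of shape (channels, taps).
    Returns an array of shape (channels, len(dry) + taps - 1).
    """
    dry = np.asarray(dry, dtype=float)
    irs = np.atleast_2d(np.asarray(irs, dtype=float))
    out_len = dry.size + irs.shape[1] - 1
    # Zero-pad to at least out_len (rounded up to a power of two for FFT speed)
    # so that the circular FFT convolution equals linear convolution.
    nfft = 1 << (out_len - 1).bit_length()
    spectrum = np.fft.rfft(dry, nfft) * np.fft.rfft(irs, nfft, axis=1)
    return np.fft.irfft(spectrum, nfft, axis=1)[:, :out_len]

def two_layer_reverb(dry, irs_a, irs_b, mix):
    """Blend two reverb layers with an equal-power crossfade.

    mix: 0.0 = layer A only, 1.0 = layer B only; either a scalar or one
    value per output sample (for example, a curve read from automation).
    """
    wet_a = multichannel_convolve(dry, irs_a)
    wet_b = multichannel_convolve(dry, irs_b)
    if wet_a.shape != wet_b.shape:
        raise ValueError("both layers need IRs of the same shape")
    mix = np.broadcast_to(np.asarray(mix, dtype=float), (wet_a.shape[1],))
    return np.cos(mix * np.pi / 2) * wet_a + np.sin(mix * np.pi / 2) * wet_b
```

The equal-power law keeps the combined level roughly constant while the two layers are blended, which is one common choice for this kind of crossfade.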

All these advancements naturally come at a cost, yet DSpatial admirably acknowledges that not everyone needs to mix in up to 48.2, which is why Reality 2.0 is available in VR, ONE, Studio, and Builder versions, priced according to their ‘upwardly mobile’ specifications as outlined in DSpatial’s convenient online comparison chart (http://dspatial.com/features-list/). Following Apple’s move to include head tracking in its new AirPods Pro in-ear headphones, even the entry-level Reality VR is perfectly positioned to address a growing need for binaural and Ambisonics content in today’s increasingly immersive sound world.


Pricing and Availability

Reality VR is available to purchase for €495.00 from DSpatial’s online Store as an object-based production suite in AAX format for Pro Tools (11, 12, Ultimate) on macOS (10.9 and above). Its many standout features include support for Ambisonics and binaural outputs, 100 inputs (mono), and two LFE channels.

Reality ONE is available to purchase for €995.00 from DSpatial’s online Store as an object-based production suite in AAX format for Pro Tools (11, 12, Ultimate) on macOS (10.9 and above). Among many more standout features, it adds support for up to 7.1 speakers/outputs.

Reality Studio is available to purchase for €1,495.00 from DSpatial’s online Store as an object-based production suite in AAX format for Pro Tools (11, 12, Ultimate) on macOS (10.9 and above). Among many more standout features, it adds support for up to 13.2 speakers/outputs, three speaker output layers, 20 bed inputs (up to 7.1.2), Dolby Atmos, Auro 3D, Space/top-rear, and Speaker set designer.

Reality Builder is available to purchase for €2,595.00 from DSpatial’s online Store as an object-based production suite in AAX format for Pro Tools (11, 12, Ultimate) on macOS (10.9 and above). Among many more standout features, it adds support for up to 48.2 speakers/outputs, four speaker output layers, V.O.G. (Voice Of God) output, and NHK 22.2, plus an exclusive Room Builder.
